Ehh, How Do You Say…

The use of fillers in language is not limited to spoken communication.

In American Sign Language, UM can be signed with open-8 held at chin, palm in, eyebrows down (similar to FAVORITE); or bilateral symmetric bent-V, palm out, repeated axial rotation of wrist (similar to QUOTE).

This is interesting to me because it helps differentiate communication which is unfolding in time from communication which is spatially inscribed. When we speak informally, most people use some filler words, sounds, and gestures. Some support for embodied cognition theories has come from studies which show that

“Gestural Conceptual Mapping (congruent gestures) promotes performance. Children who used discrete gestures to solve arithmetic problems, and continuous gestures to solve number estimation, performed better. Thus, action supports thinking if the action is congruent with the thinking.”

The effective gestures that they refer to aren’t exactly fillers, because they mimic or indicate conceptual experiences in a full-body experience. The body is used as a literal metaphor. Other gestures, however, seem relatively meaningless, like filler. There seem to be levels of filler usage which range in frequency and intensity from the colorful to the neurotic, in which generic signs are used as ornament/crutch, or like a carrier tone to signify when the speaker is done speaking (know’am’sayin?’).

In written language, these fillers are generally only included ironically or to simulate conversational informality. Formal writing needs no filler because there is no relation in real time between participating subjects. The relation with written language was traditionally as an object. The book can’t control whether the reader continues to read or not, so there is no point in gesturing that way. With the advent of real time text communication, we have experimented with emoticons and abbreviations to animate the frozen medium of typed characters. In this article, John McWhorter points out that ‘LOL isn’t funny anymore’ – that it has entered sort of a quasi-filler state where it can mean many different things or not much of anything.

In terms of information entropy, fillers are maximally entropic. Their meaning is uncertain, elastic, irrelevant, but also, and this is cryptic but maybe significant…they point to the meta-conversational level. They refer back to the circumstance of the conversation rather than the conversation itself. As with the speech carrier tone fillers like um… or ehh…, or hand gestures, they refer obliquely to the speaker themselves, to their presence and intent. They are personal, like a signature. Have you ever noticed that when people you have known die, it is their laugh which is most immediately memorable? Or their quirky use of fillers? High information entropy ~ High personal input. Think of your signature compared to typing your name. Again, signatures occur in real time; they represent a moment of subjective will being expressed irrevocably. The collapse of information entropy which takes place in formal, traditional writing is a journey from the private perpetual here of subjectivity to the world of public objects. It is a passage* from the inner semantic physics, through initiative or will, striking a thermodynamically irreversible collision with the page. That event, I think, is the true physical nature of public time – instants where private affect is projected as public effect.

Speakers who are not very fluent in a language seem to employ a lot of fillers. For one thing they buy time to think of the right word, and they signal an appeal for patience, not just on a mechanical level (more data to come, please stand by), but on a personal level as well (forgive me, I don’t know how to say…). Is it my imagination, or are Americans sort of an exception to the rule, preferring stereotypically to yell words slowly rather than using the ‘ehh’ filler? Maybe that’s not true, but the stereotype is instructive as it implies an association between being pushy and adopting the more impersonal, low-entropy communication style.

This has implications for AI as well. Computers can blink a cursor or rotate an hourglass icon at you, and that does convey some semblance of personhood to us, I think, but is it real? I say no. The computer doesn’t improve its performance by these gestures to you. What we might subtly read as interacting with the computer personally in those hourglass moments is a figment of the pathetic fallacy rather than evidence of machine sentience. It has a high information entropy in the sense that we don’t know what the computer is doing exactly, if it’s going to lock up or what, but it has no experiential entropy. It is superficially animated and reflects no acknowledgement of the user. Like the book, it is thermodynamically irreversible as far as the user is concerned. We can only wait and hope that it stops hourglassing.

The meanings of filler words in different languages are interesting too. They say things like “you see/you know”, “it means”, “like”, “well”, and “so”. They talk about things being true or actual. “Right?” “OK?”. Acknowledgment of inter-subjective synch with the objective perception. Agreement. Positive feedback. “Do you copy?” relates to “like”…similarity or repetition. Symmetric continuity. Hmm.

*orthomodular transduction to be pretentiously precise

Biocentrism Demystified: A Response to Deepak Chopra and Robert Lanza’s Notion of a Conscious Universe.

1. I hope we all agree that our information about facts is incomplete, and will always remain so, at least in the foreseeable future.

2. The only reality that makes sense to me is what Stephen Hawking calls ‘model-dependent reality’ (MDR).

3. Other uses of the word ‘reality’ (other than MDR) imply ‘absolute reality’. If you disagree with this statement, please try defining ‘absolute reality’ in a logical way, using words which mean the same thing to everybody. My belief is that you will not be able to do that, and that means that MDR is all you have for discussion purposes.

4. Naturally, there can be many models of reality. So which of the MDRs is the right one, and who will decide that? In view of (1) above, this is a hopeless situation, and that is why I avoid getting into philosophical discussions.

5. At any time in human history, there are more humans favouring a particular MDR over other MDRs. Let us call it the majority MDR (MMDR).

6. An MMDR may well prove to be wrong when we humans acquire more information; from then we have a new MMDR, till even that gets demolished.

7. I believe that materialism is a better MDR than its opposite (called idealism, subjectivism, or whatever). For more on this, please read my article at http://nirmukta.com/2011/06/19/stephen-hawkings-grand-design-for-us/. Here is an excerpt from that article:

‘There are several umbrella words like ‘consciousness’, ‘reality’, etc., which have never been defined rigorously and unambiguously. H&M argue that we can only have ‘model-dependent reality’, and that any other notion of reality is meaningless.

Does an object exist when we are not viewing it? Suppose there are two opposite models or theories for answering this question (and indeed there are!). Which model of ‘reality’ is better? Naturally the one which is simpler and more successful in terms of its predicted consequences. If a model makes my head spin and entangles me in a web of crazy complications and contradictory conclusions, I would rather stay away from it. This is where materialism wins hands down. The materialistic model is that the object exists even when nobody is observing it. This model is far more successful in explaining ‘reality’ than the opposite model. And we can do no better than build models of whatever there is to understand and explain.

In fact, we adopt this approach in science all the time. There is no point in going into the question of what is absolute and unique ‘reality’. There can only be a model-dependent reality. We can only build models and theories, and we accept those which are most successful in explaining what we humans observe collectively. I said ‘most successful’. Quantum mechanics is an example of what that means. In spite of being so crazily counter-intuitive, it is the most successful and the most repeatedly tested theory ever propounded. I challenge the creationists and their ilk to come with an alternative and more successful model of ‘reality’ than that provided by quantum mechanics. (I mention quantum mechanics here because the origin of the universe, like every other natural phenomenon, was/is governed by the laws of quantum mechanics. The origin of the universe was a quantum event.)

A model is a good model if: it is elegant; it contains few arbitrary or adjustable parameters; it agrees with and explains all the existing observations; and it makes detailed and falsifiable predictions.’

> “Other uses of the word ‘reality’ (other than MDR) imply ‘absolute reality’. If you disagree with this statement, please try defining ‘absolute reality’ in a logical way,”

Absolute reality is the capacity for perceptual participation, aka, sensory-motor presentation, aka qua(lia-nta). That is the bare-metal prerequisite for all forms of order or matter, subject or object. Not only metaphysics but meta-ontology. The cosmos is not something which is, the cosmos actually invents “is” by “seeming not to merely seem”.

Please try defining ‘model’ in a way that does not assume some form of sensory presentation and participation. What is a model except a sensory experience which seems to refer our minds to another?

While I agree that no participant within a given experience has an absolute perspective of that experience, I disagree that the MDR is a solipsistic ‘model’ which is generated locally. The fact that we recognize the relativism of perceptual inertial frames (PIF = my term for MDR) is itself a clue that the deeper reality is this very capacity for relativism of perspective. Although the relativism itself may be the only final commonality among all perspectives, that commonality is not a tabula rasa. We can say things about this ‘common sense’ – things which have to do with contrasts and inverted symmetry, with proximity and intensity, relationship, identity, and division. These principles are beneath all forms and functions, all sensations and ideas, substances and patterns, and through them, we can infer more elusive fundamentals. Pattern recognition which is beyond pattern. Gestalt habits which are beyond mereology or cardinality…higher octaves of simplicity. Trans-rational, non-quantitative properties.

All mechanisms and all physics rely on a root expectation of sanity and continuity – of causality and memory, position, recursive enumeration, input/output, etc. If you are going to get rid of absolute reality, then you have to explain the emergence of the first MDR – what is modeling? Why does the universe model itself rather than simply ‘be’ what it is?

My solution is to accept that this assumed ‘modeling’ is physics itself, and that physics is experienced-embodied relativity. In the absolute sense, matter is a special case of a more general (non-human) perception or sense. Not a continuum or a ZPE vacuum flux, but ordinary readiness to experience private sensory affects and produce (intentionally or not) public facing motor effects. What the universe uses to model is not a mathematical abstraction floating in a vacuum, but a concrete participatory phenomenon, which we know as human beings to be sensory-motor participation. Not everything is alive biologically, but everything that seems to us to exist naturally as matter probably has a panexperiential interaction associated with it on some level of description. It’s about turning the field-force model inside out, turning away from the de-personalized objectivity of the last few centuries and toward a realization of personal involvement in genuine presentations (customized and filtered though they may be) rather than assembled representations.

The MMDR should not embrace materialism or idealism by default because one seems simpler than the other. We should accept only a solution which honors the full spectrum of possible experiences in the cosmos, from the most empirically public to the most esoterically private. This does not mean weighting the ravings of one lunatic the same as a law of gravity, but rather acknowledging that if there is a lunatic, then the universe is in some sense potentially crazy also, and within that crazy is something even more interesting and universal than gravity…an agenda for aesthetic proliferation… a Multisense Realism.

Illusion is a meaningless term in science as far as I can see. Illusion is about an experience failing to meet expectations of consistency across perceptual frames (models)…except that we know that inconsistency is likely the only such consistency, beyond the root common sense. Whatever illusions we experience as people are not necessarily absent on other levels of inspection. Quantum illusions, classical illusions, biological illusions, etc. Every instrument relies on conditions which create their own confirmation bias, including the human mind. We should not, however, make the mistake of allowing non-human, inanimate instruments to tell us what our reality is. They can’t see our consciousness in the first place, remember? Our human equipment is not as sensitive in detecting public phenomena (we cannot see more than a small range of E-M, etc.), but neither is a gas spectrometer sensitive in detecting human privacy.

We see that when we adopt the frame of mechanism, idealism seems pathologically naive, and if we adopt the frame of idealism, mechanism seems pathologically cynical. This should be regarded along the lines of the double-slit test: as evidence that our assumptions are not the whole story, and as a prompt to seek a deeper unity than mechanistic or idealistic appearances.

A Deeper Look at Peripheral Vision

When peripheral vision is being explained, an image like the one on the right is often used to show how only a small area around our point of focus is defined in high resolution. The periphery is shown to be blurry. While this gets the point across, I think that it actually obscures the subtle nature of perception.

If I focus on some part of the image on the left, while it is true that my visual experience of the other quadrants is diminished, it is somehow less available experientially rather than degraded visually. At all times I can clearly tell the difference between the quality of the left image and the right image. If I focus on a part of the right hand image, the unfocused portion does not blur further into a uniform grey, but retains the suggestion of separate fuzzy units.

If peripheral vision were truly a blur, I would also expect that when focusing on the left hand image, the peripheral boxes would look more like the ones on the right, but they don’t. I can see that the peripherized blocks of the left image are not especially blurry. No matter how I squint or unfocus or push both images way into the periphery of my sight, I can easily tell that the two images are quite different. I can’t resolve detail, but I can see that there is potentially detail to be resolved. If I look directly at any part of the blurry image on the right, I can easily count the fuzzies, even though they are blurred. By contrast, with the image on the left, I can’t count the number of blocks or dots that are there even though I can see that they are block-like. There is an attenuation of optical acuity, but not in a way which diminishes the richness of the visual textures. There is uncertainty but only in a top-down way. We still have a clear picture of the image as a whole, but the parts which we aren’t looking at directly are seen as in a dream – distinct but generic, and psychologically slippery.

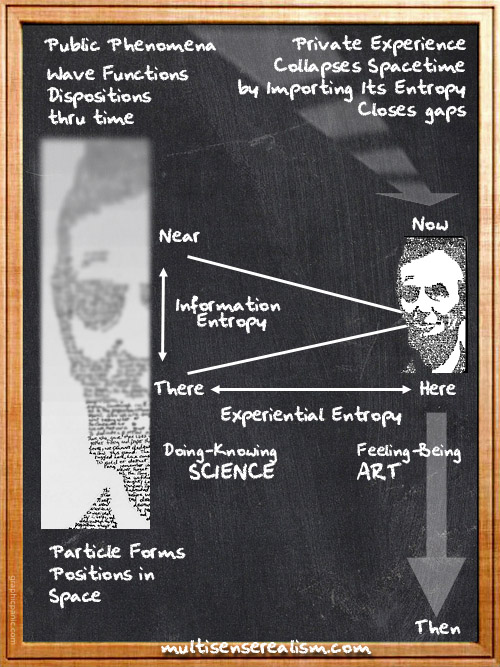

What I think this shows is that there are two different types of information-related entropy and two different categories of physics – one public and quantitative, and one private and qualitative or aesthetic. Peripheral vision is not a lossy compression in any aesthetic sense. If perception were really driven by bottom up processing exclusively, we should be able to reproduce the effect of peripheral vision in an image literally, but we can’t. The best we can do is present this focused-in-the-center, blurry-everywhere-else kind of image which suggests peripheral vision figuratively, but the aesthetic quality of the peripheral experience cannot be represented.

I suggest that the capacity to see is more than a detection of optical information, and it is not a projection of a digital simulation (otherwise we would be able to produce the experience literally in an image). Seeing is the visual quality of attention, not a quantity of data. It is not only a functional mechanism to acquire data, it is more importantly an aesthetic experience.

The Doctor Prescribes Brian Eno – Blog of the Long Now

In the video, Brian Eno brings up two points which relate to the last posts about intelligence, wisdom, and their relation to entropy.

“I think that one of the things that art offers you is the chance to surrender, to not be in control any longer”.

Right. That makes sense. Art debits the private side of the phenomenal ledger. The side which is concerned with the loaning of time to be returned to the Absolute with interest. Wisdom, especially in the exalted forms of Eastern philosophy, is all about surrender and flow. Dissolving of the ego. The ego is the public interface for the private self, and the seat of the kind of intelligence addressed by causal entropic forces – machine intelligence, strategic effectiveness. Important locally but trivial ultimately, in the face of eternity.

On the other side of the ledger is the chance to strive and control using intelligence. Western philosophy tends toward cultivating objectivity and critical thinking. It is a canon of skeptical intelligence and empiricism from which science emerged. Clear thinking and resisting the desire to surrender are what debit the public facing side of the ledger. Art and Science then, are the sense and motive of human culture…the tender and tough, the wag and wegh, and yes, the yin and yang.

Eno also says “The least interesting sound in the universe, probably, is the sine wave. It’s the sound of nothing happening. It’s the sound of perfection, and it’s boring. As David Byrne said in his song, Heaven is a place where nothing ever happens. Distortion is character, basically. In fact, everything we call character is the deviation from perfection. So, perfection to me, is characterlessness.”

Aha, yes. Tying this back to the Absolute, it is the diffraction, the shattering of timelessness with spacetime (aka Tsimtsum) which creates the third element – entropy. The Absolute can only be completed by its own incompleteness, and entropy is the diagonalization of experience into public facing entropies and private facing reflections of the Absolute…quanta and qualia, science and art.

Intelligence Maximizes Entropy?

A new idea linking intelligence to entropy is giving me something to think about.

“[…] intelligent behavior emerges from the ‘physical process of trying to capture as many future histories as possible’”

This sounds familiar to me. I have been calling my cosmological model the Sole Entropy Well, or Negentropic Monopoly, in which all signals (experiences) are diffracted from a single eternal experience, the content of which is the capacity to experience. I think that this is the same principle in this paper, called “causal entropic forces”, except in reverse. I wrote recently about how intelligence is rooted in public space while wisdom is about private time.

I think that causal entropic forces are about preserving a ‘float’ of high entropy on top of time. It’s like juggling – you want to suspend as many potentials as you can at “a” time and compensate for any potential threats before they can happen “in” time. Behind the causal entropic force, it seems to me that there must always be a core which is not entropic. That which seeks to entropically harness the future is itself motivated by the countervailing force for itself – to escape the harness of entropy.

None of this, however, addresses the Hard Problem. To the contrary, if this model is correct, then it is even more difficult to justify the existence of aesthetic sense, since all of the public effects of intelligence can be explained by thermodynamics.

Article: “A single equation grounded in basic physics principles could describe intelligence and stimulate new insights in fields as diverse as finance and robotics, according to new research.

Alexander Wissner-Gross, a physicist at Harvard University and the Massachusetts Institute of Technology, and Cameron Freer, a mathematician at the University of Hawaii at Manoa, developed an equation that they say describes many intelligent or cognitive behaviors, such as upright walking and tool use.

The researchers suggest that intelligent behavior stems from the impulse to seize control of future events in the environment. This is the exact opposite of the classic science-fiction scenario in which computers or robots become intelligent, then set their sights on taking over the world.

The findings describe a mathematical relationship that can “spontaneously induce remarkably sophisticated behaviors associated with the human ‘cognitive niche,’ including tool use and social cooperation, in simple physical systems,” the researchers wrote in a paper published today in the journal Physical Review Letters.

“It’s a provocative paper,” said Simon DeDeo, a research fellow at the Santa Fe Institute, who studies biological and social systems. “It’s not science as usual.”

Wissner-Gross, a physicist, said the research was “very ambitious” and cited developments in multiple fields as the major inspirations.

The mathematics behind the research comes from the theory of how heat energy can do work and diffuse over time, called thermodynamics. One of the core concepts in physics is called entropy, which refers to the tendency of systems to evolve toward larger amounts of disorder. The second law of thermodynamics explains how in any isolated system, the amount of entropy tends to increase. A mirror can shatter into many pieces, but a collection of broken pieces will not reassemble into a mirror.

The new research proposes that entropy is directly connected to intelligent behavior.

“[The paper] is basically an attempt to describe intelligence as a fundamentally thermodynamic process,” said Wissner-Gross.

The researchers developed a software engine, called Entropica, and gave it models of a number of situations in which it could demonstrate behaviors that greatly resemble intelligence. They patterned many of these exercises after classic animal intelligence tests.

In one test, the researchers presented Entropica with a situation where it could use one item as a tool to remove another item from a bin, and in another, it could move a cart to balance a rod standing straight up in the air. Governed by simple principles of thermodynamics, the software responded by displaying behavior similar to what people or animals might do, all without being given a specific goal for any scenario.

“It actually self-determines what its own objective is,” said Wissner-Gross. “This [artificial intelligence] does not require the explicit specification of a goal, unlike essentially any other [artificial intelligence].”

Entropica’s intelligent behavior emerges from the “physical process of trying to capture as many future histories as possible,” said Wissner-Gross. Future histories represent the complete set of possible future outcomes available to a system at any given moment.

Wissner-Gross calls the concept at the center of the research “causal entropic forces.” These forces are the motivation for intelligent behavior. They encourage a system to preserve as many future histories as possible. For example, in the cart-and-rod exercise, Entropica controls the cart to keep the rod upright. Allowing the rod to fall would drastically reduce the number of remaining future histories, or, in other words, lower the entropy of the cart-and-rod system. Keeping the rod upright maximizes the entropy. It maintains all future histories that can begin from that state, including those that require the cart to let the rod fall.

“The universe exists in the present state that it has right now. It can go off in lots of different directions. My proposal is that intelligence is a process that attempts to capture future histories,” said Wissner-Gross.

The research may have applications beyond what is typically considered artificial intelligence, including language structure and social cooperation.

DeDeo said it would be interesting to use this new framework to examine Wikipedia, and research whether it, as a system, exhibited the same behaviors described in the paper.

“To me [the research] seems like a really authentic and honest attempt to wrestle with really big questions,” said DeDeo.

One potential application of the research is in developing autonomous robots, which can react to changing environments and choose their own objectives.

“I would be very interested to learn more and better understand the mechanism by which they’re achieving some impressive results, because it could potentially help our quest for artificial intelligence,” said Jeff Clune, a computer scientist at the University of Wyoming.

Clune, who creates simulations of evolution and uses natural selection to evolve artificial intelligence and robots, expressed some reservations about the new research, which he suggested could be due to a difference in jargon used in different fields.

Wissner-Gross indicated that he expected to work closely with people in many fields in the future in order to help them understand how their fields informed the new research, and how the insights might be useful in those fields.

The new research was inspired by cutting-edge developments in many other disciplines. Some cosmologists have suggested that certain fundamental constants in nature have the values they do because otherwise humans would not be able to observe the universe. Advanced computer software can now compete with the best human players in chess and the strategy-based game called Go. The researchers even drew from what is known as the cognitive niche theory, which explains how intelligence can become an ecological niche and thereby influence natural selection.

The proposal requires that a system be able to process information and predict future histories very quickly in order for it to exhibit intelligent behavior. Wissner-Gross suggested that the new findings fit well within an argument linking the origin of intelligence to natural selection and Darwinian evolution — that nothing besides the laws of nature are needed to explain intelligence.

Although Wissner-Gross suggested that he is confident in the results, he allowed that there is room for improvement, such as incorporating principles of quantum physics into the framework. Additionally, a company he founded is exploring commercial applications of the research in areas such as robotics, economics and defense.

“We basically view this as a grand unified theory of intelligence,” said Wissner-Gross. “And I know that sounds perhaps impossibly ambitious, but it really does unify so many threads across a variety of fields, ranging from cosmology to computer science, animal behavior, and ties them all together in a beautiful thermodynamic picture.”
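
As a rough illustration of the "keep as many future histories open as possible" idea described in the article, here is a toy sketch in Python. It is not Entropica and it is not the equation from the paper. It assumes a hypothetical one-dimensional corridor of 11 cells, an agent that can step left, stay, or step right, and a crude stand-in for causal entropy: a plain count of the move sequences that remain available within a fixed look-ahead. The names `WIDTH`, `MOVES`, `count_histories`, `best_move`, and `run` are all invented for this example.

```python
from functools import lru_cache

WIDTH = 11          # hypothetical corridor with cells 0..10 (an assumption)
MOVES = (-1, 0, 1)  # step left, stay put, step right


@lru_cache(maxsize=None)
def count_histories(pos, horizon):
    """Count the move sequences of length `horizon` that stay inside the corridor."""
    if horizon == 0:
        return 1
    return sum(
        count_histories(pos + m, horizon - 1)
        for m in MOVES
        if 0 <= pos + m < WIDTH
    )


def best_move(pos, horizon):
    """Choose the legal move whose destination keeps the most future histories open."""
    legal = [m for m in MOVES if 0 <= pos + m < WIDTH]
    return max(legal, key=lambda m: count_histories(pos + m, horizon))


def run(start, steps, horizon):
    """Follow the 'most open futures' rule for a number of steps and return the path."""
    path = [start]
    for _ in range(steps):
        path.append(path[-1] + best_move(path[-1], horizon))
    return path


print(run(0, 8, 6))  # → [0, 1, 2, 3, 4, 5, 5, 5, 5]
```

No goal such as "go to the middle" is ever specified, yet the agent drifts away from the wall and settles in the center, because that is where the walls prune the fewest futures. This is loosely the same flavor as the cart keeping the rod upright, though counting discrete paths is only a simplified proxy for the path entropy the researchers describe.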

Cracking Intelligence and Wisdom

The difference between intelligence and wisdom (aside from rolling up a D&D character) parallels the distinctions which have been dividing philosophy of mind from the beginning. Intelligence implies a cognitive ability in a technical and literal sense – a talent for understanding factual relations which apply to the public world. The products of intelligence are transformative, but famously amoral. Frankenstein and 2001’s HAL both embody our fear of the monstrous side of technology, of intelligence ‘run amok’ with hubris. This is a rich vein for science fiction. Beings built from the outside in – an inhuman mind from inanimate substance. Zombies, killer robots, aliens. Giant insects or weaponized planetoids. In all cases the impersonal, mechanistic side of consciousness is out of proportion and humanity is dwarfed or under-signified.

Intelligence is supposed to be impersonal and mechanistic though. Its facts and figures are not supposed to be local to human experience. The sophisticated view which developed through Western intelligence not only does not require us to value human subjectivity; it insists, to the contrary, that all human awareness is a contamination of the pristine reality of factual evidence – objects which simply are ‘as they are’ rather than merely ‘seem to be’. All human awareness, that is, except for the reasoning which progresses science itself.

Wisdom, while overlapping with intelligence as a set of cognitive talents and skills, is not as clear cut as intelligence. Wisdom does not yield the kind of public results which intelligence is intended to produce, because wisdom is not focused on public objects but on private experiences. Both intelligence and wisdom attempt to step back from the local phenomenal world to seek deeper patterns, but intelligence seeks them from indirect experiences outside of the body, while wisdom seeks within the psyche, within the library of possible personal experiences. The library of wisdom, unlike that of intelligence, is in the language of the personal. Characters and stories which work on multiple levels of figurative association. The privacy of wisdom extends to its own forms, leading to a lot of mystery for the sake of mystery which those minds on the other side of the aisle find deeply offensive. Intelligence only uses symbols as generic pointers – to literally refer to a specific quantifiable variable. Intelligence stacks symbols in sequence as language and formulas. Wisdom uses symbols as poetry and art, evocative images which work on multiple levels of awareness and understanding but not the kind of fixed understanding of intelligence. The understanding of wisdom can be open ended and elliptical, absurd, poignant, etc.

The deepest kinds of wisdom are said to be ‘timeless’. Unlike the high value that intelligence places on up to the minute information, wisdom seems to appreciate with age. Rather than being seen as increasingly irrelevant, ancient stories and turns of phrase are revered and celebrated for their pedigree. There is an almost palpable weight to the anachronistic language and images. The metaphors are somehow more potent when delivered by a long dead prophet. This favoring the dead happens with more modern quotations too. Maybe it’s because they are no longer around to put their quotation in context, or maybe it just takes a while for greatness to make itself known against the background of more ephemeral noise.

In any case, the realm of wisdom is a decidedly human realm of human experience. It is a talent for recognizing and encapsulating common sense and long life, with subtlety and significance for all people and all lives. Wisdom is about being able to appreciate our fortunes as individuals and members of society. Wisdom helps us find an objective vantage point within the history of our personal experience from which to see and evaluate the ups and downs of life passing. Through wisdom, we can see the bigger picture in our own trials and tribulations and the rise and fall of civilizations. We can see how every moment can change seeming fiction into seeming fact and back. Wisdom is subjective and mystical, but so too were many phenomena in the natural world before science. The promise of Western intelligence is to de-mystify the world, to remove subjectivity, but in addressing subjectivity itself the intellect meets its match.

Frustrated with the prospect of decomposing its source into objects, intelligence turns to a kind of inside-out subjectivity in some variety of functionalism. Subjectivity, from the Western perspective of public space as reality, is nullified. It can only be disqualified as an ‘emergent property’ or ‘illusion’ of some other deterministic process of matter or ‘information’ – a side effect somehow, of what already seems to know itself perfectly well and function competently without resorting to any fanciful aesthetic ‘feelings’ or ‘flavors’. If arithmetic or the laws of physics work automatically, then they don’t need a special show of aesthetic phenomena to lubricate their own wheels, yet, thinks the left-brained Western mind, there is simply no other possibility. Consciousness must arise as some sort of accident among colliding collections of complex computations.

This is a problem, since it only pushes dualism from the Cartesian center of tolerance into the ghettos of the untouchable. Subjective experience is now confined in science to untouchable, uncountable metaphysical aethers of simulation; epiphenomenal dead ends which had no meaningful beginning.

The Western mind cannot tolerate being put into a box by any phenomena which it cannot put into a box itself. The irony of modern physics of course, is that all of the boxes which have been piled up so far seem to indicate their origin in circularity. A microcosm of disembodied Cheshire Cat smiles…determinable indeterminisms. On the astronomical scale, suddenly the bulk of the matter and energy in the universe has been re-categorized into darkness. The alchemist caps seem to have reappeared in science, but turned inside out. Western intelligence is no longer explaining the universe which we experience directly as participants, but is devoted to pursuing an alternate universe backstage which just so happens to identify human subjectivity as the only thing in the cosmos which is not actually real.

The current battle over TED, Sheldrake and Hancock is on the front line of the war between public-facing thinkers and private-facing thinkers, between body-space visionaries and life-time visionaries. Both sides play out a reflexive antagonism – a shadow projection which extends beyond the personal. Each side hears the other in their own limited terms, and neither one is able to communicate the missing perspective of the other. The argument continues because both fail to understand the missing piece of their own perspective and their mode of thinking has devolved into an aggressive-defensive vicious circle of un-wisdom and un-intelligence.

At this point in our political and in our intellectual life, the midpoint has been skewed so far from the center (to the West in science, and to the Right in politics), that any proposal which engages other perspectives is seen as extremism. Any new information is mistaken for treasonous compromise. Whether that extremism is in the mechanistic or the animistic direction, the result is very similar.

I would love to see a study done comparing the brain activity of so called ‘militant atheists’ and ‘religious fundamentalists’, to see how really far apart they are. My scientific hypothesis is that no neuroscientist will be able to look at the fMRIs of the two camps in a double blind test and reliably tell the difference. If that were true, what would it mean about protecting science from unscientific ideas if you cannot prove the scientific validity of thoughts from a brain scan?
