
Can Effort Be Simulated?

This may seem like an odd question, but I think it is a great one to ask if you’re thinking about AI and the hard problem of consciousness.

Let’s say I want my dishwasher to feel the sense of effort that I feel when I wash dishes. How would I do it? It could make groaning noises, or seem to procrastinate by refusing to turn on for days on end, but this would be completely pointless from a practical perspective, and it would only seem like effort in my imagination. In reality, a machine can be made to perform any function it is capable of, for as long as its physical parts hold up, without any effort on anyone’s part. That’s why they are machines. That’s why we replace human labor with robot labor…because it’s not really labor at all.

It is very popular to think of human beings as a kind of machine and the brain as a kind of computer, but imagine if that were really true. You could wash dishes for your entire lifetime and do nothing else. If someone wanted a house, you could simply build it for them. Machines are useful precisely because they don’t have to try to do anything. They have no sense of effort. They don’t care what they do or don’t do.

You might say, “There’s nothing special about that. Biological organisms just evolved to have this sense of effort to model physiological limits.” Okay, but what possible survival value would that have? Under what circumstances would it serve an organism to work less than the physiological maximum it is capable of? Any consideration such as conserving energy for the winter would naturally be rolled into the maximum allowed by the body’s regulatory systems.

So, I say no. Effort cannot be simulated. Effort is not equal to energy or time. It is a feeling which is so powerful that it dictates everything that we are able to do and unable to do. Effort is a telltale sign of consciousness. If we could sleep while we do the dishes, we would, because we would not have to feel the discomfort of expending effort to do it.

Any computer, AI, or robot that would be useful to us could not possibly have a sense of its own efforts as being difficult. Once we understand how a sense of effort is truly antithetical to machine behavior, perhaps we can begin to see why consciousness in general cannot be simulated. How would an AI that has no sense of not wanting to do the dishes ever be able to truly understand which activities are pleasurable and which are painful?

The Failure of Positivism and Its Relevance Today

In evaluating 20th-century philosophy, a few names regularly crop up in most conversations. Gottlob Frege, Bertrand Russell, Ludwig Wittgenstein, many of them are Austrian or British, many are lo…

New to MSR?

It might help to start with my other writings on Quora: https://www.quora.com/profile/Craig-Weinberg

This site is more of a place for recording ideas before I forget them.

3ॐc

Does consciousness emerge from the brain?

Are Ideas Physically Manifested in the Brain?

I don’t think that ideas can be said to be physically manifested, unless we extend physics to include phenomenology. Neurons, brains, and bodies* can all be reduced to the behavior of three-dimensional structures in space. In this context, behavior is really the function of those 3D structures over time, and function is a chronological sequence (so a fourth dimension, or 3D+1D) of changes in those structures. All such changes can be described in terms of movements of structural units, typically atoms or molecules, as they are rearranged according to deterministic and random-seeming chain reactions. The existence of ‘ideas’ or consciousness within such a system is, to paraphrase William James, “an illegitimate birth in any philosophy that starts without it, and yet professes to explain all facts by continuous evolution.”

This is to say that there is no logical entailment which can explain how we get from a phenomenon which can be imagined to be composed of countless particle collisions that look like this:

to something else. This gap, known in philosophy of mind as the Explanatory Gap, and the existence of that something else in the first place (known as the Hard Problem of Consciousness) are the two insurmountable obstacles for anti-idealistic worldviews**.

What many computer scientists may not appreciate is that while the theoretical underpinnings of information science point to brains being reducible to simple arithmetic functions, this reduction cuts both ways. If we can reduce everything that a brain does to a computation which can be embodied on any material substrate, that also means that no semantic content is required, other than low-level digital logic. Just as all of the content of the internet can be routed by dumb devices which have no appreciation of images or dialogue, so too can the entire content of the brain be reproduced without any thoughts, feelings, or ideas.

Once we have used a mathematical or physical schema to encode our communication, there is no functional benefit to be gained by decoding it into any form other than computation. The computation alone (invisible, intangible, silent facts about the parts of a calculating machine in relation to each other over time, 3D+1) is all that is necessary and sufficient to execute whatever behaviors will allow an animal’s body to survive and reproduce. Once we have converted conscious experience to localized machine signals, the signals alone are enough to generate any physical effect, without signifying any non-local content. This is what Searle was getting at with the Chinese Room, and it is closely related to what is known as the symbol grounding problem.

In consideration of the above, the answer to the question is “no”. What is present in the brain can only be a-signifying material relations, not ideas. The connection between matter and ideas cannot be accessed in terms of physics or information, but only in the direct aesthetic participation which we call ‘consciousness’. In my view, it is not consciousness which is emergent from information or mechanisms, but information and mechanisms which are ‘diffracted’ or alienated from consciousness.

*not to mention windmills, computers, and rooms with people translating messages that they don’t understand from a book.

**These would include materialism, eliminativism, logical positivism, behaviorism, empiricism, verificationism, functionalism, computationalism, and emergentism.

Inflection Point

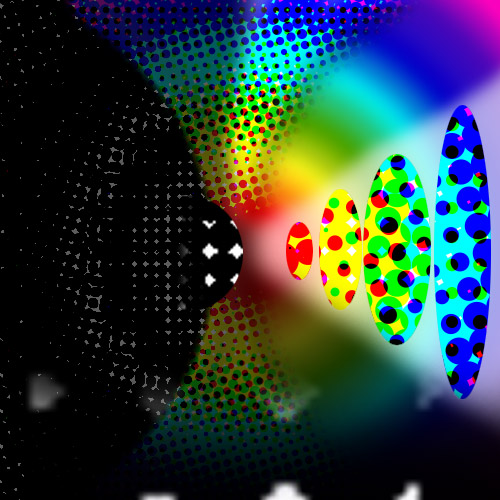

An illustration based on the finer points of multisense realism. The combination of monochrome, spectral color, multiple scales of halftone, and the nested lensing of scales suggests the primacy of sense. It’s about the relation between different features of consciousness and how they diverge rather than emerge from the totality. The totality is masked into personal and impersonal levels of description, instead of being assembled from simple parts.

Consciousness can be mindless, but Mind cannot be unconscious

The mind is the cognitive range of consciousness. Consciousness includes many more aesthetic forms than just mind.
