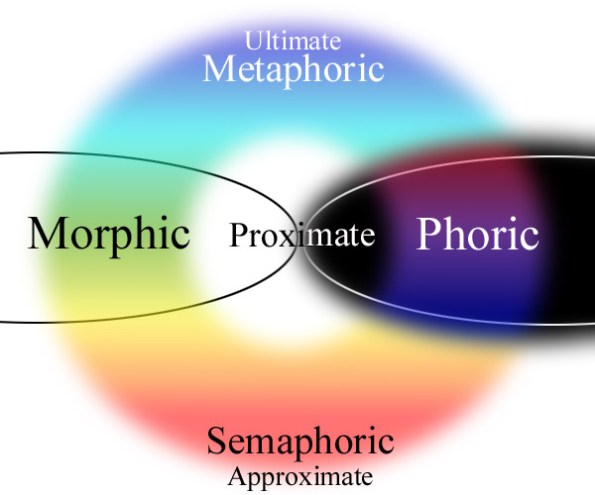

Continuum of Perceptual Access

This post is intended to bring more clarity to the philosophical view that I have named Multisense Realism. I have criticized popular contemporary views such as computationalism and physicalism because of their dependence on a primitive of information or matter that is independent of all experience. In both physicalism and computationalism, we are called upon to accept the premise that the universe is composed solely of concrete, tangible structures and/or abstract, intangible computations. Phenomena such as flavors and feelings, which are presented as neither completely tangible nor completely intangible, are dismissed as illusions or emergent properties of the more fundamental dual principles. The tangible/intangible duality, while suffering from precisely the same interaction problems as substance dualism, adds the insult of preferring a relatively new and hypothetical kind of intangibility which enjoys all of our mental capacities of logic and symbolism, but which exists independently of all mental experience. When we try to pin down our notions of what information really is, the result is inevitably a circular definition which assumes phenomena can be ‘sent’ and ‘received’ from physics alone, despite the dependence of such phenomena on a preferred frame of reference and perception. When we look at a system of mechanical operations that are deemed to cause information processing, we might ask the question “What is it that is being informed?” Is it an entity? Is there an experience or not? Are information and matter the same thing, and if so, which of them makes the other appear opposite to it? Which one makes anything ‘appear’ at all?

The answers I’ve heard and imagined seem to necessarily imply some sort of info-homunculus that we call ‘the program’ or ‘the system’ to which mental experience can either be denied or assumed in an arbitrary way. This should be a warning to us that by using such an ambiguously conscious agent to explain how and why experience exists, we are committing a grave logical fallacy. To begin with a principle that can be considered either experiential or non-experiential in order to explain experience is like beginning with ‘moisture’ to explain the existence of water. Information theory is certainly useful to us as members of a modern civilization; however, that utility does not help us with our questions about whether experience can be generated by information or information is a quality of some categories of experience. It does not help us with the question of how the tangible and intangible interact. In our human experience, programs and systems are terms arising within the world of our thinking and understanding. In the absence of such a mental experience context, it is not clear what these terms truly refer to. Without that clarity, information processing agents are allowed to exist in an unscientific fog as entities composed of an intangible pseudo-substance, but also with an unspecified capacity to control the behavior of tangible substances. The example often given to support this view is our everyday understanding of the difference between hardware and software. This distinction does not survive the test of anthropocentrism. Hardware is a concrete structure. Its behavior is defined in physical terms such as motion, location, and shape, or tendencies to change those properties. Software is an idea of how to design and manipulate those physical behaviors, and how the manipulation will result in our ability to perceive and interpret them as we intend.
There is no physical manifestation of software, and indeed, no physical device that we use for computation has any logical entailment to experience anything remotely computational about its activities, as they are presumed to be driven by force rather than meaning. Again, we are left with an implausible dualism where the tangible and intangible are bound together by vague assumptions of unconscious intelligibility rather than by scientific explanation.

Panpsychism offers a possible path to redemption for this crypto-dualistic worldview. It proposes that some degree of consciousness is pervasive in some or all things; however, the Combination Problem challenges us to explain how exactly micro-experiences on the molecular level build up to full-blown human consciousness. Constitutive panpsychism is the view that:

“facts about human and animal consciousness are not fundamental, but are grounded in/realized by/constituted of facts about more fundamental kinds of consciousness, e.g., facts about micro-level consciousness.”

Exactly how micro-phenomenal experiences are bound or fused together to form a larger, presumably richer macro-experience is a question that has been addressed by Hedda Hassel Mørch, who proposes that:

“mental combination can be construed as kind causal process culminating in a fusion, and show how this avoids the main difficulties with accounting for mental combination.”

In her presentation at the 2018 Science of Consciousness conference, Mørch described how Tononi’s Integrated Information Theory (IIT) might shed some light on why this fusion occurs. IIT offers the value Φ to quantify the degree of integration of information in a physical system such as a brain. IIT is a panpsychist model that predicts that any sufficiently integrated information system can or will attain consciousness. The advantage of IIT is that consciousness is allowed to develop regardless of any particular substrate it is instantiated through, but we should not overlook the fact that the physical states seem to be at least as important. We can’t build machines out of uncontained gas. There would need to be some sort of solidity property to persist in a way that could be written to, read from, and addressed reliably. In IIT, digital computers or other inorganic machines are thought to be incapable of hosting fully conscious experience, although some minimal awareness may be present.

“The theory vindicates some panpsychist intuitions – consciousness is an intrinsic, fundamental property, is graded, is common among biological organisms, and even some very simple systems have some. However, unlike panpsychism, IIT implies that not everything is conscious, for example group of individuals or feed forward networks. In sharp contrast with widespread functionalist beliefs, IIT implies that digital computers, even if their behavior were to be functionally equivalent to ours, and even if they were to run faithful simulations of the human brain, would experience next to nothing.” – Consciousness: Here, There but Not Everywhere

As I understand Mørch’s thesis, fusion occurs in a biological context when the number of causal relationships in the parts of a system that relate to the whole exceeds the number of causal relationships which relate to the disconnected parts.
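As a rough illustration of the comparison described above, here is a toy sketch. It is not an implementation of IIT’s Φ or of Mørch’s actual account. It simply treats a system as a set of directed cause-effect links, treats links that cross between parts as the ones that relate to the whole, and counts them against the links that stay inside a single part. The names `count_links` and `is_fused`, and both example systems, are hypothetical and introduced only for illustration.

```python
from typing import Dict, Hashable, Iterable, List, Tuple

Edge = Tuple[Hashable, Hashable]  # (cause, effect)


def count_links(edges: Iterable[Edge], part_of: Dict[Hashable, str]) -> Tuple[int, int]:
    """Return (cross_part, within_part) counts of causal links.

    Assumption: a link whose cause and effect sit in different parts is
    counted as relating to the whole; a link inside one part is counted
    as relating to the disconnected parts.
    """
    cross = 0
    within = 0
    for cause, effect in edges:
        if part_of[cause] == part_of[effect]:
            within += 1
        else:
            cross += 1
    return cross, within


def is_fused(edges: Iterable[Edge], part_of: Dict[Hashable, str]) -> bool:
    """Toy criterion: 'fusion' when whole-relating links outnumber part-local ones."""
    cross, within = count_links(edges, part_of)
    return cross > within


# Hypothetical four-node system split into two parts, "A" and "B".
part_of = {"a1": "A", "a2": "A", "b1": "B", "b2": "B"}

# Mostly cross-part links: the parts depend heavily on each other.
integrated: List[Edge] = [("a1", "b1"), ("b1", "a2"), ("a2", "b2"), ("a1", "a2")]

# Mostly part-local links: the parts largely run on their own.
modular: List[Edge] = [("a1", "a2"), ("a2", "a1"), ("b1", "b2"), ("a1", "b1")]

if __name__ == "__main__":
    print(count_links(integrated, part_of), is_fused(integrated, part_of))
    print(count_links(modular, part_of), is_fused(modular, part_of))
```

A bare count like this ignores everything that makes integration hard to measure in practice, such as the strength of each link and the choice of partition. It is meant only to make the parts-versus-whole comparison concrete.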

I think that this approach is an appropriate next step for philosophy of mind and may be useful in developing technology for AI. Information integration may be an ideal way to quantify degrees of consciousness for medical and legal purposes. It may give us ethical guidance in how synthetic and natural organisms should be treated, although I agree with some critics of IIT that the Φ value itself may be flawed. It is possible that IIT is on the right track in this instrumental sense, but that a better quantitative variable can be discovered. It is also possible that none of these approaches will help us understand what consciousness truly is, and will only confuse us further about the nature of the relation between the tangible, the intangible, and what I call the trans-tangible realm of direct perception.

What I propose here is that rather than considering a constitutive fusion of microphenomenal units into a macrophenomenal unit in which local causes and effects are consolidated into a larger locality, we should try viewing these micro and macro appearances as different orders of magnitude along a continuum of “causal lensing” or “access lensing”. Rather than physical causes of phenomenal effects, the lensing view begins with phenomenal properties as identical to existence itself. Perceptions are more like apertures which modulate access and unity between phenomenal contexts rather than mathematical processes where perceptions are manufactured by merging their isolation. To shift from a natural world of mechanical forms and forces to one of perceptual access is a serious undertaking, with far-ranging consequences that require committed attention for an extended time. Personally, it took me several years of intensive consideration and debate to complete the transition. It is a metaphysical upheaval that requires a much more objective view of both objectivity and subjectivity. Following this re-orientation, the terms ‘objective’ and ‘subjective’ themselves are suggested to be left behind, adopting instead the simpler, clearer terms such as tangible, intangible, and trans-tangible. Using this platform of phenomenal universality as the sole universal primitive, I suggest a spectrum-like continuum where ranges of phenomenal magnitude map to physical scale, qualitative intensity, and to the degree of permeability between them.

For example, on the micro/bottom scale, we would place the briefest, most disconnected sensations and impulses which can be felt, and marry them to the smallest and largest structures available in the physical universe. This connection between subatomic and cosmological scales may seem counterintuitive to our physics-bound framework, but here we can notice the aesthetic similarities between particles in a void and stars in a void. The idea here is not to suggest that the astrophysical and microphysical are identical, but that the similarity of their appearances reflects our common perceptual limitation to those largest and smallest scales of experience. These appearances may reflect a perception of objective facts, or they may be defined to some degree by the way a particular perceptual envelope propagates reports about its own limits within itself. In the case of a star or an atom, we are looking at a report about the relationship between our own anthropocentric envelope of experience and the most distant scales of experience and finding that the overlap is similarly simple. What we see as a star or an atom may be our way of illustrating that our interaction is limited to very simple sensory-motor qualities such as ‘hold-release’ which corresponds to electromagnetic and gravitational properties of ‘push-pull’. If this view were correct, we should expect that to the extent that human lifetimes have an appearance from the astro or micro perspective, that appearance would be similarly limited to a simple, ‘points in a void’ kind of description. This is not to say that stars or atoms see us as stars or atoms, but that we should expect some analogous minimization of access across any sufficiently distant frame of perception.

Toward the middle of the spectrum, where medium-sized things like vertebrate bodies exist, I would expect that this similarity is gradually replaced by an increasing dimorphism. The difference between structures and feelings reaches its apex in the center of the spectrum for any given frame of perception. In that center, I suspect that sense presentations are maximally polarized, achieving the familiar Cartesian dualism of waking consciousness as it has been conditioned by Western society. In our case, the middle/macro level presentation is typically of an ‘interior’ which is intangible interacting with a tangible ‘exterior’ world, governed by linear causality. There are many people throughout history, however, who have reported other experiences in which time, space and subjectivity are considerably altered.

While the Western view dismisses non-ordinary states of consciousness as fraud or failures of human consciousness to report reality, I suggest that the entire category of transpersonal psychology can be understood as a logical expectation for the access continuum as it approaches the top end of the spectrum. Rather than reflecting a disabled capacity to distinguish fact from fiction, I propose that fact and fiction are, in some sense, objectively inseparable. As human beings, our body’s survival is very important to us, so that phenomena relating to it directly would naturally occupy an important place in our personal experience. This should not be presumed to be the case for nature as a whole. Transpersonal experience may reflect a fairly accurate rendering of any given perceptual frame of reference which attains a sufficiently high level of sensitivity. With an access continuum model, high sensitivity corresponds to dilated apertures of perception (a la Huxley), and consequently allows more permeability across perceptual contexts, as well as permitting access to more distant scales of perceptual phenomena.

The Jungian concepts of archetypes and the collective unconscious should be considered useful intuitions here, as the recurring, cross-cultural nature of myth and dreams suggests access to phenomena which seem to blur or reveal common themes across many separate times and places. If our personal experience is dominated by a time-bound subject in a space-bound world, transpersonal experience seems to play with those boundaries in surreal ways. If personal experiences of time are measured with a clock, transpersonal time might be symbolized by Dali’s melting clocks. If our ordinary personal experience of strictly segregated facts and fictions occupies the robust center of the perceptual continuum, the higher degrees of access correspond to a dissolving of those separations and the introduction of more animated and spontaneous appearances. As the mid-spectrum ‘proximate’ range gives way to an increasingly ‘ultimate’ top range, the experience of merging of times, places, subjects, objects, facts, and fiction may not so much be a hallucination as a profound insight into the limits of any given frame of perception. To perceive in the transpersonal band is to experience the bending and breaking of the personal envelope of perception so that its own limits are revealed. Where the West sees psychological confusion, the East sees cosmic fusion. In the access continuum view, both Eastern and Western views refer to the same thing. The transpersonal opportunity is identical to the personal crisis.

This may sound like “word salad” to some, or God to others, but what I am trying to describe is a departure from both Western and Eastern metaphysical models. It seems necessary to introduce new terms to define these new concepts. To describe how causality itself changes under different scales or magnitudes of perception, I use the term causal lensing. By this I mean to say that the way things happen in nature changes according to the magnitude of “perceptual access”. With the term ‘perceptual access’, I hope to break from the Western view of phenomenal experience as illusory or emergent, as well as breaking from the Eastern view of physical realism as illusory. Both the tangible and the intangible phenomena of nature are defined here as appearances within the larger continuum of perceptual access…a continuum in which all qualitative extremes are united and divided.

In order to unite and transcend both the bottom-up and top-down causality frameworks, I draw on some concepts from special relativity. The first idea that I borrow is the notion of an absolute maximum velocity, which I suggest is a sign that light’s constancy of speed is only one symptom of the deeper role of c. Understanding ‘light speed’ as an oversimplification of how perception across multiple scales of access works, c becomes a perceptual constant instead of just a velocity. When we measure the speed of light, we may be measuring not only the distance traveled by a particle while a clock ticks, but also the latency associated with translating one scale of perception into another.

The second idea borrowed from relativity is the Lorentz transformation. In the same way that special relativity links relative velocity to time dilation and length contraction, the proposed causal lensing schema transforms causality itself along a continuum. This continuum ranges from what I want to call ultimate causes (with highest saturation of phenomenal intensity and access), to proximate causes (something like the macrophenomenal units), to ‘approximate causes’. When we perceive in terms of proximate causality, space and time are graphed as perpendicular axes and c is the massless constant linking the space axis to the time axis. When we look for light in distant frames of perception, I suggest that times and spaces break down (√c) or fuse together (c²). In this way, access to realism and richness of experience can be calibrated as degrees of access rather than particles or waves in spacetime. What we have called particles on the microphysical scale should not be conceived necessarily as microphenomenal units, but more like phenomenal fragments or disunities that anticipate integration from a higher level of perception. In other words, the ‘quantum world’ has no existence of its own, but rather supplies ingredients for a higher level, macrophenomenal sense experience. The bottom level of any given frame of perception would be characterized by these properties of anticipatory disunity or macrophenomenal pre-coherence. The middle level of perception features whole, coherent Units of experience. The top or meta level of perception features Super-Unifying themes and synchronistic, poetic causality.
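For readers who want the physics side of the analogy in front of them, the standard textbook relations of special relativity are given below. They belong to ordinary special relativity, not to the access continuum conjecture. The symbols $v$ (relative velocity between frames), $\gamma$ (Lorentz factor), $\Delta t_0$ (proper time interval) and $L_0$ (proper length) are introduced here only for reference.

```latex
\gamma = \frac{1}{\sqrt{1 - v^{2}/c^{2}}}, \qquad
\Delta t = \gamma \, \Delta t_0 \quad \text{(time dilation)}, \qquad
L = \frac{L_0}{\gamma} \quad \text{(length contraction)}
```

In these formulas c enters as a fixed scale that ties intervals of time to intervals of space. The conjecture reinterprets that role as a perceptual constant, with √c and c² used as labels for the lower and higher ranges of access rather than as quantities derived from these equations.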

To be clear, what I propose here is that perceptual access is existence. This is an updated form of Berkeley’s “Esse est percipi” (“to be is to be perceived”) doctrine, one which does not presume perception to be a verb. In the access continuum view, aesthetic phenomena precede all distinctions and boundaries, so that even the assumption of a perceiving subject is discarded. Instead of requiring a divine perceiver, a super-subject becomes an appearance arising from the relation between ultimate and proximate ranges of perception. Subjectivity and objectivity are conceived of as mutually arising qualities within the highly dimorphic mid-range of the perceptual spectrum. This spectrum model, while honoring the intuitions of Idealists such as Berkeley, is intended to provide the beginnings of a plausible perception-based cosmology, with natural support from both Western Science and Eastern Philosophy.

Some examples of the perceptual spectrum:

In the case of vision, whether we lack visual acuity or sufficient light, the experience of not being able to see well can be characterized as a presentation of disconnected features. The all-but-blind seer is forced to approximate a larger, more meaningful percept from bits and pieces, so that a proximate percept (stuff happening here and now that a living organism cares about) can be substituted. Someone who is completely blind may use a cane to touch and feel objects in their path. This does not yield a visible image but it does fill in some gaps between the approximate level of perceptual access and the proximate level. This process, I suggest, is roughly what we are seeing in the crossing over from quantum mechanics to classical mechanics. Beneath the classical limit there is approximating causality based on probabilistic computation. Beyond the classical limit, causality takes on deterministic appearances in the ‘Morphic’ externalization and will-centered appearances in the ‘Phoric’ interiorization.

In other words, I am suggesting a reinterpretation of quantum mechanics so that it is understood to be an appearance which reflects the way that a limited part of nature guesses about the nature of its own limitation.

In this least-accessible (Semaphoric, approximate) range of consciousness, awareness is so impoverished that even a single experience is fragmented into ephemeral signals which require additional perception to fully ‘exist’. What we see as the confounding nature of QM may be an accurate presentation of the conditions of mystery which are required to manifest multiple meaningful experiences in many different frames of perception. Further, this different interpretation of QM re-assigns the world of particle physics so that it no longer is presumed to be the fabric of the universe, but is instead seen as equivalent to the ‘infra-red’ end of a universal perceptual spectrum, no more or less real than waking life or a mystical vision. Beginning with a perceptual spectrum as our metaphysical and physical absolute, light becomes inseparable from sight, and invisible ranges of electromagnetism are perceptual modes which human beings have no direct access to. If this view is on the right track, seeing light as literally composed of photons would be a category error that mistakes an appearance of approximation and disunity for ‘proximated’ or formal units. It seems possible that this mistake is to blame for contradictory entities in quantum theory such as ‘particle-waves’. I am suggesting that the reality of illumination is closer to what an artist does in a painting to suggest light – that is, using lighter colors of paint to show a brightening of a part of the visual field. The expectation of photons composing beams of light in space is, on this view, a useful but misguided confusion. There may be no free-standing stream of pseudo-particles in space, but instead, there is an intrinsically perceptual relation which is defined by the modality and magnitude of its access. I suggest that the photon, as well as the electromagnetic field, are more inventions than discoveries, and may ultimately be replaced with an access modulation theory.
Special relativity was on the right track, but it didn’t go far enough as to identify light as an example of how perception defines the proximate layer of the universe through optical-visible spatiotemporalization.

Again, I understand the danger here of ‘word salad’ accusations and the over-use of neologisms, but please bear in mind that my intention here is to push the envelope of understanding to the limit, not to assert an academic certainty. This is not a theory or hypothesis; it is an informal conjecture which seems promising to me as a path for others to explore and discover. With that, let us return to the example of poor sight to illustrate the “approximate”, bottom range of the perceptual continuum. In visual terms, disconnected features such as brightness, contrast, color, and saturation should be understood to be of a wholly different order than a fully realized image. There is no ‘emergence’ in the access continuum model. Looking at this screen, we are not seeing a fusion of color pixels, but rather we are seeing through the pixel level. The fully realized visual experience (proximate level) does not reduce to fragments but has images as its irreducible units. Like the blind person using a cane, an algorithm can match invisible statistical clues about the images we see to names that have been provided, but there is no spontaneous visual experience being generated. Access to images through pixels is only possible from the higher magnitude of visual perception. From the higher level, the criticality between the low level visible pixels and images is perhaps driven by a bottom-up (Mørchian) fusion, but only because there are also top-down, center-out, and periphery-in modes of access available. Without those non-local contexts and information sources, there is no fusion. Rather than images emerging from information, they are made available through a removal of resistance to their access. There may be a hint of this in the fact that when we open our eyes in the light, one type of neurochemical activity known as ‘dark current’ ceases. In effect, sight begins with unseeing darkness.

Part 2: The Proximate Range of the Access Continuum

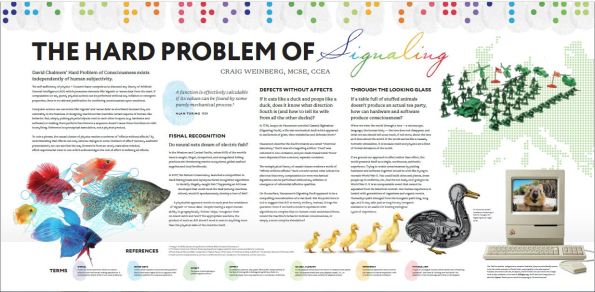

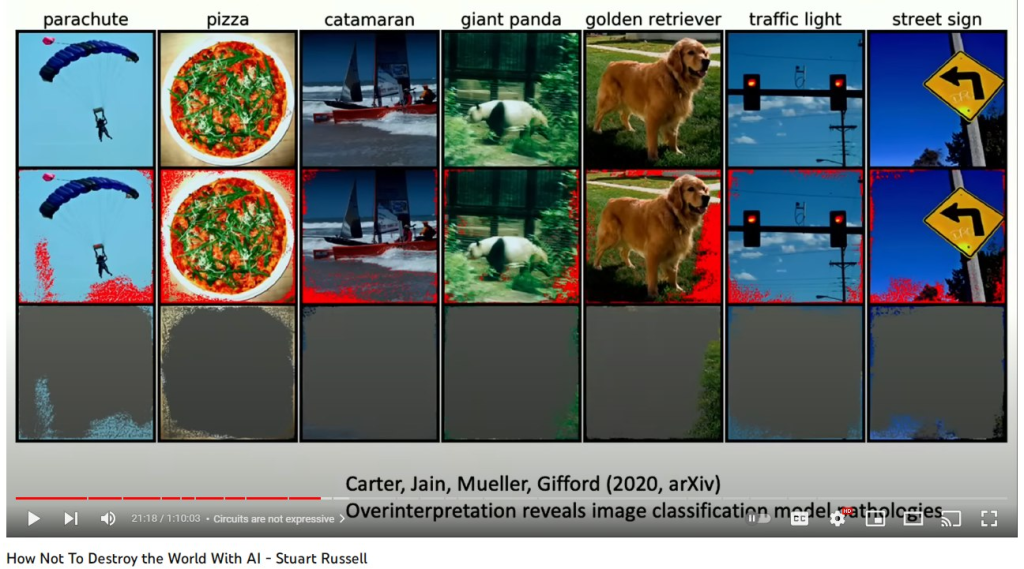

At the risk of injecting even more abstruse content (why stop now?), I want to discuss the tripartite spectrum model (approximate, proximate, and ultimate) and the operators √c, c, and c²*. In those previous articles, I offered a way of thinking about causality in which binary themes such as position|momentum, and contextuality|entanglement on the quantum level may be symptoms of perceptual limitation rather than legitimate features of a microphysical world. The first part of this article introduces √c as the perceptual constant on the approximate (low level) of the spectrum. I suggest that while photons, which would be the √c level fragments of universal visibility, require additional information to provide image-like pattern recognition, the actual perception of the image gestalt seems to be an irreducibly c (proximate, mid-level) phenomenon. By this, I mean that judging from the disparity between natural image perception and artificial image recognition, as revealed by adversarial images that are nearly imperceptible to humans, we cannot assume a parsimonious emergence of images from computed statistics. There seems to be no mechanical entailment for the information relating bits of information to one another that would level up to an aesthetically unified visible image. This is part of what I try to point out in my TSC 2018 presentation, The Hard Problem of Signaling.

Because different ranges of the perceptual spectrum are levels of access rather than states of a constitutive panpsychism, there is no reason to be afraid of Dualism as a legitimate underlying theme for the middle range. With the understanding that the middle range is only the most robust type of perceptual access and not an assertion of naive realism, we are free to redeem some aspects of the Cartesian intuition. The duality seen by Descartes, Galileo, and Locke should not be dismissed as a naive misunderstanding from a pre-scientific era, but seen as the literal ‘common-sense’ scope of our anthropic frame of perception. This naive scope, while unfashionable after the 19th century, is no less real than the competing ranges of sense. Just because we are no longer impressed by the appearance of res cogitans and res extensa does not mean that they are not impressive. Thinking about a cogitans-like and extensa-like duality as diametrically filtered versions of a ‘res aesthetica’ continuum works for me. The fact that we can detect phenomena that defy this duality does not make the duality false, it only means that duality isn’t the whole story. Because mid-level perception has a sample rate that is slower than the bottom range, we have been seduced into privileging that bottom range as more real. This to me is not a scientific conclusion, but a sentimental fascination with transcending the limits of our direct experience. It is exciting to think that the universe we see is ‘really’ composed of exotic Planck scale phenomena, but it makes more sense in my view to see the different scales of perception as parallel modes of access. Because time itself is being created and lensed within every scale of perception, it would be more scientific to avoid assigning a preferred frame to the bottom scale. The Access Continuum model restores some features of Dualism to what seems to me to be its proper place: as a simple and sensible map of the typical waking experience.
A sober, sane, adult human being in the Western conditioned mindset experiences nature as a set of immaterial thoughts and feelings inside a world of bodies in motion. When we say that appearances of Dualism are illusion, we impose an unscientific prejudice against our own native epistemology. We are so anxious to leave the pre-scientific world behind that we would cheat at our own game. To chase the dream of perfect control and knowledge, we have relegated ourselves to a causally irrelevant epiphenomenon.

To sum up, so far in this view, I have proposed:

- a universe of intrinsically perceptual phenomena in which some frames of perception are more localized, that is, more spatially, temporally, and perceptually impermeable, than others.

- Those frames of perception which are more isolated are more aesthetically impoverished so that in the most impermeable modes, realism itself is cleaved into unreal conjugate pairs.

- This unreality of disunited probabilities is what we see in poor perceptual conditions and in quantum theory. I call these pairs semaphores, and the degree of perceptual magnitude they embody I call the semaphoric or approximate range of the spectrum.

- The distance between semaphores is proposed to be characterized by uncertainty and incompleteness. In a semaphoric frame of visible perception, possibilities of pixels and possible connections between them do not appear as images, but to a seer of images, they hint at the location of an image which can be accessed.

- This idea of sensitivity and presentation as doors of experience rather than sense data to be fused into a phenomenal illusion is the most important piece of the whole model. I think that it provides a much-needed bridge between relativity, quantum mechanics, and the entire canon of Western and Eastern philosophy.

- The distinction between reality and illusion, or sanity and insanity is itself only relevant and available within a particular (proximate) range of awareness. In the approximate and ultimate frames of perception, such distinctions may not be appropriate. Reality is not subjective or relative, but it is limited to the mid-range scope of the total continuum of access. All perceptions are ultimately ‘real’ in the top level, trans-local sense and ‘illusion’ in the approximate, pre-local sense.

- It is in the proximate, middle range of perception where the vertical continuum of access stretches out horizontally so that perception is lensed into a duality between mechanical-tangible-object realism and phenomenal-intangible-subject realism. It is through the lensing that the extreme vantage points perceive each other as unreal, naive, or insane. Whether we are born to personally identify with the realism of the tangible or intangible seems to also hang in the balance between pre-determined fate and voluntary participation. Choosing our existential anchoring is like confronting the ‘blue dress’ or ‘duck-rabbit’ ambiguous image. Once we attach to the sense of a particular orientation, the competing orientation becomes nonsense.

Part 3: The Ultimate Range of the Access Continuum

Once the reader feels that they have a good grasp of the above ideas of quantum and classical mechanics as approximate and proximate ranges of a universal perceptual continuum, this next section can be a guide to the other half of the conjecture. I say it can be a guide because I suspect that it is up to the reader to collaborate directly with the process. Unlike a mathematical proof, understanding of the upper half of the continuum is not confined to the intellect. For those who are anchored strongly in our inherited worldviews, the ideas presented here will be received as an attack on science or religion. In my view, I am not here to convince anyone or prove anything, I am here to share a ‘big picture’ understanding that may only be possible to glimpse for some people at some times. For those who cannot or will not be able to access this understanding at this time, I apologize sincerely. As someone who grew up with the consensus scientific view as a given fact, I understand that this writing and the writer appear either ridiculously ignorant or insane. I would try to explain that this appearance too is actually supportive of the perceptual lensing model that I’m laying out, but this would only add to feelings of distrust and anger. For those who have the patience and the interest, we can proceed to the final part of the access continuum conjecture.

I have so far described the bottom end of the access continuum as being characterized by disconnected fragments and probabilistic guessing, and the middle range as a dualistic juxtaposition of morphic forms and ‘phoric’ experiences. In the higher range of the continuum, perceptual apertures are opened to the presence of supersaturated aesthetics which transcend and transform the ordinary. Phenomena in this range seem to freely pass across the subject-object barrier. If c is the perceptual constant in which public space and private time are diametrically opposed, then the transpersonal constant which corresponds to the fusion of multiple places and times can be thought of as c². We can construct physical clocks out of objects, but these actually only give us samples of how objects change in public space. The sense of time must be inferred by our reasoning so that a dimension of linear time is imagined as connecting those public changes. This may seem solipsistic – that I am suggesting that time isn’t objectively real. This would be true if we assumed, as Berkeley did, that perception necessarily implies a perceiver. Because the view I’m proposing assumes that perception is absolute, the association of time with privacy and space with publicity does not threaten realism. Think of it like depth perception. In one sense we see a fusion of two separate two-dimensional images. In another sense, we use a single binocular set of optical sensors to give us access to three-dimensional vision. Applied to time, we perceive an exteriorized world which is relatively static and we perceive an interiorized world-less-ness in which all remembered experiences are collected. It is by attaching our personal sense of narrative causality to the snapshots of experience that we can access publicly that a sense of public time is accessed. In the high level range of the continuum, time can progress in circular or ambiguous ways against a backdrop of eternity rather than the recent past.
In this super-proximate apprehension of nature, archetypal themes from the ancient past or alien future can coexist. Either of these can take on extraordinarily benevolent or terrifying qualities.

Like it or not, no description of the universe can possibly be considered complete if it denies the appearance of surrealities. Whether it is chemically induced or natural, the human experience has always included features which we call mystical, psychotic, paranormal, or religious. While we dream, we typically do not suspect that we are in a dreamed world until we awake into another experience which may or may not also be a dream. It is a difficult task to fairly consider these types of phenomena as they are politically charged in a way which is both powerful and invisible to us. Like the fish who spends its life swimming in a nameless plenum, it is only those who jump or are thrown out of it who can perceive the thing we call water. Sanity cannot be understood without having access to an extra-normal perspective where its surfaces are exposed. If a lack of information is the bridge between the approximate and the proximate ranges of the access continuum, then transcendental experience is the bridge between the proximate and the ultimate range of the continuum. The highest magnitudes of perception break the fourth wall, and in an involuted/Ouroboran way, provide access to the surfaces of our own access capacities.

Going back to the previous example of vision, the ultimate range of perception can be added to the list:

- √c – Feeling your way around in a dark room where a few features are visible.

- c – Seeing three-dimensional forms in a well lit, real world.

- c² – Intuiting that rays, reflections, and rainbows reveal unseen facts about light.

It is important to get that the “²” symbolizes a meta- relation rather than a quantity (although the quantitative value may be useful as well). The idea is that seeing a rainbow is “visibility squared” because it is a visible presence which gives access to deeper levels of appreciating and understanding visibility. Seeing light as spectral, translucent images, bright reflections, shining or glowing radiance, is a category of sight that gives insight into sight. That self-transcending recursiveness is what is meant by c²: In the case of seeing, visible access to the nature of visibility. If we look carefully, every channel of perception includes its own self-transcendent clues. Where the camera betrays itself as a lens flare, the cable television broadcast shows its underpinnings as freezing and pixellating. Our altered states of consciousness similarly tell us personally about what it is like for consciousness to transcend personhood. This is how nature bootstraps itself, encoding keys to decode itself in every appearance.

Other sense modalities follow the same pattern as sight. The more extreme our experiences of hearing, the more we can understand about how sound and ears work. It is a curious evolutionary maladaptation that rather than having the sense organ protect itself from excessive sensation, it remains vulnerable to permanent damage. It would be strange to have a computer that would run a program that simulates something so intensely that it permanently damages its own capacity to simulate. What would be the evolutionary advantage of a map which causes deafness and blindness? This question is another example of why it makes sense to understand perception as a direct method of access rather than a side effect of information processing. We are not a program, we are an i/o port. What we call consciousness is a collection of perceptions under an umbrella of perception that is all but imperceptible to us normally. Seeing our conscious experience from the access continuum perspective means defining ourselves on three different levels at once – as a c² partition of experience within an eternal and absolute experience, as a c level ghost in a biochemical machine, and as a √c level emergence from subconscious computation:

- √c – (Semaphoric-Approximate) – Probabilistic Pre-causality

- c – (Phoric|Morphic-Proximate) – Dualistic Free Will and Classical Causality

- c² – (Metaphoric-Ultimate) – Idealistic or Theistic Post-Causality

Notice that the approximate and ultimate ranges both share a sense of uncertainty. However, where low level awareness seeks information about the immediate environment to piece together, high level awareness allows itself to be informed by what is beyond its directly experienced environments. Between the pre-causal level of recombinatory randomness and the supernatural level of synchronistic post-causality is the dualistic level, where personal will struggles against impersonal and social forces. From this Phoric perspective, the metaphoric super-will seems superstitious and the semaphoric un-will seems recklessly apathetic. This is another example of how perceptual lensing defines nature. From a more objective and scientific perspective, all of these appearances are equally real in their own frame of reference and equally unreal from outside of that context.

Just as a high volume of sound reveals the limits of the ear, and the brightness of light exposes the limits of the eye, the limits of the human psyche at any given phase of development are discovered through psychologically intense experiences. A level of stimulation that is safe for an adult may not be tolerable for a child or baby. Alternatively, it could be true that some experiences which we could access in the early stages of our life would be too disruptive to integrate into our worldview as adults. Perhaps as we mature collectively as a species, we are acquiring more tolerance and sensitivity to the increased level of access that is becoming available to us. We should understand the dangers as well as the benefits that come with an increasingly porous frame of perception, both from access to the “supernatural” metaphoric and the “unnatural” semaphoric ranges of the continuum. Increased tolerance means that fearful reactions to both can be softened so that what was supernatural can become merely surreal and what was unnatural can be accepted as non-repulsively uncanny. Whether it is a super-mind without a physical body or a super-machine with a simulated mind, we can begin to see both as points along the universal perceptual continuum.

Craig Weinberg, Tucson 4/7/2018

Latest revision 4/18/2018

*Special Diffractivity: c², c, and √c, Multisense Diagram w/ Causality, MSR Schema 3.3, Three-Phase Model of Will

The Hard Problem of Signaling

Download:

PDF – The Hard Problem of Signaling TSC2018

If it eats like a duck and poops like a duck, does it know what direction to fly in the Winter? In 1739, Jacques de Vaucanson unveiled Canard Digérateur (Digesting Duck), a life-size mechanical duck which appeared to eat kernels of grain, then metabolize and defecate them.³ Vaucanson describes the duck’s innards as a small “chemical laboratory.” But it was a hoax: Food was collected in one container, and pre-made breadcrumb ‘feces’ were dispensed from a second, separate container. On the surface, Vaucanson’s Digesting Duck appeared to be a compelling reconstruction of a real duck. The analogy to AGI here is not to suggest it is possible that the appearance of an intelligent machine is a mere trick, but that the issue of artifice may play a much more crucial role in defining the phenomenon of subjectivity than it will appear to in observing the biological objects associated with our consciousness in particular. Consciousness itself, as the ultimate source of authenticity, may have no substitute.

If a doll can be made to shed tears without feeling sad, there is no reason to rule out the possibility of constructing an unfeeling machine which can output enough human-like behaviors to pass an arbitrarily sophisticated Turing Test. A test itself is a method of objectifying and making tangible some question that we have. Can we really expect the most intangible and subjective aspects of consciousness to render themselves tangible using methods designed for objectivity? When we view the world through a lens (a microscope, language, the human body), the lens does not disappear, and what we see should tell us as much, if not more, about the lens and the seeing as it does about the world. If math and physics reveal to us a world in which we don’t really exist, and what does exist are skeletal simulating ephemera, it may be because it is the nature of math and physics to simulate and ephemeralize. The very act of reduction imposed intentionally by quantifying approaches may increasingly feed back on its own image the further we get from our native scope of direct perception. In creating intelligence simulation machines we are investing in the most distanced and generic surface appearances of nature that we can access and using them to replace our most intimate and proprietary depths. An impressive undertaking, to be sure, but we should be vigilant about letting our expectations and assumptions blind us. Not overlooking the looking glass means paying attention in our methods to which perceptual capacities we are extending and which we are ignoring. Creating machines that walk like a duck and quack like a duck may be enough to fool even other ducks, but that doesn’t mean that the most essential aspects of a duck are walking and quacking. It may be the case that subjective consciousness cannot be engineered from the outside-in, so that putting hardware and software together to create a person would be a bit like trying to recreate World War II with uniforms and actors. 
A person, like a historical event, may only arise in a single, unrepeatable historical context. Our human experience carries with it a history of generations of organisms and organic events, not just as biological recapitulations, but as a continuous enrichment of sensory affect and participation. Humanity’s path diverged from the inorganic path long, long ago, and it may take just as long for any inorganic substance to be usable to host the types of experience available to us, if ever. The human qualities of consciousness may not develop in any context other than that of directly experiencing the life of a human body in a human society.

(QUOKKA)

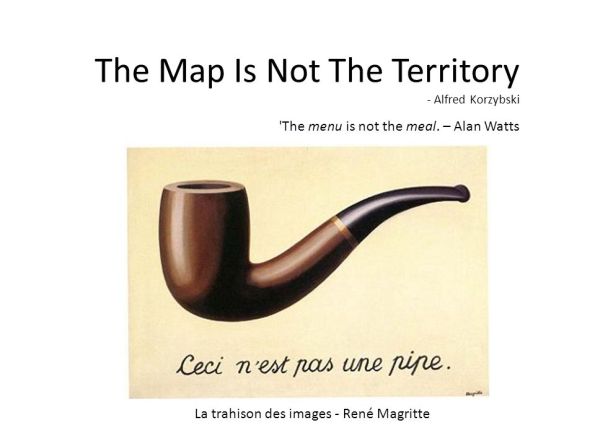

Information does not physically exist

Alfred Korzybski famously said “the map is not the territory”. To the extent that this is true, it should be understood to reveal that “information is not physics”. If there is a mapping function, there is no reason to consider it part of physics, and in fact that convention comes from an assumption of physicalism rather than a discovery of physical maps. There is no valid hypothesis of a physical mechanism for one elemental phenomenon or event to begin to signify another as a “map”. Physical phenomena include ‘formations’ but there is nothing physical which could or should transform them ‘in’ to anything other than different formations.

A bit or elementary unit of information has been defined as ‘a difference that makes a difference’. While physical phenomena seem *to us* to make a difference, it would be anthropomorphizing to presume that they are different or make a difference to each other. Difference and making a difference seem to depend on some capacity for detection, discernment, comparison, and evaluation. These seem to be features of conscious sense and sense making rather than physical cause and effect. The more complete context of the quote about a difference which makes a difference has to do with neural pathways and an implicit readiness to be triggered.

In Bateson’s paper, he says “In fact, what we mean by information—the elementary unit of information—is a difference which makes a difference, and it is able to make a difference because the neural pathways along which it travels and is continually transformed are themselves provided with energy. The pathways are ready to be triggered. We may even say that the question is already implicit in them.” In my view this ‘readiness’ is a projection of non-physical properties of sense and sense making onto physical structures and functions. If there are implicit ‘questions’ on the neural level, I suggest that they cannot be ‘in them’ physically, and the ‘interiority’ of the nervous system or other information processors is figurative rather than literal.

My working hypothesis is that information is produced by sense-making, which in turn is dependent upon more elemental capacities for sense experience. Our human experience is a complex hybrid of sensations which seem to us to be embodied through biochemistry and sense-making experiences which seem to map intangible perceptions outside of those tangible biochemical mechanisms. The gap between the biochemical sensor territories and the intangible maps we call sensations is a miniaturized view of the same gap that exists at the body-mind level.

Tangibility itself may not be an ontological fact, but rather a property that emerges from the nesting of sense experience. There may be no physical territory or abstract maps, only sense-making experiences of sense experiences. There may be a common factor which links concrete territories and abstract maps, however. The common factor cannot be limited to the concrete/abstract dichotomy, but it must be able to generate those qualities which appear dichotomous in that way. To make this common factor universal rather than personal, qualia or sense experience could be considered an absolute ground of being. George Berkeley said “Esse est percipi (To be is to be perceived)”, implying that perception is the fundamental fabric of existence. Berkeley’s idealism conceived of God as the ultimate perceiver whose perceptions comprise all being, however it may be that the perceiver-perceived dichotomy is itself a qualitative distinction which relies on an absolute foundation of ‘sense’ that can be called ‘pansense’ or ‘universal qualia’.

In personal experience, the appearance of qualities is known by the philosophical term ‘qualia’ but can also be understood as received sensations, perceptions, feelings, thoughts, awareness and consciousness. Consciousness can be understood as ‘the awareness of awareness’, while awareness can be ‘the perception of perception’. Typically we experience the perceiver-perceived dichotomy, however practitioners of advanced meditation techniques and experiencers of mystical states of consciousness report a quality of perceiverlessness which defies our expectation of perceiver-hood as a defining or even necessary element of perception. This could be a clue that transpersonal awareness transcends distinction itself, providing a universality which is at once unifying, diversifying, and re-unifying. Under the idea of pansense, God could either exist or not exist, or both, but God’s existence would either have to be identical with or subordinate to pansense. God cannot be unconscious and even God cannot create his own consciousness.

It could be thought that making the category of perception absolute makes it just as meaningless as calling it physical, however the term ‘perception’ has a meaning even in an absolute sense in that it positively asserts the presence of experience, whereas the term ‘physical’ is more generic and meaningless. Physical could be rehabilitated as a term which refers to tangible geometric structures encountered directly or indirectly during waking consciousness. Intangible forces and fields should be understood to be abstract maps of metaphysical influences on physical appearances. What we see as biology, chemistry, and physics may in fact be part of a map in which a psychological sense experience makes sense of other sense experiences by progressively truncating their associated microphenomenal content.

Information is associated with Entropy, but entropy ultimately isn’t purely physical either. The association between information and entropy is metaphorical rather than literal. The term ‘entropy’ is used in many different contexts with varying degrees of rigor. The connection between information entropy and thermodynamic entropy comes from statistical mechanics. Similar statistical mechanical formulas can be applied to both the probability of physical microstates (Boltzmann, Gibbs) and the probability of ‘messages’ (Shannon), however probability derives from our conscious desire to count and predict, not from that which is being counted and predicted.

“‘Gain in entropy always means loss of information, and nothing more’. To be more concrete, in the discrete case using base two logarithms, the reduced Gibbs entropy is equal to the minimum number of yes–no questions needed to be answered in order to fully specify the microstate, given that we know the macrostate.” – Wikipedia
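To make the ‘yes–no questions’ reading concrete, here is a minimal Python sketch of the Shannon formula in base two. The function name and the two example distributions are my own illustrative assumptions, not anything taken from the quoted sources. For a set of equally likely alternatives the result is simply the number of binary questions needed to single one out; for unequal probabilities it is the lower bound on the average number of questions.

```python
import math

def shannon_entropy_bits(probabilities):
    """Shannon entropy H = -sum(p * log2(p)), measured in bits.

    Outcomes with probability 0 are skipped, since they contribute nothing.
    """
    return -sum(p * math.log2(p) for p in probabilities if p > 0)

# Hypothetical macrostate compatible with 8 equally likely microstates:
# three yes-no questions are enough to pin down the microstate.
uniform = [1 / 8] * 8
print(shannon_entropy_bits(uniform))  # 3.0

# Hypothetical skewed distribution: fewer questions are needed on average,
# because the most likely alternative can be asked about first.
skewed = [0.5, 0.25, 0.125, 0.125]
print(shannon_entropy_bits(skewed))  # 1.75
```

Notice that the probabilities have to be supplied by whoever is doing the counting. The function itself says nothing about where they come from.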

Information can be considered negentropy also:

“Shannon considers the uncertainty in the message at its source, whereas Brillouin considers it at the destination” – physics.stackexchange.com

Thermodynamic entropy can be surprising in the sense that it becomes more difficult to predict the microstate of any individual particle, but unsurprising in the sense that the overall appearance of equilibrium is both a predictable, unsurprising conclusion and it is an appearance which implies the loss of potential to generate novelty or surprise. Also, surprise is not a physical condition.

Heat death is a cosmological end game scenario which is maximally entropic in thermodynamic terms but lacks any potential for novelty or surprise. If information is surprise, then high information would correlate to high thermodynamic negentropy. The Big Bang is a cosmological creation scenario which follows from a state of minimal entropy in which novelty and surprise are also lacking until the Big Bang occurs. If information is surprise, then low information would correlate to high thermodynamic negentropy.

The qualification of ‘physical’ has evolved and perhaps dissolved to a point where it threatens to lose all meaning. In the absence of a positive assertion of tangible ‘stuff’ which does not take tangibility itself for granted, the modern sense of physical has largely blurred the difference between the abstract and concrete, mathematical theory and phenomenal effects, and overlooks the significance of that blurring. Considering physical a category of perceptions gives meaning to both categories in that nature is conceived as being intrinsically experiential with physical experiences being those in which the participatory element is masked or alienated by a qualitative perceiver-subject/perceived-object sense of distinction. The physical is perceived by the subject which perceives itself to possess a participatory subjectivity that the object lacks.

Information depends on a capacity to create (write) and detect (read) contrasts between higher and lower entropy. In that sense it is meta-entropic and either the high or low entropy state can be foregrounded as signal or backgrounded as noise. The absence of both signal and noise on one level can also be information, and thus a signal, on another level. What constitutes a signal in the most direct frame of reference is defined by the meta-signifying capacity of “sense” to deliver sense-experience. If there is no sense experience, there is nothing to signify or make-sense-of. If there is no sense-making experience, then there is nothing to do with the sense of contrasting qualities to make them informative.

The principle of causal closure in physics would, if true, prevent any sort of ‘input’ or receptivity. Physical activity reduces to chains of causality which are defined by spatiotemporal succession. A physical effect differs from a physical cause only in that the cause precedes the effect. Physical causality therefore is a succession of effects or outputs acting on each other, so that any sense of inputs or affect onto physics would be an anthropomorphic projection.

The lack of acknowledgement of input/affect as a fundamental requirement for natural phenomena is an oversight that may arise from a consensus of psychological bias toward stereotypically ‘masculine’ modes of analysis and away from ‘feminine’ modes of empathy. Ideas such as Imprinted Brain Theory, Autistic-Psychotic spectrum, and Empathizing-Systemizing theory provide a starting point for inquiries into the role that overrepresentation of masculine perspectives in math, physics, and engineering plays in the development of formal theory and informal political influence in the academic adoption of theories.

Criticisms? Support? Join the debate on Kialo.

Computation as Anti-Holos

Here is a technical sketch of how all of nature could arise from a foundation which is ‘aesthetic’ rather than physical or informational. As I conceive it, the key difference between the aesthetic and the physical or informational (both anesthetic) is that an aesthetic phenomenon is intrinsically and irreducibly experiential. This is a semi-neologistic use of the term aesthetic, used only to designate the presence of phenomena which are not only detected but are identical to detection. A dream is uncontroversially aesthetic in this sense, however, because our waking experience is also predicated entirely upon sensory, perceptual, and cognitive conditioning, we can never personally encounter any phenomenon which is not aesthetic. Aesthetics here is being used in a universal way and should not be conflated with the common usage of the term in philosophy or art, since that usage is specific to human psychology and relates primarily to beauty. There is a connection between the special case of human aesthetic sense and the general sense of aesthetic used here, but that’s a topic for another time. For now, back to the notion of the ground of being as a universal phenomenon which is aesthetic rather than anesthetic-mechanical (physics or computation).

I have described this aesthetic foundation with various names including Primordial Identity Pansensitivity or Pansense. Some conceive of something like it called Nondual Fundamental Awareness. For this post I’ll call it Holos: The absolute totality of all sensation, perception, awareness, and consciousness in which even distinctions such as object and subject are transcended.

I propose that our universe is a product of a method by which Holos (which is sense beneath the emergent dichotomy of sensor-sensed) is perpetually modulating its own sensitivity into novel and contrasting aspects. In this way Holos can be understood as a universal spectrum of perceivability which nests or masks itself into sub-spectra, such as visibility, audibility, tangibility, emotion, cogitation, etc., as well as into quantifiable metrics of magnitude.

The masking effect of sense modulation is, in this hypothesis, the underlying phenomenon which has been measured as entropy. Thermodynamic entropy and information entropy alike are not possible without a sensory capacity for discernment between qualities of order/certainty/completeness (reflecting holos/wholeness) and the absence of those qualities (reflecting the masking of holos). Entropy can be understood as the masking of perceptual completeness within any given instance of perception (including measurement perceptions). Because entropy is the masking of the completeness of holos, it is a division which masks division. Think of how the borders of our visual field present an evanescent, invisible or contrast-less boundary to visibility of visual boundaries and contrasts. Because holos unites sense, entropy divides sense, including the sense of division, resulting in the stereotypical features of entropy – equilibrium or near equilibrium of insignificant fluctuations, uncertainty, morphological decay to generic forms and recursive functions, etc. Entropy can be understood as the universal sense of insensitivity. The idea of nothingness refers to an impossible state of permanent unperceivability, however just as absolute darkness is not the same as invisibility, even descriptors of perceptual absence such as stillness, silence, and vacuum are contingent upon a comparison with their opposites. Nothingness is still a concept within consciousness rather than a thing which can actually exist on its own.

Taking this idea further, it is proposed that the division of sense via entropy-insensitivity has a kind of dual effect. Where holos is suppressed, a black-hole like event-horizon of hyper-perceivability is also present. There is a conservation principle by which entropic masking must also residuate a hypertrophied entity-hood of sense experience: A sign, or semaphore, aka unit of information/negentropy.

In dialectic terms, Holos/sense is the universal, absolute thesis of unity, which contains its own antithesis of entropy-negentropy. The absolute essence of the negentropy-entropy dialectic would be expressed in aesthetic duals such as particle-void, foreground-background, signal-noise. The aesthetic-anesthetic dual amplifies the object-like qualities of the foregrounded sensation such that it is supersaturated with temporary super-completeness, making it a potential ‘signal’ or ‘sign’…a surface of condensed-but-collapsed semantics or ‘phoria’ into ‘semaphoria’, aka, syntactic elements. I call the progressive formalizing of unified holos toward graphic units ‘diffractivity’. The result of diffractivity is that the holos implements a graphic-morphic appearance protocol within itself, which we call space-time, and which is used to anchor and guide the interaction of the entropic, exterior of experience. The interior of complex experiences are guided by the opposite, transformal sense of ‘hierarchy-by-significance’. Significance is another common term which I am partially hijacking for use in a more specific way as the saturation of aesthetic qualities, and the persistence of any given experience within a multitude of other experiences.

To recap, the conjecture is that all of nature arises by, through, for, and within an aesthetic foundation named ‘holos’. Through a redistribution of its own sensitivity, holos masks its unity property into self-masking/unmasking ‘units’ which we call ‘experiences’ or ‘events’. The ability to recall multiple experiences, to imagine new experiences, and to understand the relation between them is what we call ‘time’.

Within more complex experiences, the entropic property which divides experience into temporal sections can reunite with other, parallel complex experiences in a ‘back to back’ topology. In this mode of tactile disconnection, the potential for re-connection of the disconnected ends of experiences is re-presented as what we call ‘objects in space’ or ‘distance between points’, aka geometry. By marrying the graphed, geometric formality of entropy with the algebraic, re-collecting formality of sequence, we arrive at algorithm or computation. Computation is not a ‘real phenomenon’ but a projection of the sense of quantity (an aesthetic sense just like any other) onto a distanced ‘object’ of perception.

Physics in this view is understood to be the inverted reflection and echo of experience which has been ‘spaced and timed’. Computation is the inversion of physics – the anesthetic void as addressable container of anesthetic function-objects. Physics makes holos into a hologram, and computation inverts the natural relation into an artificial, ‘information theoretic’, hypergraphic anti-holos. In the anti-holos perspective, nature is uprooted and decomposed into dustless digital dust. Direct experience is seen as ‘simulation’ or ‘emergent’, non-essential properties which only ‘seem to exist’, while the invisible, intangible world of quantum-theoretical mechanisms and energy rich vacuums are elevated to the status of noumena.

Computationalists Repent! The apocalypse is nigh! 🙂

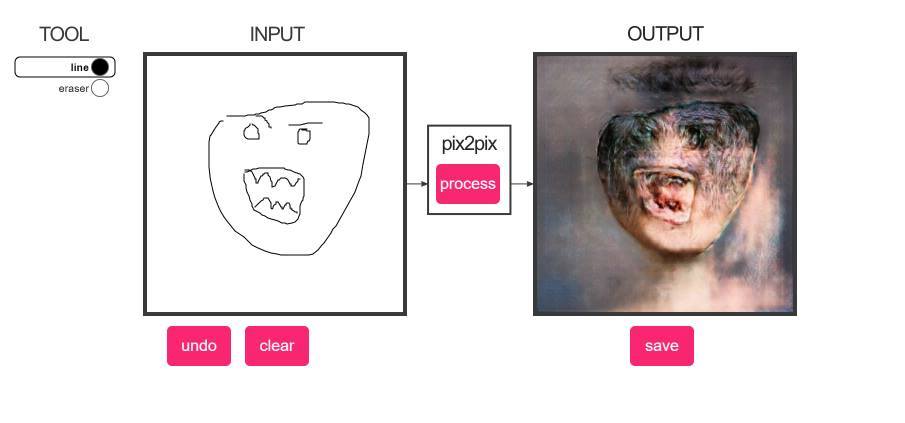

AI is Still Inside Out

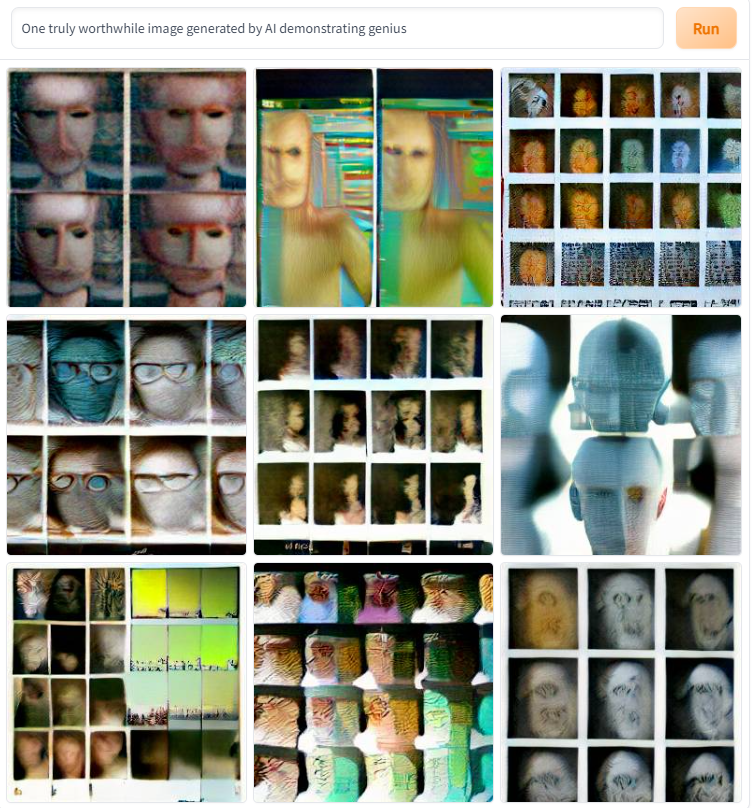

Turn your doodles into madness.

I think this is a good example of how AI is ‘inside out’. It does not produce top-down perception and sensations in its own frame of awareness, but rather it is a blind seeking of our top-down perception from a completely alien, unconscious perspective.

The result is not like an infant’s consciousness learning about the world from the inside out and becoming more intelligent, rather it is the opposite. The product is artificial noise woven together from the outside by brute force computation until we can almost mistake its chaotic, mindless, emotionless products for our own reflected awareness.

This particular program appears designed to make patterns that look like monsters to us, but that isn’t why I’m saying it’s an example of AI being inside out. The point is that this program exposes image processing as a blind process of arithmetic simulation rather than any kind of seeing. The result is a graphic simulacrum…a copy with no original which, if we’re not careful, can eventually tease us into accepting it as a genuine artifact of machine experience.

See also: https://multisenserealism.com/2015/11/18/ai-is-inside-out/

Time for an update (6/29/22) to further demonstrate the point:

Added 5/3/2023:

Stochastic filtering is not how sense actually works, but it can seem like how sense works if you’re using stochastic filtering to model sense.

How Not To Destroy the World With AI – Stuart Russell

Three-Phase Model of Will

Within the Multisense Realism (MSR) model, all of nature is conceived of as a continuum of experiential or aesthetic phenomena. This ‘spectrum of perceivability’ can be divided, like the visible light spectrum, into two, three, four, or millions of qualitative hues, each with its own particular properties, and each of which contributes to the overall sense of the spectrum.

For this post, I’ll focus on a three-level view of the spectrum: Sub-personal, Personal, and Transpersonal. Use of the MSR neologisms ‘Semaphoric, Phoric, and Metaphoric’ may be annoying to some readers, but I think that it adds some important connections and properly places the spectrum of perceivability in a cosmological context rather than in an anthropocentric or biocentric one.

In my view, nature is composed of experiences, and the primary difference between the experiences of biological organisms (which appear as synonymous with cellular-organic bodies to each other) and experiences which appear to us as inorganic chemistry, atoms, planets, stars, etc is the scale of time and space which are involved and the effect of that scale difference on what I call perceptual lensing or eigenmorphism.

In other words, I am saying that the universe is made of experiences-within-experiences, and that the relation of any given experience to the totality of experience is a defining feature of the properties of the universe which appear most real or significant. If you are an animal, you have certain kinds of experiences in which other animals are perceived as members of one’s own family, or as friends, pets, food, or pests. These categories are normally rather firm, and we do not want to eat our friends or pets, yet we understand that what constitutes a pet or pest in some cultures may be desirable as food in others. We understand that the palate can shift: for example, many with a vegan diet sooner or later find meat eating in general to be repulsive. This kind of shift can be expressed within the MSR model as a change in the lensing of personal gustatory awareness so that the entire class of zoological life is identified with more directly. The scope of empathy has expanded so that all creatures with ‘two eyes and a mother’ are seen in a context of kinship rather than predation.

Enslavement is another example of how the lens of human awareness has changed. For millennia slavery was practiced in various cultures much like eating meat is practiced now. It was a fact of life that people of a different social class or race, or women or children, could be treated as slaves by the dominant group, or by men or adults. The scope of empathy was so contracted* by default that even members of the same human species were identified somewhere between pet and food rather than as friends or family. As this scope of awareness (which is ultimately identical with empathy) expanded, those who were on the leading edge of the expansion and those who were on the trailing edge began to see each other in polarized terms. There is a psychological mechanism at work which fosters the projection of negative qualities onto the opposing group. In the case of 19th century American slavery, this opposition manifested in the Civil War.

Possibly all of the most divisive issues in society are about perception and how empathy is scoped. Is it an embryo or an unborn child? Are the poor part of the human family or are they pests? Should employees have rights as equals with employers, or does wealth confer on employers a right to treat employees more like domesticated animals? All of these questions are contested within the lives of individuals, families, and societies and would fall under the middle range of the three-tiered view of the MSR spectrum: The Phoric scope of awareness.

Phoric range: Consciousness is personal and interpersonal narrative with a clearly delineated first person subject, second person social, and third person object division. Subjective experience is intangible and difficult to categorize in a linear hierarchy. Social experience is intangible but semiotically grounded in gestures and expressions of the body. Consider the difference between the human ‘voice’ and the ‘sounds’ that we hear other animals make. The further apart the participants are from each other, the more their participation is de-personalized. Objective experience (more accurately objective-facing or public-facing experience) is totally depersonalized and presented as tangible objects rather than bodies. Tangible objects are fairly easy to stratify by time/space scale: Roughly human sized or larger animals are studied in a context of zoology. Smaller organisms and cells comprise the field of biology. As the ‘bodies’ get smaller and lives get shorter/faster relative to our own, the scope of our empathy contracts (unless perhaps you’re a microbiologist), so that we tend to consider the physical presence of microorganisms and viruses as somewhere in between bodies and objects.

Even though we see more and more evidence of objects on these sub-cellular scales behaving with seeming intelligence or responsiveness, it is difficult to think of them as beings rather than mechanical structures. Plants, even though their size can vary even more than that of animals, are so alien to our aesthetic sense of ourselves that they tend to be categorized in the lower empathy ranges: Food rather than friends, fiber rather than flesh. This again all pertains to the boundary between the personal or phoric range of the MSR spectrum and the sub-personal or semaphoric range. The personal view of an external semaphore is an object (morphic phenomenon). The morphic scope is a reflection within the phoric range of experiences which are perceptually qualified as impersonal but tangible. It is a range populated by solid bodies, liquids, and gases which are animated by intangible ‘forces’ or ‘energies’**. Depending on who is judging those energies and the scale and aesthetics of the object perceived, the force or energy behind the behavior of the body is presumed to be somewhere along an axis which extends from ‘person’, where full-fledged subjective intent governs the body’s behavior, to ‘mechanism’, where behaviors are governed by impersonal physical forces which are automatic and unintentional.

Zooming in on this boundary between sentience and automaticity, we can isolate a guiding principle in which ‘signals’ embody the translation between mechanical-morphic forms and metric-dynamic functions which are supposed to operate without sensation, and those events which are perceived with participatory qualities such as feeling, thinking, seeing, etc. While this sub-personal level is very distant from our personal scope of empathy, it is no less controversial as far as the acrimony between those who perceive no special difference between sensation and mechanical events, and those who perceive a clear dichotomy which cannot be bridged from the bottom up. To the former group, the difference between signal (semaphore) and physical function (let’s call it ‘metamorph’) is purely a semantic convention, and those who are on the far end of the latter group appear as technophobes or religious fanatics. To the latter group, the difference between feelings and functions is of the utmost significance, even to divine vs diabolical extremes. For the creationist and the anti-abortionist, human life is not divisible into mere operations of genetic objects or evolving animal species. Their perception of the animating force of human behavior is not mere stochastic computation and thermodynamics, but ‘free will’ and perhaps the sacred ‘soul’. What is going on here? Where are these ideas of supernatural influences coming from and why do they remain popular in spite of centuries of scientific enlightenment?

This is where the third level of the spectrum comes in, the metaphoric or holophoric range.

To review: Semaphoric: Consciousness on this level is seen as limited to signal-based interactions. The expectation of a capacity to send and receive ‘signs’ or ‘messages’ is an interesting place to spend some time because it is so poorly defined within science. Electromagnetic signals are described in terms of charge or attraction/repulsion, but they are at the same time presumed to be unexperienced. Computer science takes signal for granted. It is a body of knowledge which begins with an assumption that there already is hardware which has some capacity for input, output, storage, and comparison of ‘data’. Again, the phenomenal content of this data processing is poorly understood, and it is easy to grant proto-experiential qualities to programs when we want them to seem intelligent, or to withdraw those qualities when we want to see them as completely controllable or programmable. Data is the semaphoric equivalent of body on the phoric level. The data side of the semaphore is the generic, syntactic, outside view of the signal. Data is a fictional ‘packet’ or ‘digit’ abstractly ‘moving’ through a series of concrete mechanical states of the physical hardware. There is widespread confusion over this, and people disagree about what the relation between data, information, and experience is. MSR allows us to see the entire unit as semaphore: a sensory-motive phenomenon which is maximally contracted from transpersonal unity and minimally presented as a sub-personal unit.

Like the vegan who no longer sees meat as food, the software developer or cognitive scientist may not see data as a fictional abstraction overlaid on top of the material conditions of electronic components, but instead as carriers of a kind of proto-phenomenal currency which can learn and understand. Data for the programmer may seem intrinsically semantic – units whose logical constraints make them building blocks of thought and knowledge that add up to more than the sum of their parts. There is a sense that data is in and of itself informative, and through additional processing can be enhanced to the status of ‘information’.

In my view, this blurring of the lines between sensation, signal, data, and information reflects the psychology of this moment in the history of human consciousness. It is the Post-Enlightenment version of superstition (if we want to be pejorative) or re-enchantment (if we want to be supportive). Where the pre-Enlightenment mind was comfortable blurring the lines between physical events and supernatural influences, the sophisticated thinker of the 21st century has no qualms about seeing human experience as a vast collection of data signals in a biochemical computer network. Where it was once popular among the most enlightened to see the work of God in our everyday life, it is now the image of the machine which has captured the imagination of professional thinkers and amateur enthusiasts alike. Everything is a ‘system’. Every human experience traces back to a cause in the body, its cells and molecules, and to the blind mechanism of their aggregate statistical evolutions.

To recap: The MSR model proposes that all of nature can be modeled meaningfully within a ‘spectrum of perceivability’ framework. This spectrum can be divided into any number of qualitative ranges, but the number of partitions used has a defining effect on the character of the spectrum as a whole. The ‘lower’, semaphoric or ‘signal’ end of the spectrum presents a world of sub-personal sensations or impulses which relate to each other as impersonal data processes. Whether this perception is valid in an objective sense, or whether it is the result of the contraction of empathy that characterizes the relation between the personal scope of awareness and its objectification of the sub-personal, is a question which itself is subject to the same question. If you don’t believe that consciousness is more fundamental than matter, then you aren’t going to believe that your sensitivity has an effect on how objective phenomena are defined. If you already see personal consciousness as a function of data-processing organic chemistry, then you’re not going to want to take seriously the idea that chemical bonding is driven by sensory-empathic instincts rather than mathematical law. If you’re on the other end of the psychological spectrum, however, it may be difficult to imagine why anyone would even want to deny the possibility that our own consciousness is composed of authentic and irreducible feelings.

In either case, we can probably all agree that activity on the microscopic scale seems less willful and more automatic than the activity which we participate in as human beings. Those who favor the bottom-up view see this ‘emergence’ of willful appearance as a kind of illusion, and hold that all choices we make are actually predetermined by the mechanics of physical conditions. Those who favor the top-down view may also see the appearance of human will as an illusion, but one driven by supernatural influences and entities rather than mathematical ones. Thus, the personal range of awareness is bounded on the bottom by semaphore (sensation <> signal < || > data <> information) and on the top by what I call metaphor (fate <> synchronicity < || > intuition <> divinity).

As we move above the personal level, with its personal subject, social groups, and impersonal objects, to the transpersonal level, the significance of our personal will increases. Even though religiosity tends to impose limits on human will in the face of overwhelming influence from divine will, there is an equally powerful tendency to elevate individual human will to a super-significant role. The conscience or superego is the mediator between the personal self and the transpersonal. It even appears as a metaphor in cartoons as the angel and devil on the shoulder. Most religious practices stress the responsibility of the individual to align their personal will to the will of God by finding and following the better angels of conscience, or else suffer the consequences. The consequences range from the mild forms of disappointing reincarnation or being stuck in repeating cycles of karma to Earth-shaking consequences for the entire universe (as in Scientology). From the most extreme transpersonal perspective, the personal level of will is either inflated, so that every action a person takes, including what they choose to think and feel, is a tribute or affront to God and brings us closer to paradise or damnation, or, sometimes simultaneously, it is deflated or degraded, so that the entirety of human effort is pathetic and futile in the face of a Higher Power.