Notes on Privacy

The debates on privacy which have been circulating since the dawn of the internet age tend to focus either on the immutable rights of private companies to control their intellectual property or on the obsolescence of the notion that actual people can control access to their personal information. There’s an interesting hypocrisy there: the former rights are represented as pillars of civilized society, while the latter expectations are represented as quaint but irrelevant luxuries of a bygone era.

This double standard aside, the issue of privacy itself is never discussed. What is it, and how do we explain its existence within the framework of science? To me, the term privacy as applied to physics is in some ways more useful than consciousness. When we talk about private information being leaked or made public, we really mean that the information can now be accessed by unintended private parties. There is really no scientific support for the idea of a truly ‘public’ perspective, ontologically speaking. All information exists only within some interpreter’s sensory input and information-processing capacity. While few would argue that there is no universe beyond our human experience of it, who can say that there is no universe beyond *any* experience of it? Just because I don’t see it doesn’t mean it doesn’t look like something, but if nothing can see it, can we really say that it looks like something?

Privacy would be more of a problem for theoretical physics than it is for internet users, if physicists were to try to explain it. It is through the problems which have arisen with the advent of widespread computation that we can glimpse the fundamental issue with our worldview and with our legacy understanding of its physics. With identity theft, pirated software, appropriated endorsements, data mining, and now PRISM, it should be obvious that technology is exposing something about privacy itself which was not an issue before.

The physics of privacy that I propose suggests that by making our experiences public through a persistent medium, we are trading one kind of entropy for another. When we release an aspect of our private life into a public network, the soft, warm blur of inner sense is exposed to the cold, hard structure of outer knowledge. It is an act which is thermodynamically irreversible – a fact which politicians seem slow to understand, as the cover-up of the act invariably seems to be the easier transgression to discover and prove. The cover-up alerts us to the initial crime, suggests knowledge of guilt, and reveals the criminal intent to conceal that guilt. The same thing likely occurs on a personal level: the subjects most threatening to people’s marriages and careers are probably those which could be found by searching for purging behavior and for keywords related to embarrassment.

As the high-entropy fuzziness of inner life is frozen into the low-entropy public record, a new kind of entropy over who can access this record is introduced. Security issues stem from the same source as both IP law issues and surveillance issues. The abilities to remain anonymous, to expose anonymity, to spoof identifiers leading to identification, etc., are all examples of the shadow of private entropy cast into the public realm. There’s no getting around it. Identity simply cannot be pinned down 100% – that kind of personal entropy can only be silenced personally. Only we know for sure that we are ourselves, and that certainty, that primordial negentropy, is the only absolute which we can directly experience. Descartes’ cogito is a personal statement of that absolute certainty (Je pense, donc je suis: I think, therefore I am), although I would say that he was too narrow in identifying thought in particular as the essence of subjectivity. Indeed, thinking is not something that we notice until we are a few years old, and it can be pushed into the background of our awareness through a variety of techniques. I would say instead that it is the sense of privacy which is the absolute: solace, solitude, solipsism – the sense of being apart from all that can be felt, seen, known, and done. There is a sense of a figurative ‘place’ where ‘we’ are, separate from and untouchable by anything public.

This sense seems to be corroborated by neuroscience as well, since no instrument of public discovery seems able to find this place. I don’t see this as anything religious or mystical (though religion and mysticism do seek to explain this sense more than science has), but rather as evidence that our understanding of physics is incomplete until we can account for privacy. Privacy should be understood as something which is as real as energy or matter; in fact, it should be understood as that which divides the two and discerns the difference. Attention, the intention to reveal or conceal, and the oscillation among these three are at the heart of all identity, from human beings to molecules. The control of uncertainty through camouflage, pretending, and outright deception has been an issue in biology almost from the start. Before biology, concealment seems to have been limited to unintentional circumstances of placement and obstruction, although that could be a limitation of our perception as well. Since what we can see of another’s privacy may never be what it appears, it stands to reason that our own privacy may never be able to play the role of impartial public observer. Privacy is made of bias, and that bias is the relativistic warping of perception itself.

Privacy and Social Media

To continue with the idea of information entropy as it relates to privacy: social media acts as a laboratory for these kinds of issues. Before Facebook, the notion of friendship floated on a cushion of consensual entropy: politeness. As the song goes, “Don’t ask me what I think of you, I might not give the answer that you want me to.” Whom one considered a friend was largely a subjective matter with high public entropy. Even when friendship was declared openly, there was no binding agreement, and it was effortless for sociable people to retain many asymmetric relations. Politeness has always been part of the security apparatus of those who are powerful or popular. Nobility and politeness have a curious relation, as the well-heeled are expected to embody exemplary breeding but also have license to employ rudeness and blunt honesty at will. The haughtiness of high position consists in reserving one’s own right to expose others’ faults while being protected from others’ ability to do the same.

Facebook, while not the first social network to employ a structure of friendship granting, has made the most of it. From the start, the agenda of Facebook has been to neutralize the power of politeness and to encourage the public declaration of friendship as a binding, binary statement: yes, you are my friend, or (no response). Unfriending someone is a political act which can have real implications. Even failing to respond to someone’s friend request can carry social currency. The result is a tacit bias toward liberal friending policies, and a consequent need for filtering to control who is treated as a friend and who is treated as a potential friend, tolerated acquaintance, frenemy, etc. Google Plus offers a more explicit system for managing this non-consensual social entropy, one which more conveniently permits social asymmetry.

Twitter has wound up playing an unusual role in which the privacy of elites is protected in one sense and exposed in another. Unlike other social networks, Twitter limits posts to 140 characters, a constraint which came from the desire to make tweets compatible with SMS. This has the unintended consequence of providing a very fast stream that demands little investment of attention. For a celebrity who wants to retain their popularity and relevance, it is an ideal way to keep in touch with large numbers of fans without the expectation of social involvement that a richer communication system implies. It gives back some of the latitude which Facebook takes away: you don’t have friends on Twitter, you have followers. It is not considered much of a slight not to follow someone back, nor is it considered a threat to follow someone you don’t know. In a way, Twitter makes controlled stalking acceptable, just as Facebook makes being nosy about someone’s friends acceptable.

Hacktivist as hero, villain, genius, and clown

More than enough has been written on the subject of changing attitudes toward technoverts (geeks, nerds, dorks, dweebs, et al.) over the last three decades, but the most contentious figure to come out of the computer era has been the one who is skilled at wielding the power to reveal and conceal. Early on, in movies like WarGames and The Net, there was a sense of support for the individual underdog against the impersonal machine. Even R2-D2 in the original Star Wars played David to the Death Star’s Goliath computer while secretly connecting to it. The tide began to turn, it seems, in the wake of Napster, which unleashed a worldwide celebration of music sharing, to the horror of those who had previously enjoyed a monopoly over the distribution of music. Since then, names like Anonymous, Assange, and Snowden have aroused increasingly polarized feelings.

The counter-narrative of the hacker as villain, although always present within the political and financial power structures as a matter of protection, has made the hacker a new kind of arch-enemy in the eyes of many. This is delicate territory for the media. Journalism, like nobility, floats on a layer of politeness. To continue to be able to reveal some things, it must conceal its sources. The presence of an Assange or a Snowden presents a complicated issue. If journalists befriend the hacker/whistleblower/whistleblower-enabler, they become linked to that figure’s authority-challenging values, but if they vilify them, they indict their own methods and undermine their own moral authority and David vs Goliath reputation.

Of course, it’s not just the issue of whistleblowing in general but the specific character of the whistleblower and of the organization they are exposing which is important. This is not a simple matter of legal principle, since whether the ability to access protected information is seen as good, bad, lawful, chaotic, admirable, frivolous, etc. really depends on whom in society we support. It all depends on whether the target of the breach is themselves good, bad, lawful, chaotic, etc. In America in particular, we are of two minds about justice. We love the Dirty Harry style of vigilante justice on film, but in reality we would consider such acts to be terrorism. We like the idea of democracy in theory, but when it comes to actual exercises of freedom of speech and assembly in protest, we break out the tear gas and shake our heads at the naive idealists.

Twitter fits in here as well. It is as much the playground of celebrities to flirt with their audience as it is the authentic carrier of news beyond the control of the media. It too can be used for nefarious purposes. Individuals and groups can be tracked, disinformation and confusion can be spread. David and Goliath can both imitate each other, and the physics of privacy and publicity have given rise to a new kind of ammunition in a new kind of perpetual war.

Quora: What effect has the computer had on philosophy?

What effect has the computer had on philosophy in general and philosophy of mind in particular?

Obviously, computing has facilitated a lot of scientific advances that allow us to study the brain. e.g., neuroscience research would not exist as it does today without the computer. However, I’m more interested in how theories of computation, computer science and computer metaphors have shaped brain research, philosophy of mind, our understanding of human intelligence and the big questions we are currently asking. (Quora)

I’m not familiar enough with the development of philosophy of mind in the academic sense to comment on it, but the influence of the computer on popular philosophy includes the relevance of themes such as these:

Simulation Dualism: The success of computer graphics and games has had a profound effect on the believability of the idea of consciousness-as-simulation. Films like The Matrix have, for better or worse, updated Plato’s Allegory of the Cave for the cybernetic era. Unlike a Cartesian-style substance dualism, where mind and body are separate substances, the modern version is a kind of property dualism in which the hardware-software relation stands in as a metaphor for the body-mind relation. Just as software is an ordered collection of the functional states of hardware, the mind or self is taken to be a similarly ordered collection of states of the brain, or neurons, or perhaps something smaller than that (microtubules, biophotons, etc.).

Digital Emergence: From a young age we now learn, at least in a general sense, how the complex organization of pixels or bits leads to something which we see as an image or hear as music. We understand how combinations of generic digits or simple rules can be experienced as filled with aesthetic quality. Terms like ‘random’ and ‘virtual’ have become part of the vernacular, each having been made more relevant through experience with computers. The revelation of genetic sequences has further bolstered the philosophical stance of a modern, programmatic determinism. Through computational mathematics, evolutionary biology, and neuroscience, a fully impersonal explanation of personhood seems imminent (or a matter of settled science, depending on whom you ask). This emergence of personal consciousness from impersonal unconsciousness is thought to be a merely semantic formality, rather than a physical or functional one. Just as the behavior of a flock of birds flying in formation can be explained as emerging inevitably from the movements of each individual bird responding to the bird in front of it, the complex swarm of ideas and feelings that we experience is thought to emerge just as inevitably from the aggregate behavior of neural processes.

Information Supremacy: One impact of the computer on society since the 1980s has been to introduce Information Technology as an economic sector. This shift away from manufacturing and heavy industry seems to have paralleled a historic shift in philosophy from materialism to functionalism. It is no longer fashionable to think in terms of consciousness emerging from particular substances, but rather in terms of particular manipulations of data or information. The work of mathematicians and scientists such as Kurt Gödel, Claude Shannon, and Alan Turing redefined the theory of what math can and cannot do, making information more physical in a sense, and making physics more informational. Douglas Hofstadter’s books, such as Gödel, Escher, Bach: an Eternal Golden Braid, continue to have a popular influence, bringing the ideas of strange loops and self-referential logic to the forefront. Computationally driven ideas like chaos theory, fractal mathematics, and Bayesian statistics have also gained traction as popular Big-Picture philosophical principles.

The Game of Life: Biologist Richard Dawkins’ The Blind Watchmaker (following his other widely popular and influential work, The Selfish Gene) used a program to illustrate how natural selection produces biomorphs from a few simple genetic rules and mutation probabilities. An earlier program, Conway’s Game of Life, similarly demonstrates how life-like patterns evolve without input from a user, given only initial conditions and simple mathematical rules. Philosopher Daniel Dennett has been another extremely popular influence, maintaining both a ‘brain-as-computer’ view and a ‘consciousness-as-pure-evolutionary-adaptation’ position. Dawkins coined the word ‘meme’ in The Selfish Gene, a word which has now itself become a meme. Dennett makes use of the concept as well, casting the repetitive power of memes as the blind architect of culture. Author Susan Blackmore further spread the meme meme with her book The Meme Machine. I see all of these ideas as fundamentally connected: they apply the information-first perspective to life and consciousness. To me they mark the farthest extent of the pendulum swing in philosophy toward the West, a critique of naturalized subjectivity and an embrace of computational inevitability.
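As a concrete illustration of what ‘simple mathematical rules’ means here, the following is a minimal Python sketch of Conway’s standard rules: a dead cell with exactly three live neighbors comes alive, a live cell with two or three live neighbors survives, and every other cell is dead in the next generation. The set-of-coordinates representation and the names `step` and `normalize` are illustrative assumptions, not taken from any particular program. Seeded with the five-cell pattern known as a glider, the grid reproduces the same shape one cell further along the diagonal every four generations, with no further input from a user.

```python
from collections import Counter

Cell = tuple[int, int]


def step(live: set[Cell]) -> set[Cell]:
    """Advance one generation under Conway's rules (birth on 3, survival on 2 or 3)."""
    # Count live neighbors for every cell that touches at least one live cell.
    counts = Counter(
        (x + dx, y + dy)
        for (x, y) in live
        for dx in (-1, 0, 1)
        for dy in (-1, 0, 1)
        if (dx, dy) != (0, 0)
    )
    # Cells absent from `counts` have zero live neighbors, so they are dead next turn.
    return {cell for cell, n in counts.items() if n == 3 or (n == 2 and cell in live)}


def normalize(cells: set[Cell]) -> set[Cell]:
    """Shift a pattern so its top-left corner sits at (0, 0), to compare shapes only."""
    min_x = min(x for x, _ in cells)
    min_y = min(y for _, y in cells)
    return {(x - min_x, y - min_y) for x, y in cells}


# A glider (x = column, y = row, y increasing downward). No input after this point.
glider = {(1, 0), (2, 1), (0, 2), (1, 2), (2, 2)}

state = glider
for _ in range(4):
    state = step(state)

print(normalize(state) == normalize(glider))         # True: same shape
print(state == {(x + 1, y + 1) for x, y in glider})  # True: moved one cell diagonally
```

Storing only the live cells in a set keeps the grid unbounded, so the glider can keep travelling without ever hitting an edge.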

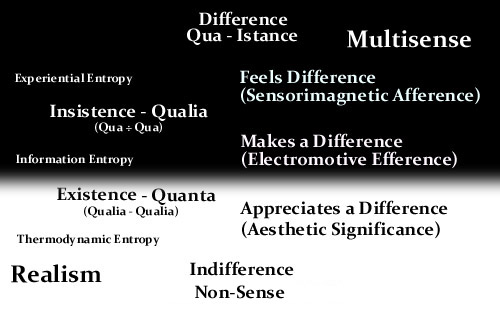

The Interior Strikes Back: When philosopher David Chalmers introduced the Hard Problem of Consciousness, he opened the door to questioning the eliminative materialism of Dawkins and Dennett. His contribution at that time, along with those of philosophers John Searle and Galen Strawson, has been to show the limitations of mechanism. The Hard Problem asks innocently: why is there any conscious experience at all, given that these information processes are driven entirely by their own automatic agendas? Chalmers and Strawson have championed the consideration of panpsychism or panexperientialism, the view that consciousness is a fundamental ingredient in the universe like charge, or perhaps *the* fundamental ingredient of the universe. My own view, Multisense Realism, is based on the same kinds of observations as those of Chalmers and Strawson: that physics and mathematics have a blind spot for some aspects, perhaps the most important aspects, of consciousness. Neuroscientist Raymond Tallis’ book Aping Mankind: Neuromania, Darwinitis and the Misrepresentation of Humanity provides a focused critique of the evidence upon which reductionist perspectives of human consciousness are built.

The bottom line for me is that computers, while wonderful tools, exploit a particular facet of consciousness: counting. The elaboration of counting into mathematics and calculus-based physics has undoubtedly been the most powerful influence on civilization in the last 400 years, and its success has been based on the power to control exterior bodies in public space. With the development of designer pharmaceuticals and more immersive internet experiences, we have good reason to expect that this power to control extends to our entire existence. Even if the computer’s universality is taken as evidence that doing and knowing are indeed all there is to the universe, including ourselves, it is nonetheless difficult to ignore that beyond all that seems to exist, there is some thing or some one else who seems to ‘insist’. On further inspection, all of the simulations, games, memes, and information can be understood to supervene on a deeper level of nature. When addressing the ultimate questions, it is no longer adequate to take the omniscient voyeur and his ‘view from nowhere’ for granted. The universe as a program makes no sense without a user, and it makes no sense for a program to develop a user for itself.

It’s not the reflexive looping or self-reference, not the representation or semiotics or Turing emulation that is the problem; it is the aesthetic presentation itself. We have become so familiar with video screens and keyboards that we forget that those things are for the user, not the computer. The computer’s world, if it had a world, would be a completely anesthetic codescape with no plausible mechanism for, or justification of, any kind of aesthetic decoding as experience. Even beyond consciousness, computation cannot even justify a presentation of geometry. There is no need to draw a triangle itself if you already have the coordinates and a triangular description to access at any time. Simulations need not actually occur as experiences; that would be magical and redundant. It would be like the government keeping a movie of every person’s life instead of just keeping track of driver’s licenses, birth certificates, tax returns, medical records, etc. A computer has no need to actualize or simulate; again, that is purely for the aesthetic satisfaction of the user.

Blue Roses, Blue Pills, and the Significance of the Imposter

What makes that which is authentic more significant than that which is fake or ‘false’? Why do proprietary qualities carry more significance than generic qualities? Commonality vs uniqueness is a theme which I come back to again and again. Even in this dichotomy of common vs unique, there is a mathematical meaning which portrays uniqueness as simply a common property of counting one out of many, and there is a qualitative sense of ‘unique’ being novel and unprecedented.

The notion of authenticity seems to carry a certain intensity all by itself. Like consciousness, authenticity seems almost painfully self-evident. What does it really mean, though, for someone or something to be original? To be absolutely novel in some sense?

The Western mindset tends toward extremism when considering issues of propriety. The significance of ownership is exaggerated, but specifically ownership as an abstraction: generic ownership. Under Western commercialism, rights to own and control others are protected vigilantly, as long as that ownership and control is free from personal qualities.

The thing which makes a State more powerful than a Chiefdom is the same thing which makes the Western approach so invested in property rather than people. In a Chiefdom, every time the chief dies, the civilization is thrown into turmoil. In a State, no one person or group of people personifies the society, they are instead public officials holding public office for a limited time. Political parties and ideologies can linger indefinitely, policies can become permanent, but individual people flow through it as materially important, yet ultimately disposable resources.

The metaphysical and social implications of this shift from the personal to the impersonal are profound. The metaphysical implications can be modeled mathematically as a shift from the cardinal to the ordinal. In a Chiefdom, rule is carried out by specific individuals, so cardinality is the underlying character. In a State, ordinality is emphasized, because government has become more of a super-human function. It’s an ongoing sequential process, and the members within it (temporarily) are motivated by their own ambitions as they would be as part of a Chiefdom, but they are also motivated to defend the collective investment in the permanence of the hierarchy.

At the same time, cardinality can apply to the State, and ordinality would apply to a Chiefdom (or gang). The state imposes cardinality – mass producing and mass controlling through counting systems. Identification numbers are produced and recorded. Individuals under a State are no longer addressed as persons individually but as members of a demographic class within their databases. Lawbreaker, head of household, homeowner, student, etc. This information is never explicitly woven into a personal portrait of the living, laughing, loving person themselves, but rather is retained as skeletal evidence of activities. Addresses, family names, employment history, driver’s license, dental records. It is essential for control that identity be validated – but only in form, not in content. The personality of the consumer-citizen (consumiten?) is irrelevant, to an almost impossible degree – yet some ghost of conscience compels an appearance of sentiment to the contrary.

World War II, which really should be understood as the second half of a single war for control of human civilization on a global level for the first time, was a narrative about embodied mechanization and depersonalization. The narrative we got in the West was that Fascism, Communism, and Nazism were totalitarian ideologies of depersonalization. The threat was of authentic personhood eclipsed permanently by a ruthlessly impersonal agenda. These were different forms of distilled Statehood, three diffracted shadow projections of the same underlying social order transitioning into cold automatism. The mania for refining and isolating active ingredients in the 20th century, from DNA to LSD to the quantum, ran into unexpected trouble when it was applied to humanity: racist theories and eugenics, Social Darwinism, massive ethnic cleansings and purges. Were we unconsciously looking for our absent personhood, our authenticity which was sold to the collective, or rather, to the immortal un-collective? Did we project some kind of phantom limb of our evacuated self into the public world, hiding in matter, bodies, blood, and heredity?

So what is authenticity? What is an imposter? Does a blue rose become less important if it was dyed blue than if it grew that way? Why should it make a difference? (We tell ourselves, with our Westernized intellect, that it shouldn’t.) If you never found out that the rose was ‘only’ dyed blue, would you be wrong to enjoy it as if it were genuine? Why would you feel fooled if you found out that you were wrong about it being genuine, but feel good if you found out that you were wrong about it being ‘fake’?

Who is fake? Who is phoney? Who is sold out? (Does anyone call anyone a ‘sellout’ anymore, or are we now pretty comfortable with the idea that there is nobody left who would not happily sell out if they only had the chance?) These are terms of accusation, of righteous judgment against those who have become enemies of authenticity – who have forsaken humanity itself for some ‘mere’ social-political advantage.

There is a dialectic between pride and shame which connects the fake and the genuine, with the good feeling of finding the latter and the disgust and loss of discovering the former. The irony is that the fake is always perpetrated without shame, or with shame concealed, but the genuine is often filled with shame and vulnerability; that’s somehow part of what makes it genuine. Its authority comes from within our own personal participation, not from indirect knowledge, not from the impersonal un-collective of the Market-state.

Where do we go now that both the personal and impersonal approaches have been found fatally flawed? Can we regain what has been lost, or is it too late? Does it even matter anymore? If mass media is any indication, we have begun not only to accept the imposter, but to elevate its significance to the highest level. What is an actor or a model if not a kind of template, a vessel for ideal personal qualities made impersonal? To be one is to be celebrated for acting like yourself, or for being a character – a proprietary character, made generic by mass distribution of their likeness. Branded celebrity. A currency of deferred personalization – vanity as commodity. Perhaps in the long run, this was the killer app that the Nazis and the Russians and the Japanese didn’t have: the promiscuous use of mass media to reflect back super-saturated simulations of personhood to the depersonalized subjects of the Market-state.

More than nuclear weapons, it was Hollywood, and Mickey Mouse, and Levi’s and Coca-Cola which won the world. Nuclear memes. Elvis and Marilyn Monroe. This process too has now become ultra-automated. The problem with the celebrity machine was that it depended on individual persons. Even though they could be disposed of and recycled, it was not until reality TV and the new generation of talent shows that the power to make fame was openly elevated above celebrity itself. Fame is increasingly seen as democratically elected, but at the same time understood to be a fully commercial enterprise, controlled by an elite. The solution to the problem of overcoming our rejection of the imposter has been a combination of (1) suppressing the authentic; (2) conditioning the acceptance of the inauthentic; and, most importantly, (3) obscuring the difference between the two.

I’m not blaming anyone for this, as much as I might like to. I’m not a Marxist or Libertarian, and I’m not advocating a return to an idealized pre-State Anarchy (though all of those are tempting in their own ways). I’m not anti-Capitalist per se, but Capitalism is one of the names we use to refer to some of the most pervasive effects of this post-Enlightenment pendulum swing towards quantitative supremacy. I see this arc of human history, lurching back from the collapse of the West’s version of qualitative supremacy in the wake of the Dark Ages, as a natural, if not inevitable, oscillation. I can’t completely accept it, since the extremes are so awful for so long, but then again, maybe it has always been awful. Objectively, it would seem that our contemporary First World ennui is a walk in the park compared to the lot of any other large group in history – or is that part of the mythology of modernism?

It seems to me that the darkness of the contemporary world is more total, more asphyxiating than any which could be conceived of in history, but it also seems like it’s probably not that bad for most people, most of the time. Utopia or Oblivion – that’s what Buckminster Fuller said. Is it still true, though, or is that a utopian dream as well? Is the singularity just one more co-opted meme of super-signification? Is it a false light at the end of the sold-out tunnel? An imposter for the resurrection? Is technology the Blue Pill? I guess if that’s true, having an Occidental spirituality which safely elevates the disowned authentic self into a science fiction is a big improvement over having it spill out as a compulsion for racial purity. A utopia driven by technology at least doesn’t require an impossible alignment of human values forever. Maybe this Blue Pill is as Red as it gets?

Making Sense of Computation

In my view, matter and energy are the publicly reflected tokens of sense and motive respectively. As human experiences, we are a complicated thing to try to use as an example – like trying to learn arithmetic by starting with an enormous differential equation. When we look at a brain, we are using the eyes of a simian body. That’s what the experience of a person looks like when it is stepped all the way down from human experience, to animal experience, to cellular experience, to molecular experience, and all the way back up to the animal experience level. Plus we are seeing it from the wrong angle. If I’m right, experience is a measure of time, not space, so looking at the body associated with an experience that lasts 80 or 100 years at a sampling rate of a few milliseconds would give a radically truncated view, even if we were looking at it in its native, subjective form. Every moment we are alive, we are surfing on a wave that has been growing since our birth – growing not just in sync with clock time, but changing in response to the significance of the experiences in which we participate directly. This is what I mean by sense: a concretely real accumulation of experience, a single wave in constant modulation as the local surface of an arbitrarily deep ocean.

Information is not sense, and neither is it matter or energy. Information is the shadow of all of these, and of their relation to each other, which is cast by sense. Information is like sense in being neither substantial nor insubstantial, but it is also the opposite of sense. Matter, energy, and information are all opposite to each other and opposite to sense. They are the projections of sense. If you break the word information down into three parts, the ‘in’ would be sensory input, the ‘form’ would be ‘matter-space’, and the ‘ation’ would be ‘energy-time’. When most people think about information, though, they undersignify the input/output aspect, the ‘in’, which is sense, and conflate consciousness with senseless formations. Formations with no participating perceiver are non-sense and no-thing.

The difference between sense and information is that sense is anchored tangibly in the totality of events in all of history. It is the meta-firmament, the Absolute, and it potentially makes sense of itself in every sense modality. Information only makes sense from one particular angle or method of interpretation. It is a facade. As soon as information is removed from its context, its ungrounded, superficial nature is exposed. Information so removed does not react or adapt to make itself understood; it is sterile and evacuated of feeling or being. It is purely a feeling being’s idea of doing or knowing and does not exist independently of its ‘host’. Because of this it is tempting to conceptualize information as self-directing memes, but that would only be true figuratively. In an absolute sense, the meme concept is a figure-ground inversion, i.e., it puts the cart before the horse and sucks us into strong computationalism and the pathetic fallacy. From what I can see, information has no autonomy, no motive. It is an inert recording of past motives and sensations.

Previously, I have written about computation, numbers, and mathematics as being the flattest category of qualia: flattest in the sense of being almost purely a tool for knowing or doing, which has to rely on being output in some aesthetic form to yield any feeling or ‘being’.

Computation can be represented publicly through material things like positions of beads on an abacus, the turns of mechanical gears, the magnetic dispositions of microelectronic switches, the opening and closing of valves in a plumbing system, the timing and placement of traffic signals on a street grid, etc. All of these bodies rely on the ability to detect or sense each other’s passive states and to respond to them with some motor effect. It makes no difference how it is represented, because the function will be the same. This is precisely the opposite of consciousness, in which rich aesthetic details provide the motivation and significance. Evolutionary functions are never nakedly revealed as a-signifying generic processes. For humans, food and sex are profoundly aesthetic, social engagements, not just automatic functions.

Computation can also be represented publicly through symbols. One step removed from literally embodied aesthetics, computation can be transferred figuratively between a person’s thoughts and written symbols through the sensory-motor medium of mathematical literacy. We can imagine that there is a similar ferrying of meaning between the mathematician’s thoughts and some non-local source of arithmetic truth. Arithmetic truth seems to us certain, rational, internally consistent, and universal, but it is also impersonal. Arithmetic laws cannot be made proprietary or changed. They are eternal and unchanging. We can only borrow local copies of numbers for temporary use; they cannot be touched or controlled. They represent disembodied knowledge, but no doing, no being, and no feeling.

In the first sense, mathematics is represented by mechanical positions of public bodies, and is therefore almost completely ‘flat’ qualitatively. Binary interactions of go/on and stop/off have no sense to them other than loops and recursive enumeration. In the second sense, a written mathematical language adds more qualia, clothing the naked digital states in conceptual symbols. The language of mathematics allows the thinker to bridge the gap between the public doing of machines and the private knowing of arithmetic truth.

Although strong computationalists will disagree, it seems to me that a deeper understanding of computation reveals that arithmetic truth itself requires an even deeper set of axioms which are pre-arithmetic. The third sense of mathematics is the first sense we encounter. Before there is mathematical literacy, there is counting. Counting to three gives way to counting on fingers (digits), as we learn the essential skills of mental focus that counting requires. As we learn more about odd and even numbers, addition and subtraction, the aesthetics of symmetry and succession are not so much introduced into the psyche as foreign concepts as recovered by the psyche as natural, familiar expectations. Math, like music, is felt. Before we can use it to help us know essential truths or to cause existential effects, we have to be able to participate in counting and in solving problems in our mind. When we do these kinds of problems, our awareness must be very focused. We are accessing an impersonal level of truth. Our human bodies and lives are distractions. Machines and computers have always been conspicuously lacking in what people refer to as ‘soul’ or ‘warmth’: feeling, empathy, personality, etc. This is consistent with the view of computation that I am trying to explain. Whatever warmth or personality it can carry must originate in a being – an experience which is anchored in the aesthetic presentation of sense rather than the infinite representation of information.

*or orthomodular inversions, to be more precise

Ehh, How Do You Say…

The use of fillers in language is not limited to spoken communication.

In American Sign Language, UM can be signed with open-8 held at chin, palm in, eyebrows down (similar to FAVORITE); or bilateral symmetric bent-V, palm out, repeated axial rotation of wrist (similar to QUOTE).

This is interesting to me because it helps differentiate communication which is unfolding in time from communication which is spatially inscribed. When we speak informally, most people use some filler words, sounds, and gestures. Some support for embodied cognition theories has come from studies which show that

“Gestural Conceptual Mapping (congruent gestures) promotes performance. Children who used discrete gestures to solve arithmetic problems, and continuous gestures to solve number estimation, performed better. Thus, action supports thinking if the action is congruent with the thinking.”

The effective gestures that they refer to aren’t exactly fillers, because they mimic or indicate conceptual experiences in a full-body experience. The body is used as a literal metaphor. Other gestures, however, seem relatively meaningless, like filler. There seem to be levels of filler usage, ranging in frequency and intensity from the colorful to the neurotic, in which generic signs are used as ornament or crutch, or like a carrier tone to signal when the speaker is done speaking (know’am’sayin?).

In written language, these fillers are generally only included ironically or to simulate conversational informality. Formal writing needs no filler because there is no relation in real time between participating subjects. The relation with written language was traditionally as an object. The book can’t control whether the reader continues to read or not, so there is no point in gesturing that way. With the advent of real time text communication, we have experimented with emoticons and abbreviations to animate the frozen medium of typed characters. In this article, John McWhorter points out that ‘LOL isn’t funny anymore’ – that it has entered sort of a quasi-filler state where it can mean many different things or not much of anything.

In terms of information entropy, fillers are maximally entropic. Their meaning is uncertain, elastic, irrelevant, but also, and this is cryptic but maybe significant…they point to the meta-conversational level. They refer back to the circumstance of the conversation rather than the conversation itself. As with the speech carrier tone fillers like um… or ehh…, or hand gestures, they refer obliquely to the speakers themselves, to their presence and intent. They are personal, like a signature. Have you ever noticed that when people you have known die, it is their laugh which is most immediately memorable? Or their quirky use of fillers? High information entropy ~ High personal input. Think of your signature compared to typing your name. Again, signatures occur in real time; they represent a moment of subjective will being expressed irrevocably. The collapse of information entropy which takes place in formal, traditional writing is a journey from the private perpetual here of subjectivity to the world of public objects. It is a passage* from the inner semantic physics, through initiative or will, striking a thermodynamically irreversible collision with the page. That event, I think, is the true physical nature of public time – instants where private affect is projected as public effect.

Speakers who are not very fluent in a language seem to employ a lot of fillers. For one thing, fillers buy time to think of the right word, and they signal an appeal for patience, not just on a mechanical level (more data to come, please stand by), but on a personal level as well (forgive me, I don’t know how to say…). Is it my imagination, or are Americans something of an exception to the rule, stereotypically preferring to yell words slowly rather than use the ‘ehh’ filler? Maybe that’s not true, but the stereotype is instructive, as it implies an association between being pushy and adopting the more impersonal, low-entropy communication style.

This has implications for AI as well. Computers can blink a cursor or rotate an hourglass icon at you, and that does convey some semblance of personhood to us, I think, but is it real? I say no. The computer doesn’t improve its performance by making these gestures to you. What we might subtly read as interacting with the computer personally in those hourglass moments is a figment of the Pathetic fallacy rather than evidence of machine sentience. It has a high information entropy in the sense that we don’t know what the computer is doing exactly, or whether it’s going to lock up, but it has no experiential entropy. It is superficially animated and reflects no acknowledgement of the user. Like the book, it is thermodynamically irreversible as far as the user is concerned. We can only wait and hope that it stops hourglassing.

The meanings of filler words in different languages are interesting too. They say things like “you see/you know”, “it means”, “like”, “well”, and “so”. They talk about things being true or actual. “Right?” “OK?”. Acknowledgment of inter-subjective sync with the objective perception. Agreement. Positive feedback. “Do you copy?” relates to “like”…similarity or repetition. Symmetric continuity. Hmm.

*orthomodular transduction, to be pretentiously precise

Entropy, Extropy, and Solitropy

By my reckoning, ‘order’ can only be an aesthetic consideration, which further means that it is dependent on the sensitivity of a given participant. Thermodynamic entropy, therefore, while overlapping with information entropy by some measures, does not overlap by others.

I use the example of compressing a video of a glass of ice melting to illustrate how the image of ice changing over time, being more complex and therefore requiring more resources to compress with a general-purpose video codec, defies the underlying thermodynamic change from low entropy to high entropy. If you compressed a ‘before’ video and an ‘after’ video, the ‘after’ video of the still glass of water would present lower information entropy to the compressor than the video of the ice melting.

All this demonstrates is that not every expression or description of a phenomenon can be reduced to a quantitative expectation. The optical qualities of melting ice from a macroscopic perspective are not isomorphic to other levels, perspectives, and sense modalities.
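The compression half of the melting-ice comparison can be sketched with a general-purpose compressor. This is a toy under loud assumptions: zlib stands in for a video codec, the ‘frames’ are synthetic byte arrays rather than real footage, and `static_video`, `melting_video`, and `compressed_size` are hypothetical helpers written only for illustration. Compressed size is used as a rough proxy for information entropy; nothing here models the thermodynamics of the glass.

```python
import random
import zlib

# Hypothetical frame geometry for a toy grayscale "video" (one byte per pixel).
WIDTH, HEIGHT, FRAMES = 64, 64, 30
FRAME_SIZE = WIDTH * HEIGHT


def static_video(value: int) -> bytes:
    """Every frame identical: a toy stand-in for the glass after the ice has melted."""
    frame = bytes([value]) * FRAME_SIZE
    return frame * FRAMES


def melting_video(seed: int = 0, changes_per_frame: int = 200) -> bytes:
    """A toy stand-in for melting ice (an assumption, not a physical model):
    each frame, a few hundred randomly chosen pixels shift from bright 'ice'
    (255) to irregular 'water' values between 100 and 199."""
    rng = random.Random(seed)  # seeded so the toy is reproducible
    pixels = [255] * FRAME_SIZE  # uniform 'ice' before the first frame is recorded
    out = bytearray()
    for _ in range(FRAMES):
        for _ in range(changes_per_frame):
            i = rng.randrange(FRAME_SIZE)
            pixels[i] = rng.randrange(100, 200)
        out.extend(pixels)  # append a snapshot of the current frame
    return bytes(out)


def compressed_size(raw: bytes) -> int:
    """Bytes after zlib at maximum compression: a rough proxy for information content."""
    return len(zlib.compress(raw, 9))


if __name__ == "__main__":
    after = static_video(150)
    melting = melting_video()
    print("raw sizes:", len(after), len(melting))
    print("compressed 'after':  ", compressed_size(after))
    print("compressed 'melting':", compressed_size(melting))
```

Both toy videos have the same raw size, but the unchanging ‘after’ video compresses to far fewer bytes than the one whose pixels keep shifting, which is the direction the text describes. The random per-frame changes are deliberately crude and say nothing about how real codecs or real ice behave.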

Anyhow, my conjecture uses the term ‘solitropy’, which refers to an aesthetic drive similar to what has been called extropy or Eros, but solitropy is conceived as a quality which is not diametrically opposed to entropy. Simple negentropy would be a reduction in noise, but would not insist on the content of the signal itself improving qualitatively. You can make the type as clear as you like, but that won’t improve what is being said. Solitropy would extend entropy-extropy into a private quality of physics, so that we would be able to formalize in physics, for instance, the difference between the entropy produced by burning down a city and that produced by boiling water.

The Future of Computing — Reuniting Bits and Atoms

A great presentation on computing with hardware and software which are homomorphic to each other. Logical automata (explained from 11:51 to 15:50 in the talk) are just this sort of WYSIWYG architecture, where the software is executed from a bulk raw material, i.e., not gigabytes of memory or a number of processor cores, but square feet or pounds of programmable matter. Atoms, or groups of atoms, are here used directly as bits.

Gershenfeld contrasts our current approach to computing, which requires multiple stages of compiling between disparate formats, with nature, arguing that to match nature more closely we should imitate it by having a consistent, zoomable format at every scale.

In nature, the map is the same graphically regardless of the scope of magnification. Marrying these two concepts, the idea is to design shapes which are themselves instructions, i.e., physical interactions with other physical shapes, so that computation is not encoded but rather embodied. As in biochemistry, the output is a material product, and the machine is itself a self-fabricating machine tool as opposed to a manufacturer of inert objects.

I think that he is probably right that this is the direction of the future in general, “computing aligned with nature” which brings computation into matter. It is compelling to imagine that this kind of embodied computing could be the Holy Grail of nano-engineering, giving us control over virtually anything eventually.

At the same time I can see that there is something which has been overlooked. To quote Deleuze:

“Representation fails to capture the affirmed world of difference. Representation has only a single center, a unique and receding perspective, and in the consequence a false depth. It mediates everything, but mobilizes and moves nothing.”

– Gilles Deleuze, Difference and Repetition, p56

(source http://sleepinginthegreenery.blogspot.co.uk/)

To understand why this universe of embodied computation is not the universe that we live in, Difference is the key. An overhead map is only so useful to us. Even if we can zoom down to the human scale level, we really need to switch to a first person street view to make the transition from outside-looking-down to inside-looking-around. To get further into the subjective view, we would have to have access to feelings and thoughts, so that at some level of description the model of zoomable shapes is less than useless. In our personal awareness, the appearance of neurological structures in our view would be hallucination.

The assumption of this uniformly computational matter, then, while fantastic for our purposes as human beings, would be a catastrophe for the universe in general. It would be the ultimate monoculture, with everything and anything reinvented as collections of positionable nano-Legos. The problem with conformity to a generic, universal structure is not that it won’t work, but that if it does, there will be no Difference possible.

Given Deleuze’s assertion that representation moves nothing, this intention of “reuniting bits with atoms” seems to presume that they were united to begin with, but doesn’t address why they were ever separated. Indeed, if this method of embodied computation is the way of nature, how and why could it ever seem otherwise? Where have these Different perspectives on different levels emerged from, and for what purpose?

I think that on closer inspection, even though this new approach is brilliant and revolutionary in some important ways, it is still founded on a sloppy assumption. It presumes that hardware which looks and acts like the software is identical to it. If this were the case, instead of images we would see the shapes of ganglia and retinal cells, and we would see the same kinds of shapes in place of smells, sounds, and feelings. We could not feel dizzy, but would rather be informed of some vestibular condition by means of these same shapes – which we think of visually or tangibly, but without the Difference, there really is no reason to assume anything perceptual at all.

Once again, even though I am impressed with the futuristic thinking, it still takes us away from the missing piece in physics: privacy, interiority, and qualia. In buying into the universe as an undifferentiated plenum of self-machining bubbles, we are betting that there is no difference between biology, chemistry, and physics. It’s all physics, all surfaces and volumes in public spaces. Is that really what the universe is, though? Could our experience be understood that way if we didn’t have our own familiarity with it already? I think if we lived in a universe that was really all about universal computation, then we would never have separated bits from atoms in the first place. We never would have approached bits as encoded abstractions, because we would have been comfortable already with the universal format.

Instead, the universe appears to be the opposite. On every level, even though there are repeating themes and forms, it is never exactly the same presentation. A whirlpool would not be mistaken for a galaxy and a grain of salt is not the same thing as a cube of ice. In the universe we actually live in, the only thing which seems truly universal is Difference. More universal than mathematics and physics is the variety of sensory qualities and modalities. It is not just formations or embodied information, but direct experience.
