Archive

Diogenes’ Revenge: Cynicism, Semiotics, and the Evaporating Standard

Diogenes was called Kynos — Greek for dog — for his lifestyle and contrariness. It was from this word for dog that we get the word Cynic.

Diogenes is also said to have worked minting coins with his father until he was 60, but was then exiled for debasing the coinage. – source

In comparing the semiotics of C. S. Peirce and Jean Baudrillard, two related themes emerge concerning the nature of signs. Peirce famously used trichotomies to describe the relations among signs, while Baudrillard talked about four stages of simulation, each more removed from authenticity. In Peirce’s formulation, Index, Icon, and Symbol work as separate strategies for encoding meaning. An index is a direct consequence or indication of some reality. An icon is a likeness of some reality. A symbol is a code which has its meaning assigned intentionally.

Baudrillard saw the sign as a succession of adulterations: first, an original reality is copied; second, the copy masks the original in some way; third, a denatured copy appears in which the debasement has been masked; and fourth, a pure simulacrum emerges, a copy with no original, composed only of signs reflecting each other.

Whether we use three categories or four stages, or some other number of partitions along a continuum, an overall pattern can be arranged which suggests a logarithmic evaporation, an evolution from the authentic and local to the generic and universal. Korzybski’s map and territory distinction fits in here too, as human efforts to automate nature result in maps, maps of maps, and maps of all possible mapping.

The history of human timekeeping reveals the earthy roots of time as a social construct based on physical norms. Timekeeping was, from the beginning, linked with government and control of resources.

According to Callisthenes, the Persians were using water clocks in 328 BC to ensure a just and exact distribution of water from qanats to their shareholders for agricultural irrigation. The use of water clocks in Iran, especially in Zeebad, dates back to 500 BC. Later they were also used to determine the exact holy days of pre-Islamic religions, such as the Nowruz, Chelah, or Yalda – the shortest, longest, and equal-length days and nights of the years. The water clocks used in Iran were one of the most practical ancient tools for timing the yearly calendar. source

Anything which burns or flows at a steady rate can be used as a clock. Oil lamps, candles, and incense have been used as clocks, as well as the more familiar sand hourglass, shadow clocks, and clepsydrae (water clocks). During the day, a simple stick in the ground can provide an index of the sun’s position. These kinds of clocks, in which the nature of physics is accessed directly, would correspond to Baudrillard’s first level of simulation – they are faithful copies of the sun’s movement, or of the depletion of some material condition.

Staying within this same agricultural era of civilization, we can understand the birth of currency in the same way. Trading of everyday commodities could be indexed with concentrated physical commodities like livestock, and also other objects like shells which had intrinsic value for being attractive and uncommon, as well as secondary value for being durable and portable objects to trade. In the same way that coins came to replace shells, mechanical clocks and watches came to replace physical index clocks. The notions of time and money differ in that time refers to a commodity beyond the scope of human control while money refers specifically to human control, yet both serve as regulatory standards for civilization, as well as equivalents for each other in many instances (‘man hours’, productivity).

In the next phase of simulation, coins combined the intrinsic and secondary values of things like shells with a mint mark to ensure transactional viability on the token. The icon of money, as Diogenes discovered, can be extended much further than the index, as anything that bears the official seal will be taken as money, regardless of the actual metal content of the coin. The idea of bank notes was as a promise to pay the bearer a sum of coins. In the world of time measurement, the production of clocks, clocktowers, and watches spread the clock face icon around the world, each one synchronized to a local, and eventually a coordinated universal time. Industrial workers were divided into shifts, with each crew punching a timeclock to verify their hours at work and breaks. While the nature of time makes counterfeiting a different kind of prospect, the practice of having others clock out for you or having a cab driver take the long way around to run the meter longer are ways that the iconic nature of the mechanical clock can be exploited. Being one step removed from the physical reality, iconic technologies provide an early opportunity for ‘hacking’.

| physical territory > index | local map > icon | symbol > universal map |
| water clock, sand clock | sundial/clock face | digital timecode |
| trade > shells | coins > check > paper | plastic > digital > virtual |
| production > organization | bonds > stock | futures > derivatives |
| real estate | mortgage, rent | speculation > derivatives |
| genuine aesthetic | imitation synthetic | artificial emulation |
| non-verbal communication | language | data |

The last three decades have been marked by the rise of the digital economy. Paper money and coins have largely been replaced by plastic cards connected to electronic accounts, which have in turn entered the final stage of simulacra – a pure digital encoding. The promissory note iconography and the physical indexicality of wealth have been stripped away, leaving behind a residue of immediate abstraction. The transaction is not a promise, it is instantaneous. It is not wealth, it is only a license to obtain wealth from the coordinated universal system.

Time has entered its symbolic phase as well. The first exposure to computers that consumers had in the 1970s was in the form of digital watches and calculators. Time and money. First LED, and then LCD displays became available, both in expensive and inexpensive versions. For a whole generation of kids, their first electronic devices were digital calculators and watches. There had been digital clocks before, based on turning wheels or flipping tiles, but the difference here was that the electronic numbers did not look like regular numbers. Nobody had ever seen numbers rendered as this kind of generic combinatorial figure before. Every kid quickly learned how to spell out words by turning the numbers upside down (you couldn’t make much: 710 77345 spells ShELL OIL), sort of like emoticons.
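The upside-down spelling trick can be sketched in a few lines. This is only an illustration: the digit-to-letter table below is the commonly used one and is an assumption, and the names UPSIDE_DOWN and calculator_word are hypothetical. Turning the display over reverses the reading order and swaps each digit for the letter it resembles.

```python
# Assumed mapping from a digit to the letter it resembles when the
# display is rotated 180 degrees (a common convention, not from the post).
UPSIDE_DOWN = {
    "0": "O",
    "1": "I",
    "3": "E",
    "4": "h",
    "5": "S",
    "7": "L",
    "8": "B",
}


def calculator_word(digits):
    """Read a calculator display upside down.

    Rotation reverses the order of the characters, so the string is
    walked backwards; characters without a mapping (such as spaces)
    pass through unchanged.
    """
    return "".join(UPSIDE_DOWN.get(ch, ch) for ch in reversed(digits))


print(calculator_word("710 77345"))  # prints "ShELL OIL"
```

The small size of the table is the point made in the parenthesis above: with so few usable letters, you couldn’t make much.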

Beneath the surface, however, something had changed. The digital readouts were not even real numbers; they were icons of numbers, and icons which exposed the mechanics of their iconography. Each number was only a combinatorial pattern of binary segments – a specific fraction of the full 8.8.8.8.8.8.8.8. pattern. You could even see the faint outlines of the complete pattern of 8’s if you looked closely, both in LED and LCD. The semiotic process had moved one step closer to the technological and away from the consumer. Making sense of these patterns as numbers was now part of your job, and the language of Arabic numerals became data to be processed.
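To make the idea of a combinatorial pattern of binary segments concrete, here is a minimal sketch. It assumes the conventional seven-segment labeling (a through g), which the post does not spell out, and it leaves out the decimal point; the names SEGMENTS, render, and fraction_lit are illustrative only.

```python
# Conventional seven-segment labels (an assumption; the post names no scheme):
#    a
#  f   b
#    g
#  e   c
#    d
SEGMENTS = {
    "0": "abcdef",
    "1": "bc",
    "2": "abdeg",
    "3": "abcdg",
    "4": "bcfg",
    "5": "acdfg",
    "6": "acdefg",
    "7": "abc",
    "8": "abcdefg",  # every segment lit: the full pattern
    "9": "abcdfg",
}


def render(digit):
    """Return three text rows drawing one digit from its lit segments."""
    lit = SEGMENTS[digit]

    def on(segment, char):
        # Draw the segment's character if it is lit, otherwise a blank.
        return char if segment in lit else " "

    return [
        " " + on("a", "_") + " ",
        on("f", "|") + on("g", "_") + on("b", "|"),
        on("e", "|") + on("d", "_") + on("c", "|"),
    ]


def fraction_lit(digit):
    """Fraction of the full '8' pattern that this digit switches on."""
    return len(SEGMENTS[digit]) / 7


for d in "1738":
    print("\n".join(render(d)))
    print(f"{d}: {len(SEGMENTS[d])} of 7 segments lit")
```

Every digit is a subset of the segments used by 8, which is why the faint outline of the full pattern sits behind each number on the display.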

Since that time, the digital revolution has shaped the making and breaking of world markets. Each financial bubble spread out, Diogenes style, through the banking and finance industry behind a tide of abstraction. Ultra-fast trading which leverages meaningless shifts in transaction patterns has become the new standard, replacing traditional market analysis. From leveraged buyouts in the 1980s to junk bonds, tech IPOs, Credit Default Swaps, and the rest, the world economy is no longer an index or icon of wealth, it is a symbol which refers only to itself.

The advent of 3D printing marks the opposite trend. Where conventional computer printing allowed consumers to generate their own 2D icons from machines running on symbols, the new wave of micro-fabrication technology extends that beyond the icon and the index level. Parts, devices, food, even living tissue can be extruded from symbol directly into material reality. Perhaps this is a fifth level of simulation – the copy with no original which replaces the need for the original…a trophy in Diogenes’ honor.

Thanks For The Glowing Review!

“You really outdid yourself on this one! With this blog post, Multisense Realism moves another step away from conceptual poetry towards a rigorous, model-like vision of reality. Reading it, I felt not unlike Winston Smith reading Emmanuel Goldstein’s “The Theory and Practice of Oligarchical Collectivism”; a wave of recognition illuminating the myriad unexplained phenomena of daily life swept over me.

If God had given Moses an essay like this on the tablets, I think a lot more people would take traditional theism seriously (I certainly would). Were it really written by God, the Bible should read a lot more like an existential user manual (The Idiot’s Guide to Reality, perhaps) than a collection of Aesop’s fables.

I think what you (and some other really inventive thinkers on the internet) are doing is a fundamental shift in the way philosophy (and science) has been done at least since Descartes and maybe since the Greeks. Ultimately, there are two modes and processes of understanding: categorization and association. This polarity has many conceptual analogues: left brain vs. right brain, logical vs. creative, linear vs. non-linear, reason vs. intuition, etc. Most of these dichotomies imply an incommensurability between two modes of thinking, such that categorical thinkers come to distrust associative thought while intuitive people suspect that the linear-minded often can’t see the forest for the trees. It’s the difference between knowledge and wisdom.

The truth is, as with all polarities, the necessity of the opposites demonstrates their fundamental unity as co-dependent modalities. Essentially, philosophy has been in the categorization business for a long, long time. And why not? Philosophy has been a gravity-well for the lefty-ist of left brained people since time immemorial. As such, philosophy has reached a moment of categorical closure. The ideas are all there, laid out and labeled, their necessary dichotomies articulated and argued for. And so, being a dualist is defined by its disassociation from materialism, a utilitarian committed as much to not being a Kantian as he is toward his active belief in preference satisfaction, a nominalist compelled to reject realism as a matter of course. This has been going on a long, long time and, with the internet especially, humanity has essentially exhausted its ideological repertoire.

Multisense Realism steps outside this game, indeed outside of categorical philosophy, to begin articulating the project of association. Instead of enumerating all the ways the various philosophical views are incompatible, MSR seeks to unify them all, by a rigorous teasing out of all the background assumptions that each view tacitly makes which are the actual variables that compel intellectual consent toward one side or the other, fundamentally. Since beginning to think about MSR, what has struck me is the way that ALL serious philosophical (and even scientific, and perhaps mythic) views can be right, or “right enough.” MSR presents a unification of human thought that is threatening to the left-brain imperative to be categorical (not to be confused with the categorical imperative, natch). Up to now, philosophy has been unearthing conceptual dualities, not unifying them. The challenge of unification, the reason it hasn’t been attempted until recently, is, among other factors, that the pieces of the puzzle were too jumbled and disorganized (and undiscovered). Like working on a jigsaw, you first start to organize your pieces by color, you look for the edges, you begin to see structure and shapes, and only then can you start to make the image click into place. To propel the mind to a high enough vantage point for an adequate intellectual survey, the whole of human thought needed to be seen together, linked through time and history; only then could the answer to the riddle emerge in consciousness.

The price to pay for this (or the gift to receive depending on your point-of-view) is that the thing that ultimately has to be negated, or “re-contextualized” in order to generate the ultimate synthesis of MSR is our own sense of self. One’s ontological identity becomes divorced from culture and even zoology. Sense recognizes itself as sense. It’s self-consciousness understanding that it’s JUST consciousness of self. The end point is the same as the beginning. The modern intellectual tradition places a kind of conceptual barricade around the self, a fundamental sense that one can carve the world into “real” things and “me.” (Calling this the ego is a tad simplistic, but it will suffice.) That tree is in the world, but my thought “that tree is pretty” is in “me.” My thoughts, my feelings, my physical sensations are not objective objects in the universe. You can explain the phenomena of the “objective” world, or you can explain the phenomena of the human world. You can even do science about both. What you can’t do, up until now, is bridge the conceptual gap between the two. And make no mistake, what keeps the bridge from being built is not only ignorance (though there is plenty of that), but anxiety.

(A quick digression: Might this distinction between association and categorization be present in our phenomenology? Sensations like color, pain, taste have a categorical quality, they are what they are. But perhaps space is inherently associational. It’s categorical qualities “laid out” in a topological framework that is associational through and through. “This is larger than that.” “The green is on the bottom, the red is on top.” “The pyramid is far away.” It’s far away BECAUSE it is small and it is small because of its location in my associative manifold, aka spatial field. Seems like there is lots of room for development here.)

In human history it is the spiritual traditions that have offered a model of the world which bridges the epistemological gulf of “me” and “it,” but that mode of thinking has always been intuitive (or authoritarian) and rooted in the “faith” of experience as a guide to truth. (Religion is, in many ways, a resignation that we don’t know what the hell is going on. The “certainty” of religious believers masks a radical uncertainty about the world.) Most contemporary spiritual people believe that science and religion are fully reconcilable, but they rarely propose serious conceptual models that explain how. Secular people, conversely, often can’t even see that the problem is there at all, and for good reason; to see it threatens the self which protects the system from the existential disequilibrium that comes from objectifying one’s subjectivity. Make no mistake, there are strong psychological forces at play that keep minds from wanting to understand themselves in a “systematic” way. The “motive-participation” force (aka what I call “the Will”) wants always to inflate its sense of control over the world, but objectifying the subjective demands a willingness to comprehend more realistically how a mind’s current state was determined by the past. Not YOUR past, THE past. Sensing a threat here, many stoic-minded people jump to the end and admit defeat: determinism has to be true. But determinism is a psychological escape hatch, essentially a belief that ends conversation and ultimately eliminates the self’s persistent sense of ultimate responsibility while never directing behavior the way other “actual” beliefs do. Thoroughgoing determinism is a belief that operationally can’t be believed.

But MSR is a different beast. Here, the determined past from the big bang till the present has generated your current conscious state, and as such you are deeply chained and bound, but the principle of freedom, of motive and participation, remains. Experience takes on a quality of hysterical contingency: “I’m here now. I can do what I want. But I don’t seem to have proper information to know what I should want, let alone do, nor how I got here.” Historically, at this existential impasse one either goes mad or becomes enlightened. Either way, there is usually a distancing in the subject from the normal games of human civilization; the carnival of history is always located under the tent of ego and its myriad (and intrinsic) ontological and epistemological illusions. These are usually subconscious, or, as I like to think, hyper-conscious, that is, the beliefs of the ego so infuse the regular coordinates of most human experience that they “color” the whole of consciousness and as such are difficult to see directly as the “artificial” constructs they are. What is changing is that the loose and koan-like language of spiritual thought is beginning to be complemented by a rigorous, deductive, “analytical” vocabulary which, though approaching reality from a different direction, is edging toward (some) overlapping conclusions.

The words we use tell us everything we need to know. These days, physicists, philosophers, and many others openly talk about their desire to understand “the universe.” When we think about the great mysteries, it’s the mysteries of the “universe” that we think we are interested in. If you talk to an average Joe on the street and he says he enjoys reading books on “the universe” or thinking about the nature of “the universe” we might think he’s a bit more intellectually curious than most but we find his interest-set benign and healthy. But, by framing the mystery as “the universe” the key conceptual move is already made. Once you employ a Sicilian defense, you’re never going to be able to play an open game. The universe, in its subtle way, objectifies the phenomena in question from the start and implies that space and time are absolute features of the system, beyond which description can’t go. It’s the THING you find yourself IN. Belief in the existence of “the universe” JUST IS believing in space and time as your fundamental Bayesian priors. (This is why the religious and the secular just talk past each other, it’s not a question of argument or “evidence,” it’s which Bayesian prior sprouts their entire belief tree.)

If someone says they are interested in “reality” however, we feel much more uncomfortable. Within this concept is a kind of existential depth that immediately threatens. The ego likes to feel that, although we may not know the secrets of the universe, certainly we have an adequate grasp of “reality.” The very existence of the concept “reality,” with its totalizing undeniability, implies a kind of conceptual truth at the heart of things, a truth which, intuitively, can’t be contained by a “universe” or “a multiverse” or whatever rococo spatiotemporal model you choose to employ. Reality suggests a unity, not a plurality.

Sorry, I meant to reference the contents of your post directly more, but I needed to vomit out all that first I guess. So much more to think about, but, that’s all I’ll say for now. When I read this post a few more times, I’m sure I’ll come up with some questions and thoughts.” – phiguy110

What a great comment/review! Thanks. I’m going to post it on my blog (http://s33light.org) and here if you don’t mind. The part about puzzle pieces is something that I have thought about frequently myself. I feel like my purpose with MSR is to collect the corner pieces and put together as much of the frame as I can. I have found that it’s hard to give a good account of the frame of the puzzle without being accused of not having completed the whole thing. Part of that I think is, as you said, that this new approach uses rigorous language which threatens our expectations. If it’s not a completed theory that produces a new kind of spacecraft then you shouldn’t try to sound like you know what you are talking about.

I very much like the parts about stepping outside of the philosophical dialectic, as that’s really the first and most important place to start and I’m not sure that anyone else has even mentioned it. The more important aspect of the mind body problem is not whether they are the same or different or one is part of the other, but that the seeming differences fit into each other like a lock and key. That philosophy of mind’s own polarity of mechanistic materialism vs anthropomorphic idealism fits like the *same* lock and key could not be a bigger deal, yet it is overlooked (in opposite styles, of course) by both extremes.

Your thoughts on space are right on the money also; it’s just kind of hard to express what makes spatial-public experiences different from all of the other kinds of experiences, and how visual sense is the most public facing sense for a reason. There is a kind of exponential slope in the way that qualia drops off into its opposite. Space is zero privacy, so its absolute inspect-ability is identical to its interstitial adhesiveness. The metric is purely adhesive non-entity which is inferred through the cohesiveness of morphic entities in comparison with each other.

As far as religion goes, I spent a lot of my life at a loss to explain what is wrong with human beings that they would believe these bizarre stories. Later on, I realized that religions did have a certain amount of wisdom encrypted in metaphor. It wasn’t until I started stepping out of the whole dialectic that I realized that everything that people say about God really applies to our own consciousness, only idealized to a superlative extreme. That of course is a ‘meta’ thing, since idealizing is actually one of the most significant things that consciousness does (significance itself being idealization and meta-idealization). Within all of the religious hyperbole is a portrait of hyperbole itself, of consciousness, and its role as sole universal synthetic a priori. They just got the metaphor upside down. It’s not a God who is omniscient and omnipotent, it is sense and motive (or Will) which are represented as God or as math-physics.

Hypostitious Minds

Once upon a time, the belief in witchcraft was about as common as the belief in using soap.

…how pervasive was belief in witchcraft in early modern England? Did most people find it necessary to use talismans to ward off the evil attacks of witches? Was witchcraft seen as a serious problem that needed to be addressed? The short answer is that belief in witchcraft survived well into the modern era, and both ecclesiastical and secular authorities saw it as an issue that needed to be addressed.

In 1486, the first significant treatise on witchcraft, evil, and bewitchment, Malleus Maleficarum, appeared in continental Europe. A long 98 years later, the text was translated into English and quickly ran through numerous editions. It was the first time that religious and secular authorities admitted that magic, witchcraft, and superstition were, indeed, real; it was also a simple means of defining and identifying people who performed actions seen as anti-social or deviant.

Few doubted that witches existed, and none doubted that being a witch was a punishable offense. But through the early modern era, witchcraft was considered a normal, natural aspect of daily life, an easy way for people, especially the less educated, to explain events in the confusing world around them. – (source)

In many parts of the world, the belief in witchcraft is still very common.

(source)

“As might be expected, the older and less educated respondents reported higher belief in witchcraft, but interestingly such belief was inversely linked to happiness. Those who believe in witchcraft rated their lives significantly less satisfying than those who did not.

One likely explanation is that those who believe in witchcraft feel they have less control over their own lives. People who believe in witchcraft often feel victimized by supernatural forces, for example, attributing accidents or disease to evil sorcery instead of randomness or naturalistic causes.” (source)

Another poll on beliefs in the U.S.:

What People Do and Do Not Believe in

Many more people believe in miracles, angels, hell and the devil than in Darwin’s theory of evolution; almost a quarter of adults believe in witches

New York, N.Y. – December 15, 2009 –

A new Harris Poll finds that the great majority (82%) of American adults believe in God, exactly the same number as in two earlier Harris Polls in 2005 and 2007. Large majorities also believe in miracles (76%), heaven (75%), that Jesus is God or the Son of God (73%), in angels (72%), the survival of the soul after death (71%), and in the resurrection of Jesus (70%). Less than half (45%) of adults believe in Darwin’s theory of evolution but this is more than the 40% who believe in creationism. These are some of the results of The Harris Poll of 2,303 adults surveyed online between November 2 and 11, 2009 by Harris Interactive. The survey also finds that: 61% of adults believe in hell; 61% believe in the virgin birth (Jesus born of Mary); 60% believe in the devil; 42% believe in ghosts; 32% believe in UFOs; 26% believe in astrology; 23% believe in witches; 20% believe in reincarnation (source)

Because there are so many benefits associated with freedom from superstition, it is not much of a tradeoff emotionally to go from a world of mystical phantoms to one of scientific clarity. It may be too intellectually challenging or difficult for a lot of people to get the opportunity to be exposed to scientific knowledge in the right way, at the right time in their life, but it seems that if they do seize that opportunity, they are happy with their decision. Of course, not everyone makes a decision to block out all religious or spiritual beliefs when they accept scientific truths, and even though there are probably more people alive today who believe in sorcery than there were in 1600, there are more people now who also believe in germs, powered flight, and heliocentric astronomy.

There is little argument that scientific knowledge and its use as a prophylactic against the rampant spread of superstition is a ‘good thing’. Is it possible though, to have too much of a good thing? Is there a limit to how much we should insist upon determinism and probability to explain everything?

The Over-Enlightenment

The City Dark is a recent documentary about the subtle and not-so-subtle effects of light pollution. Besides new and unacknowledged health dangers from changed sleeping habits in people and ecological upheaval in other species, the show makes the case that the inescapable blur of light which obscures our view of the night sky is quietly changing our view of our own lives. The quiet importance of the vast heavens in setting our expectations and limiting the scale of our ego has been increasingly dissolved into a haze of metal halide. In just over a century, night-time illumination has gone from a simple extension of visibility into the evening to a 24-hour saturation coverage of uninhabited parking lots, residential neighborhoods, and office buildings. The connection between the power to see, do, and know is embodied literally in our history as the Enlightenment, Industrial Age, and Information Age.

The 20th century was the cusp of the Industrial and Information ages, beginning with Edison and Einstein redefining electricity, light, and energy, peaking with the midcentury Atomic age when radiation became a household word and microwave ovens began to cook with invisible light rather than heat. Television became an artificial light source which we used not only as silent companions with which to see the world, but as a kind of hypnotic signal emitter which we stare directly into – the home version of that earlier invention which came into its own in the 20th century, the motion picture. The century which tracked the spread of electricity and light from urban centers to the suburbs ended with the internet and mobile phones bringing CRT, LED, and LCD light into our personal space. Where once electronic devices were confined to living rooms and cars, we are now surrounded by tiny illuminated dots and numbers, and a satellite connection is hardly ever out of arm’s reach.

A Life Sentence

In Foucault’s Discipline and Punish, he details the history of prison and the rise of disciplinary culture in Europe as it spread from monasteries through the hospitals, military, police, schools, and industry. He discusses how the concept of justice evolved from the whim of the king to torture and publicly execute whoever he pleased, to kangaroo courts of simulated justice, to the modern expectation of impartiality and evidence in determining guilt.

The shift of punishment style from dismemberment to imprisonment reflected the change in focus from the body to the mind. The Reformation gave Western Europe a taste of irreverence and self-determination; at the same time, the monastic lifestyle was adopted throughout pre-Modernity. To be a hospital patient, student, soldier, prisoner, or factory laborer was to enter a world of strict regulation, immaculate uniforms, and constant inspection. Inspection is a central theme which Foucault examines. He describes how an obsessive regimen of meticulous inspection, monitoring, and standardized testing reached an ultimate expression in the panopticon architecture. Through this central-eye floor plan, the population is exposed and personally vulnerable while the administration retains the option to remain concealed and anonymous.

Circumstantial Evidence

Tying these themes of inspection, enlightenment, and illumination together with witchcraft is the concept of evidence. What could be more scientific than evidence?

evident (adj.)

late 14c., from Old French evident and directly from Latin evidentem (nominative evidens) “perceptible, clear, obvious, apparent” from ex- “fully, out of” (see ex-) + videntem (nominative videns), present participle of videre “to see” (see vision)

The Salem Witch Trials famously victimized those who were targeted as witches by subjecting them to what seem to us now as ludicrous tests. This gives us a good picture of the transition from pre-scientific to scientific practices in society. This adolescent point between the two reveals a budding need to rationalize harsh punishments intellectually, but not enough to prevent childish impatience and blame from running the show.

The idea of circumstantial evidence – evidence which is only coincidentally related to a crime – marks a shift in thinking which is echoed in the rise of the scientific method. As the mindset of those in power became more modern, the validity of all forms of intuition and supernatural sources came into question. Where once witchcraft and spirits were taken seriously, now there was a radical correction. It was belief in the supernatural which was revealed to be obsolete and suspicious. The default position had changed from one which assumed spirits and omens to one which assumed coincidence, exaggeration, and mistaken impressions. Beyond even the notion of innocent until proven guilty, it was the notion that proof mattered in the first place which was the Enlightenment’s gift to the cause of human liberation.

Few would dispute that this new dis-belief system, which brought us out of savagery, is a good thing, but, as Foucault intimates, we cannot assume that it is all good. Is incarceration really the humane and effective way of discouraging crime that we would like to think, or is it a largely hypocritical enactment of a fetish for control? Does the desire to predict and control lead to an insatiable desire to dictate and invade others?

Cold Readings

There have been many exposés on psychics and mediums over the years where stage magicians and others have run down the kinds of tricks that can be used to gather unexpected intelligence from an audience and use it to fool them. The cold reading is a way of cheating a mark into thinking that the psychic has supernatural powers, when in fact an assistant has looked through the mark’s purse earlier.

Ironically, these techniques are the same techniques used in science, except that they are intended to reveal the truth rather than instigate a fraud. Statistical analyses and reductive elimination are key aspects of the scientific method, giving illumination to hidden processes. In neuroscience, for instance, an fMRI is not really telling us about how a person thinks or feels; rather, the physiological changes that we can measure are used to produce a kind of cold reading of the subject’s experience, based on our own familiarity with our personal experience.

This is all fantastic stuff, of course, but there seems to be a point where the methods of logical inference from evidence cross over into their own kind of pathology. The etymology of superstition talks about “prophecy, soothsaying, excessive fear of the gods”. The suffix ‘-stition’ is from the same root as ‘-standing’ in understanding. There is a sense of the mind compulsively over-reaching for explanations, jumping to conclusions, and rendered stupid by naivete.

The converse pathology does not have a popular name like that, although people use the word pseudoskeptical to emphasize a passionately prejudiced attitude toward the unproved rather than a scientifically impartial stance. The neologism I am using here, hypostition, puts the emphasis on the technical malfunction of the scientific impulse run amok. Where superstition is naive, hypostition is cynical. Where superstition jumps to conclusions, hypostition resists any conclusion, no matter how clear and compelling, in which the expectations of the status quo are called into question.

Tests in Life

Much of what is meant by witchcraft can be boiled down to an effort to access secret knowledge and power. The witch uses divination to receive guidance and prophecy intuitively, often by studying patterns of coincidence and invoking a private intention to find its way to a public expression. Superstition swims in the same waters, reading into coincidence and projecting its own furtive impulses outwardly. Beyond that, we are talking about herbal medicine, folk psychology, and rituals mythologizing nature.

The goal of science and technology is similarly an effort to extract knowledge and power from nature, but to do so without falling into the trap of magical thinking. Instead of making a pact with occult forces, the scientist openly experiments to expose nature. Along the way, there are often lucky coincidences which lead to breakthroughs, and challenges which seem tailor made to derail the work. These trials and tribulations, however, are not supported by science. If we adopt the hypostitious frame of mind, there can be no narrative to our experience, no fortunate people, places, or times, beyond the allowable margins of chance.

We have come full circle on coincidence, where we are obliged to doubt even the most life-altering synchronicity as mere statistical inevitability.

In place of superstition we have neuroses. Our triumph over the fear of the unknown has become an insidious phobia of the known. Even to recognize this would be to admit some kind of narrative pattern in human history. Recognition of such a pattern is discouraged. The tests which we face are not allowed to make that kind of sense, unless they can be justified by the presence of a chemical in the body, or a behavior in another species.

A Way Out

For me, the recognition of the two poles of superstition and hypostition is enough to realize that the way forward is to avoid the extremes most of the time. Intuition and engineering both have their place, and the key is not to always try to squeeze one into the other. At this point, the world seems to be nightmarishly extreme in both directions at the same time, but maybe it has always seemed that way?

The challenge I suppose is to try to find a way to escape each other’s insanity, or to contribute in some way toward improving what we can’t escape from. With some effort and luck, our fear of the dark and insensitivity to the light might be transformed into a full range of perception. Nah, probably not.

Superpositioned Aion Hypothesis

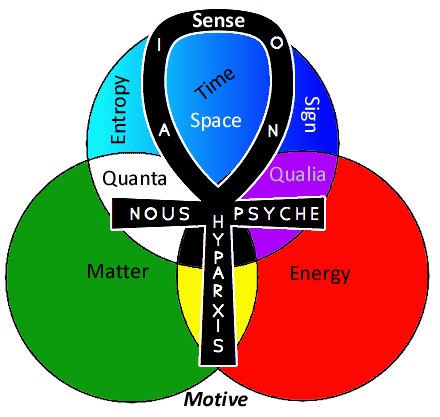

Above, an ankh appropriated from here, superimposed on the MSR diagram. I have included Sense and Motive, reflecting the circuitous, super-personal nature of the Aion/Eternity (collective/Absolute sense) and the cross of what Bennett calls ‘Time’ (I call personal sensory awareness) vs what he calls Hyparxis (“an ableness-to-be”, aka motive participation).

“The English philosopher John G. Bennett posited a six-dimensional Universe with the usual three spatial dimensions and three time-like dimensions that he called time, eternity and hyparxis. Time is the sequential chronological time that we are familiar with. The hypertime dimensions called eternity and hyparxis are said to have distinctive properties of their own. Eternity could be considered cosmological time or timeless time. Hyparxis is supposed to be characterised as an ableness-to-be and may be more noticeable in the realm of quantum processes.” – source

- Aion and Gaia with four children, perhaps the personified seasons, mosaic from a Roman villa in Sentinum, first half of the 3rd century CE, (Munich Glyptothek, Inv. W504)

The word aeon, also spelled eon, originally means “life” or “being”, though it then tended to mean “age”, “forever” or “for eternity”. It is a Latin transliteration from the koine Greek word (ho aion), from the archaic (aiwon). In Homer it typically refers to life or lifespan. Its latest meaning is more or less similar to the Sanskrit word kalpa and Hebrew word olam. A cognate Latin word aevum or aeuum for “age” is present in words such as longevity and mediaeval. source

The universe seems to want to be understood in two contradictory presentations at once:

1. As the timeless eternity within which experiences are rationed out in recombinations of irreducible elements.

2. As the creative flow of authentically novel experiences, whose recombination is impossible.

If we can swallow the idea of superposition on the microcosmic level, why not the astrophysical-cosmological level? Whether the universe seems to be gyrating in a direction that paints your life in a meaningful and integrated light, or it has you struggling to swim against a sea of chaotic scorns is as universal an oscillator of probabilities as any quantum wavefunction.

These fateful cyclings of our personal aion are reflected also in the micro and macro of the Aion at large. With the unintentional dice game of quantum mechanics flickering in and out of existence far beneath us, and fate’s private wheel of fortune seemingly spinning in and out of our favor intentionally just beyond us, the ultimate superposition is that of the eternal and the new.

How can the universe be a relativistic body in which all times and spaces are objectively present, and an expanding moment of being which not only perpetually imagines new universes, but imagines imagination as well? Why not apply superposition?

“In physics and systems theory, the superposition principle, also known as superposition property, states that, for all linear systems, the net response at a given place and time caused by two or more stimuli is the sum of the responses which would have been caused by each stimulus individually. So that if input A produces response X and input B produces response Y then input (A + B) produces response (X + Y).” source
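As a rough illustration of the quoted principle, the relation can be written out with a hypothetical response map $F$ (the name $F$ is an assumption for illustration; $A$, $B$, $X$, and $Y$ are taken from the quote). The second line is a simple arithmetic case in which the sum rule fails, to show what the word "linear" is ruling out:

```latex
% Additivity as stated in the quote, for a linear response map F (F is an illustrative name):
F(A) = X,\quad F(B) = Y \;\Longrightarrow\; F(A + B) = F(A) + F(B) = X + Y

% A nonlinear map, e.g. G(a) = a^2, does not superpose:
G(1 + 2) = 3^2 = 9 \;\neq\; G(1) + G(2) = 1 + 4 = 5
```

The quote states only this additive part. Textbook definitions of linearity usually also require that scaling an input scales the response by the same factor.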

The universe is generic and proprietary, eternally closed but momentarily open and eternally open but momentarily closed. It/we are always changing in some sense, but remaining the same in every other. Within the Aion, all opposites are superpositioned – bothness, neitherness, and only-one-and-not-the-other-ness.

Consciousness, in Black and White

It occurs to me that it might be easier to explain my view of consciousness and its relation to physics if I begin at the beginning. In this case, I think that the beginning was in asking ‘What if the fundamental principle in the universe were a simple form of awareness rather than something else?’

Our choices in tracing the lineage of consciousness back seem to be limited. Either it ‘emerged’ from complexity, at some arbitrary stage of biological evolution, or its complexity evolved without emergence, as elaboration of a simple foundational panpsychic property.

In considering which of these two is more likely, I suggest that we first consider the odd, unfamiliar option. The phenomenon of contrast is a good place to start to characterize the theme of awareness. Absolute contrasts are especially compelling. Full and empty, black and white, hot and cold, etc. Our language is replete with evidence of this binary hyperbole. Not only does it seem necessary for communication, but there seems also to be an artistic satisfaction in making opposites as robust as possible. Famously this tendency for exaggeration clouds our thinking with prejudice, but it also clarifies and makes distinction more understandable. In politics, mathematics, science, philosophy, and theology, concepts of a balance of opposites can be found as the embodiment of their essential concepts.

For this reason alone, I think that we can say with certainty that consciousness has to do with a discernment of contrasts. Beneath the linguistic and conceptual embodiments of absolute contrasts are the more zoological contrasting pairs – hungry and full, alive and dead, tired and alert, sick and healthy, etc. At this point we should ask, is consciousness complex or is it simple? Is the difference between pain and pleasure something that should require billions of cellular interactions over billions of years of evolution to arrive at accidentally, or does that seem like something which is so simple and primordial that nothing could ever ‘arrive’ at it?

Repetition is a special form of contrast, because whether it is an event which repeats cyclically through a sequence or a form which repeats spatially across a pattern, the underlying nature of what repeats is that it is in some sense identical or similar, and in another sense not precisely identical as it can be located in memory or position as a separate instance.

I use the phrase “repeats cyclically through a sequence” instead of “repeats sequentially through time” because if we take our beginning premise of simple qualities and capacities of awareness as preceding even physics, then the idea of time should be grounded in experience rather than an abstract metric. Instead of conceiving of time as a dimension in which events are contained, we must begin with the capacity of events to ‘know’ each other or in some way retain their continuity while allowing discontinuity. An event which repeats, such as a heartbeat or the circadian rhythms of sunlight, is fundamentally a rhythm or cycle. That is the actual sense experience. Regular, frequent, variation. Modulation of regularity.

Likewise, I use the phrase “repeats spatially across a pattern” instead of “repeats as a pattern across space” because again, we must flip the expectation of physics if we are to remain consistent with the premise of sense-first. What we see is not objects in space, it is shapes separated by contrasting negative shapes. What we can touch are solids, liquids, and gases separated from each other by a contrasting sense of their densities. Here too, the sense of opposites dominates, separating the substantial from the insubstantial, heavy from light, hard from soft.

An important point to make here is that we are adapted, as human beings with bodies of a particular density and size, to feel the world that relates appropriately to our body. It is only through the hard lessons like plague and radiation that we have learned that indeed things which are too small for us to see or feel can destroy our bodies and kill us. The terror of this fact has inspired science to pursue knowledge with an aggressive urgency, and justifiably so. Scientists are heroes, informing medicine, transportation, public safety, etc., as never before in the history of the world and inspiring a fantastic curiosity for knowledge about reality rather than ideas about God or songs about love. The trauma of that shattering of naive realism haunts our culture as a whole, and has echoes in the lives of each generation, family, and individual. Innocence lost. The response to this trauma varies, but it is hard to remain neutral about. People either adapt to the cold hard world beyond themselves with fear or with anger. It’s an extension of self-consciousness which seems uniquely human and often associated with mortality. I think that it’s more than confronting their own death that freaks out the humans, it’s the chasm of unknowable impotence which frames our entire experience on all sides. We know that we don’t really know.

The human agenda becomes not merely survival and reproduction, but also to fill the existential chasm with answers, or failing answers, to at least feel fulfilled with dramatic feelings – with entertainments, achievements, and discoveries. We want something thrilling and significant to compensate for our now unforgettable discovery of our own insignificance. With modernism came a kind of Stockholm syndrome turn. We learned how to embrace the chasm, or at least to behave that way.

At the same time that Einstein began to call the entire foundation of our assumptions about physics into question, the philosophy of Nietzsche, along with the science of Darwin and Freud, had begun to sink in politically. Revolutions from both the Left and Right rocked the world, followed in some nations by totalitarianism and total war. The arts were transformed by an unprecedented radicalism as well, from Duchamp, Picasso, and Malevich to Stravinsky and Le Corbusier. After all of the pageantry and tradition, all of the stifling politeness and patriarchy, suddenly Westerners stopped giving a shit about the past. All at once, the azimuth of the collective psyche pitched Westward all the way, toward annihilation in a glorious future. If humans could not live forever, then we would become part of whatever does live forever. The human agenda went transhuman, and everyone became their own philosophical free agent. God was indeed dead. For a while. But the body lives on.

The point of this detour was to underscore the importance of what we are in the world – the size and density of our body – to what we think that the world is. Not only do we only perceive a narrow range of frequencies of light and sound, but also of events. Events which are too slow or too fast for us to perceive as events are perceived as permanent conditions. What we experience exists as a perceptual relativity between these two absolutes. Like the speed of light, c, perception has aesthetic boundaries. Realism is personal, but it is more than personal also. We find agreement in other people and in other creatures which we can relate to. Anything which has a face earns a certain empathy and esteem. Anything that we can eat has a significance to us. Sometimes the two overlap, which gives us something to think about. Consciousness, at least the consciousness which is directed outwardly from our body, is all about these kinds of judgment calls or bets. We are betting that animals that we eat are not as significant as we are, so we enjoy eating them, or we are betting that such a thought is immoral so we abstain. Society reflects back these judgments and amplifies them through language, customs, belief systems, and laws. Since the modernist revolution, the media has blanketed the social landscape with mass production of cliches and dramatizations, which seems to have wound up leaking a mixture of vanity and schadenfreude, with endless reenactments, sequels, and series.

It is out of this bubble of reflected self-deflection that the current philosophies rooted in both reductionism and emergentism find their appeal. Beginning with the assumption of mechanism or functionalism as the universal principle, the task of understanding our own consciousness becomes a strictly empirical occupation. Though the daunting complexity of neuroscience cannot be overstated, the idea is that it is inevitable that we eventually uncover the methods and means by which data takes on its fancy experiential forms. The psyche can only be a kind of evolutionary bag of tricks which has developed to serve the agenda of biological repetition. Color, flavor, sound, as well as philosophy and science are all social peacock displays and data-compressing virtual appendages. The show of significance is an illusion, an Eloi veneer of aesthetics over the Morlock machinations of pure function.

To see oneself as a community of insignificance in which an illusion of significance is invested is a win-win for the postmodern ego. We get to claim arbitrary superiority over all previous incarnations, while at the same time claiming absolute humility. It’s a calculated position, and like a game theory simulation, it aims to minimize vulnerability. Facts are immutable and real, experiences are irrelevant. From this voyeuristic vantage point, the holder of mechanist views about free will is free to deny that he has it without noticing the contradiction. The emergent consciousness can speak glowingly out of both sides of its mouth of its great knowledge and understanding in which all knowledge and understanding is rendered void by statistical mechanics. Indeed the position offers no choice, having backed itself into a corner, but to saw off its own limbs with one hand and reattach them with another when it is not looking.

What is gained from this exercise in futility beyond the comfort that comes with conformity to academic consensus is the sense that whatever happens, it can be justified with randomness or determinism. The chasm has been tamed, not by filling it in or denying it, but by deciding that we are simply not present in the way that we think. DNA acts, neurons fire, therefore we are not thinking. Death is no different than life which has paused indefinitely. An interesting side effect is that as people are reduced to emergent machines, machines are elevated to sentient beings, and the circle is complete. We are not, but our products are. It seems to me the very embodiment of suburban neuroses. The vicarious society of invisible drones.

Just as 20th century physics exploded the atom, I would like to see 21st century physics explode the machine. Instead of releasing raw energy and fragmentation, I see that the blasting open of mathematical assumptions will yield an implosion into meaning. Pattern recognition, not information, is the true source of authenticity and significance. They are the same thing ultimately. The authenticity of significance and the significance of authenticity speak to origination and individuation over repetition. Not contrast and dialectic, not forces and fields, but the sense in which all of these facets are yoked together. Sense is the meta-syzygy. It is the capacity to focus multiplicity into unity (as in perception or afference) and the capacity for unity to project into multiplicity (participation or efference).

These are only metaphorical descriptions of function however. What sense really is and what it does can only be experienced directly. You make sense because everything makes sense…in some sense. That doesn’t happen by accident. It doesn’t mean there has to be a human-like deity presiding over all of it. To the contrary, only half of what we can experience makes sense intentionally; the other half (or slightly less) makes sense unintentionally, as a consequence of larger and smaller sequences which have been set in motion intentionally. We are the evidence. Sense is evident to us and there is nothing which can be evident except through sense and sense making.

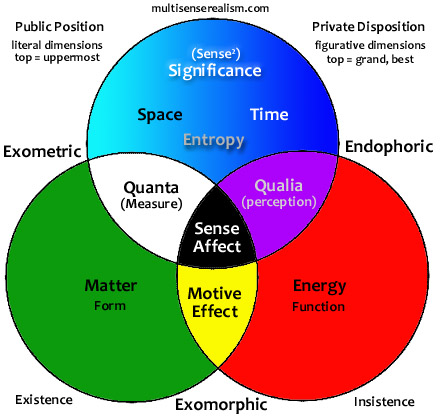

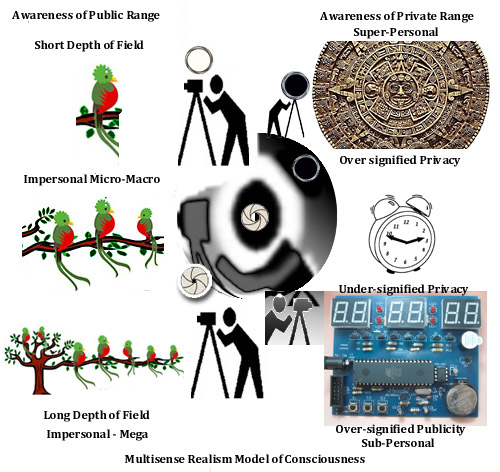

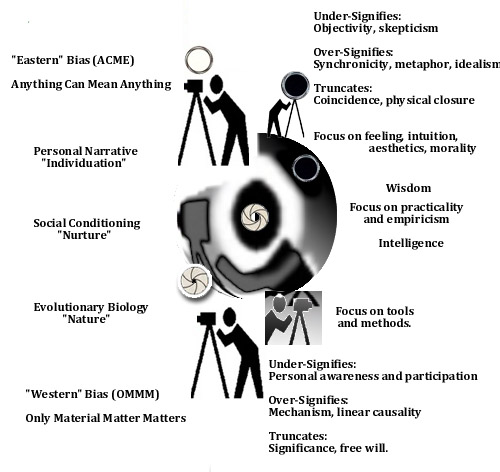

Public Space, Private Time, and the Aperture of Consciousness

In the first diagram, I’m trying to show the relation between public and private physics, and how the aperture of consciousness modulates which range is emphasized. Contrary to the folk model of time that we currently use, multisense realism proposes that time is only conceivable from the perspective of an experiential narrative. Time cannot be translated literally into the public range of experience, only inferred figuratively by comparing the positions of objects.

Through general relativity, we can understand spacetime as a single entity defined by gravity and acceleration – to quote Einstein, a

“non-rigid reference-body, which might appropriately be termed a “reference-mollusk,” is in the main equivalent to a Gaussian four-dimensional co-ordinate system chosen arbitrarily”.

While space and time can indeed be modeled that way successfully, what has been overlooked is the opportunity to see another profoundly fundamental symmetry. What GR does is to spatialize time. This is a great boon to physics, since physics has focused exclusively on public phenomena (for good reason, initially). GR has enabled accurate computations on astronomical scales, taught us how to make cell phone networks work on a global scale, send satellites into orbit, etc. Einstein accomplished this by collapsing the subjective experience of time passing (which can change depending on how you feel about what’s going on) into a one-dimensional vector of ‘observation’. Not any special kind of observation, just a point of reference without aesthetic dimensions of feeling, hearing, tasting – only a generic sense of position and acceleration. This is the public perspective of privacy, i.e. not private at all, but a footprint which points to the privacy which has been overlooked but assumed.

This is great for modeling some aspects of public phenomena, but in reality, there is no actual public perspective that we can conceive of. There is no voyeur’s view from nowhere which defines perspectives without any mode of sensory description. That view from ‘out there’ is purely an intellectual abstraction, a hypothetical vantage point. Why is this a big deal? It’s not, until you want to really understand subjectivity in its own terms – private terms. By spatializing time, GR strips out the orthogonal symmetry of space vs time which we experience and redefines it as an illusion. Our native experience of time is as much the opposite of space as it is similar. Time is autobiographical, it is memory and anticipation. We can stay in the same place while time passes. Our time also moves with us, with our thoughts and actions.

Space, by contrast, is a public field in which we are tangibly located. If we want our thoughts to stay somewhere, we must leave some material trace – write a note or make a sign. When we want to meet someone, establishing the spatial coordinate for the meeting is based on a literal location – a physical address or reference (by the palm tree in the South Square Mall). The time coordinate is more figurative. We look at clocks with made up numbers which we have intentionally synchronized, or pick an event in our shared narrative experience (after the movie is over). If our watches are wrong, it doesn’t matter as long as they are both wrong in the same way. If we actually need to be at a specific palm tree, it doesn’t matter if we are both wrong in the same way, we will still be at the wrong location. Time, in this sense, is a social convention, while space is an objective fact.

Looking at the diagram, I have put this sense of time as a social convention in the center right, as the clip art alarm clock. This is the familiar sense of time as personal commodity. Running out of time. The bells emphasize the intrusive nature of this face of time – our behavior is constrained by conflicting agendas between self and others, home and school or work, etc. There is a pie to be allotted and when the clock strikes X, the agenda is expected to follow the X schedule. The label just under this clock marks the point of punctuality, where the time that you care about personally no longer matters, and the public expectation of time takes over.

Above this personal, work-a-day agenda sense of time, I have included a Mayan calendar to reference a super-personal sense of time. Time which stretches from eternity to the eternal now. Time which is measured in fleeting flashes and awe-inspiring syzygies. Time as cosmological poetry, shedding light on experience through experience. This is time as a dance with wholeness.

Beneath the alarm clock I have used the guts of a digital clock to emphasize the sub-personal sense of time. The alarm clock face of time collapses the mandala-calendar’s eternal cycle into personal cycles, but the digital clock breaks down even the numbers themselves into spatial configurations. Time is no longer moving forward or even cycling, but blinking on and off instantaneously.

This all correlates to the diagram, where I tried to juxtapose the public space side of the camera with the private experience side. The subjective disposition of our awareness contracts and dilates to influence our view. At the subjective extreme, the view is near sighted publicly and far sighted privately. For the objective-minded individuals and cultures, the view outside is clear and deep, but the interior view is purely technical. The little icons have some subtle details that came out serendipitously too – with the headless guy on top vs the camera guy on the bottom, but I won’t go into that…rabbit hole alert. The last few posts on psychedelics and language relate…it’s all about how spacetime extends intentionality from private aesthetics to public realism through diffraction of experience.

Why can’t the world have a universal language? Part II

This is more of a comment on Marc Ettlinger’s very good and thought provoking answer (I have reblogged it here and here). In particular I am interested in why pre-verbal expressions do not diverge in the same way as verbal language. I’m not sure that something like a smile, for instance, is literally universal to every human society, but it seems nearly so, and even extends to other animal species, or so it appears.

What’s interesting to me is that you have this small set of gestures which are even more intimate and personal than verbal signals – more inseparable from identity, which then gets expressed in this interpersonal linguistic way which is at once lower entropy and higher entropy. What I mean is that language has the potential both to carry a more highly articulated, complex meaning, but also to carry more ambiguity than a common gesture.

When a foreigner tries to communicate with a native without having common language, they resort to pre-verbal gestures. Rather than developing that into a universal language, we, as you say, opt for a more proprietary expression of ourselves, our culture, etc… except that in close contact, the gestures would actually be just as personally expressive if not more. There’s all kinds of nuance loaded into that communication, of individual personality as well as social and cultural (and species) identity.

So why do we opt for the polyglot approach for verbal symbols but not for raw emotive gestures? I think that the key is in the nature of the boundary between public and private experiences. I think there are two levels of information entropy at work. Something like a grunt or a yell is a very low entropy broadcast on an intra-personal level and a high entropy broadcast on an extra-personal level. If something makes a loud noise at you, whether it’s a person or a bear, the message is clear – “I am not happy with you, go away.” These primal emotions need not be simple either. Grief, pride, jealousy, betrayal, etc might be quite elusive to define in non-emotional terms, full of complexity and counter-intuitive paradox. If we want to communicate something which is about something other than private states of the interacting parties, however, the grunt or scowl is a very highly entropic vehicle. What’s he yelling about? Enter the linguistic medium.

The human voice is perhaps the most fantastically articulated instrument which Homo sapiens has developed, second only to the cortex itself. The hand is arguably more important perhaps, in the early hominid era, but without the voice, the development of civilization would have undoubtedly stalled. It’s like the paleolithic internet. Mobile, personal yet social, customizable, creative. It’s a spectacular thing to have whether you’re hunting and gathering or settling in for a nice long hierarchical management of surplus agricultural production.

The human voice is the bridge between the private identity in a world based on very local and intimate concerns, and a public world of identity multiplicities. To repurpose the lo-fi private yawps and howls with more high fidelity vocalizations requires trading directness and immediacy for a more problematic but intelligent code. One of the key features is that once a word is spoken, it cannot be taken back as easily. A growl can be retracted with a smile, but a word has a ‘point’ to make. It is thermodynamically irreversible. Once it has been uttered in public, it cannot be taken back. A decision has been made. A thought has become a thing.

Inscribing language in a written form takes this even one step further, and there is a virtuous cycle between thought, speech, actions, and writing which was like the Cambrian explosion for the human psyche. Unlike private gestures which only recur in time, public artifacts, spoken or written, are persistent across space. They become an archeological record of the mind – the library is born. Why can’t the world have a universal language? Because we can’t get rid of the ones that we’ve got already, or at least not until recently. Public artifacts persist spatially. Even immaterial artifacts like words and phrases are spread by human vectors as they settle, migrate, concentrate and disperse.

Because language originates out of public discourse which is local to specific places, events, and people, the aesthetics of the language actually embody the qualities of those events. This is a strange topic, as yet virtually untouched by science, but it is a level of anthropology which has profound implications for the physics of privacy itself – of consciousness. Language is not only identity and communication, I would say that it is also a view of the entire human world. Within language, the history of human culture as a whole rides right alongside the feelings and thoughts of individuals, their lives, and their relation with nature as it seemed to them. The power of language to describe, to simulate, and to evoke fiction makes each new word or phrase a kind of celebration. The impact of technology seems to be accelerating both the extension of language and its homogenization. At the same time, as instant translation becomes more a part of our world, the homogenization may suddenly drop off as people are allowed to receive everything in their own language.

Why can’t the world have a universal language?

Answer by Marc Ettlinger:

To answer this question we need to consider why we have multiple languages in the first place.

Presumably at some point, about 100,000-200,000 years ago, Homo sapiens started using language in the way we mean language now. At the time, we were spread out over a relatively confined space on the globe and it is practically impossible that language spontaneously arose in more than a handful of places.

Our route out of Africa

So, at one point, there were some limited number of languages among groups of people that had some amount of geographic proximity. It could have been relatively easy for one language to emerge then, or for everyone to have spoken the same mother tongue anyway, and for things to have stayed that way till now.

But that didn’t happen. In fact, the opposite happened. As humans spread across the globe and population growth exploded exponentially, so too did the number of languages. In fact, it’s estimated that there were approximately 10,000 languages spoken only a couple of hundred years ago.

There are two reasons for this. The first is that languages change. The second is that language is identity.

It’s easy to see that languages change. Remember struggling through Shakespeare? Yeah, me too, and that demonstrates the change that English has undergone over the past few hundred years. When that continues to happen and the same language changes in different ways in different geographical regions, you eventually get new languages. The most obvious example is Vulgar Latin dialects turning into the Romance Languages. From one language to many.

So, the first part of the answer is that the general tendency is for languages to propagate and diverge.

Your response may be that we are in a new world order, now, with globalization homogenizing the entire world into one common culture, facilitated by internet technology. America’s melting pot writ large.

The world as melting pot?

This is where part two of the answer comes in. Language is not simply a means for communicating. Language is also identity. We know that people communicate more than ideas with their language, they communicate who they are, what they believe and where they’re from. Subconsciously. So the obstacles to one language are similar to the obstacle to us all wearing the same clothes. It would certainly be cheaper and more efficient, but it’s not how people behave. And we see that empirically in studies of how Americans’ accents have not homogenized with the advent of TV (Why do some people not have accents?).

The same applies with languages — in the face of globalization, we see renewed interest in native languages, for example the rise of Gaelic (Irish language) in the face of the EU.

Having said that, colonialism and statism have led to a decline in the number of languages from its peak of 10,000 to about 6,000+ today, which you can read about here: Is English killing other languages?

Therein you’ll also see discussion of your question. The conclusion there, and what I’d similarly suggest here is that “so long as countries exist, English won’t encroach further.” In other words, the world doesn’t really want a universal language.

As long as humans aspire to have their own distinct identities and form different groups, the same aspirations that drive them to wave different flags, root for different teams, listen to different music and have different cultures, they’ll continue to have different languages.

S33: Interesting to think about how language changes prolifically even though what language represents often doesn’t change. I’ll have to think about that, re: diffraction of private experience into public spacetime. The cognitive level is more generic than the emotional level of expression – a more impersonal aesthetic. Ironically, the intent is to make gesture more enduring and objective, yet the result is more change over time and space, while the language of gesture is more universal. Of course, verbal expression offers many more advantages through its motility through public media.

Quora: What effect has the computer had on philosophy?

What effect has the computer had on philosophy in general and philosophy of mind in particular?

Obviously, computing has facilitated a lot of scientific advances that allow us to study the brain. e.g., neuroscience research would not exist as it does today without the computer. However, I’m more interested in how theories of computation, computer science and computer metaphors have shaped brain research, philosophy of mind, our understanding of human intelligence and the big questions we are currently asking. (Quora)

I’m not familiar enough with the development of philosophy of mind in the academic sense to comment on it, but the influence of the computer on popular philosophy includes the relevance of themes such as these:

Simulation Dualism: The success of computer graphics and games has had a profound effect on the believability of the idea of consciousness-as-simulation. Films like The Matrix have, for better or worse, updated Plato’s Allegory of The Cave for the cybernetic era. Unlike a Cartesian style substance dualism, where mind and body are separate, the modern version is a kind of property dualism where the metaphor of the hardware-software relation stands in for the body-mind relation. As software is an ordered collection of the functional states of hardware, the mind or self is the similarly ordered collection of states of the brain, or neurons, or perhaps something smaller than that (microtubules, biophotons, etc).

Digital Emergence: From a young age we now learn, at least in a general sense, how the complex organization of pixels or bits leads to something which we see as an image or hear as music. We understand how combinations of generic digits or simple rules can be experienced as filled with aesthetic quality. Terms like ‘random’ and ‘virtual’ have become part of the vernacular, each having been made more relevant through experience with computers. The revelation of genetic sequences has further bolstered the philosophical stance of a modern, programmatic determinism. Through computational mathematics, evolutionary biology and neuroscience, a fully impersonal explanation of personhood seems imminent (or a matter of settled science, depending on who you ask). This emergence of the personal consciousness from impersonal unconsciousness is thought to be a merely semantic formality, rather than a physical or functional one. Just as the behavior of a flock of birds flying in formation can be explained as emerging inevitably from the movements of each individual bird responding to the bird in front of it, the complex swarm of ideas and feelings that we experience is thought to also emerge inevitably from the aggregate behavior of neuron processes.

Information Supremacy: One impact of the computer on society since the 1980s has been to introduce Information Technology as an economic sector. This shift away from manufacturing and heavy industry seems to have paralleled a historic shift in philosophy from materialism to functionalism. It is no longer in fashion to think in terms of consciousness emerging from particular substances, but rather in terms of particular manipulations of data or information. The work of mathematicians and scientists such as Kurt Gödel, Claude Shannon, and Alan Turing re-defined the theory of what math can and cannot do, making information more physical in a sense, and making physics more informational. Douglas Hofstadter’s books such as Gödel, Escher, Bach: an Eternal Golden Braid continue to have a popular influence, bringing the ideas of strange loops and self-referential logic to the forefront. Computationally driven ideas like Chaos theory, fractal mathematics, and Bayesian statistics have also gained traction as popular Big-Picture philosophical principles.

The Game of Life: Biologist Richard Dawkins’ The Blind Watchmaker (following his other widely popular and influential work, The Selfish Gene) utilized a program to illustrate how natural selection produces biomorphs from a few simple genetic rules and mutation probabilities. An earlier program, Conway’s Game of Life, similarly demonstrates how life-like patterns evolve without input from a user, given only initial conditions and simple mathematical rules. Philosopher Daniel Dennett has been another extremely popular influence who maintains both a ‘brain-as-computer’ view and a ‘consciousness-as-pure-evolutionary-adaptation’ position. Dawkins coined the word ‘meme’ in The Selfish Gene, a word which has now itself become a meme. Dennett makes use of the concept as well, naming the repetitive power of memes as the blind architect of culture. Author Susan Blackmore further spread the meme meme with her book The Meme Machine. I see all of these ideas as fundamentally connected – the application of the information-first perspective to life and consciousness. To me they spell the farthest extent of the pendulum swing in philosophy to the West, a critique of naturalized subjectivity and an embrace of computational inevitability.
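
For readers who have not seen it, the rules of Conway’s Game of Life fit in a few lines. What follows is only a minimal sketch in Python, not Conway’s or anyone else’s original program: the function name step, the choice to store live cells as a set of (x, y) pairs, and the glider starting pattern are all my own assumptions for illustration. A dead cell with exactly three live neighbours is born, a live cell with two or three live neighbours survives, and everything else dies.

```python
from collections import Counter


def step(live):
    """Advance one generation. `live` is a set of (x, y) live cells (an assumed representation)."""
    # Count live neighbours for every cell adjacent to at least one live cell.
    counts = Counter(
        (x + dx, y + dy)
        for x, y in live
        for dx in (-1, 0, 1)
        for dy in (-1, 0, 1)
        if (dx, dy) != (0, 0)
    )
    # Birth on exactly 3 neighbours; survival on 2 or 3.
    return {cell for cell, n in counts.items() if n == 3 or (n == 2 and cell in live)}


# Initial conditions only: a five-cell "glider". Nothing else is supplied by the user.
glider = {(1, 0), (2, 1), (0, 2), (1, 2), (2, 2)}

state = glider
for _ in range(4):
    state = step(state)
```

After four generations the same five-cell shape reappears one cell further along the diagonal, which is the sense in which the pattern seems to travel with no input beyond its starting position and the rules.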

The Interior Strikes Back: When philosopher David Chalmers introduced the Hard Problem of Consciousness, he opened the door for a questioning of the eliminative materialism of Dawkins and Dennett. His contribution at that time, along with that of philosophers John Searle and Galen Strawson, has been to show the limitation of mechanism. The Hard Problem asks innocently, why is there any conscious experience at all, given that these information processes are driven entirely by their own automatic agendas? Chalmers and Strawson have championed the consideration of panpsychism or panexperientialism – that consciousness is a fundamental ingredient in the universe like charge, or perhaps *the* fundamental ingredient of the universe. My own view, Multisense Realism, is based on the same kinds of observations as those of Chalmers and Strawson, that physics and mathematics have a blind spot for some aspects, the most important aspects perhaps, of consciousness. Neuroscientist Raymond Tallis’ book Aping Mankind: Neuromania, Darwinitis and the Misrepresentation of Humanity provides a focused critique of the evidence upon which reductionist perspectives of human consciousness are built.

The bottom line for me is that computers, while wonderful tools, exploit a particular facet of consciousness – counting. The elaboration of counting into mathematics and calculus-based physics is undoubtedly the most powerful influence on civilization in the last 400 years; its success has been based on the power to control exterior bodies in public space. With the development of designer pharmaceuticals and more immersive internet experiences, we have good reason to expect that this power to control extends to our entire existence. Even with the computer’s universality taken as evidence that doing and knowing are indeed all there is to the universe, including ourselves, it is nonetheless difficult to ignore that beyond all that seems to exist, there is some thing or some one else who seems to ‘insist’. On further inspection, all of the simulations, games, memes, and information can be understood to supervene on a deeper level of nature. When addressing the ultimate questions, it is no longer adequate to take the omniscient voyeur and his ‘view from nowhere’ for granted. The universe as a program makes no sense without a user, and a user makes no sense for a program to develop for itself.

It’s not the reflexive looping or self-reference, not the representation or semiotics or Turing emulation that is the problem; it is the aesthetic presentation itself. We have become so familiar with video screens and keyboards that we forget that those things are for the user, not the computer. The computer’s world, if it had a world, is a completely anesthetic codescape with no plausible mechanism for or justification of any kind of aesthetic decoding as experience. Even beyond consciousness, computation cannot even justify a presentation of geometry. There is no need to draw a triangle itself if you already have the coordinates and triangular description to access at any time. Simulations need not actually occur as experiences; that would be magical and redundant. It would be like the government keeping a movie of every person’s life instead of just keeping track of driver’s licenses, birth certificates, tax returns, medical records, etc. A computer has no need to actualize or simulate – again that is purely for the aesthetic satisfaction of the user.
