AI is Still Inside Out

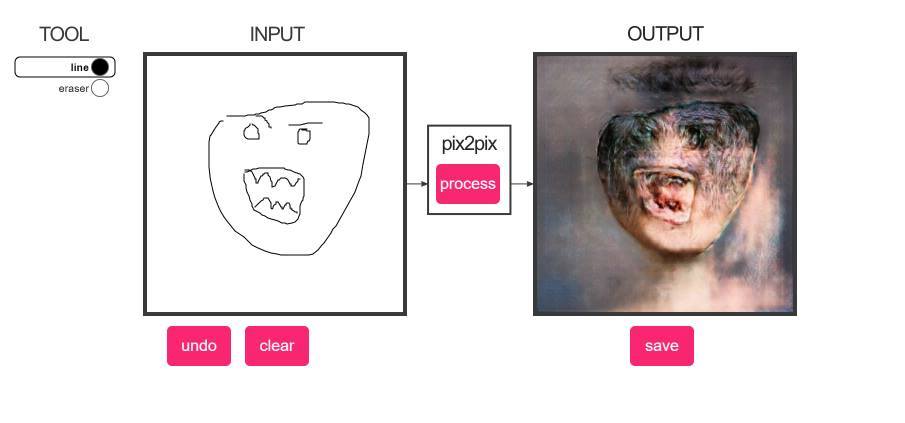

Turn your doodles into madness.

I think this is a good example of how AI is ‘inside out’. It does not produce top-down perception and sensations in its own frame of awareness, but rather it is a blind seeking of our top-down perception from a completely alien, unconscious perspective.

The result is not like an infant’s consciousness learning about the world from the inside out and becoming more intelligent, rather it is the opposite. The product is artificial noise woven together from the outside by brute force computation until we can almost mistake its chaotic, mindless, emotionless products for our own reflected awareness.

This particular program appears designed to make patterns that look like monsters to us, but that isn’t why I’m saying it’s an example of AI being inside out. The point is that this program exposes image processing as a blind process of arithmetic simulation rather than any kind of seeing. The result is a graphic simulacrum…a copy with no original which, if we’re not careful, can eventually tease us into accepting it as a genuine artifact of machine experience.

See also: https://multisenserealism.com/2015/11/18/ai-is-inside-out/

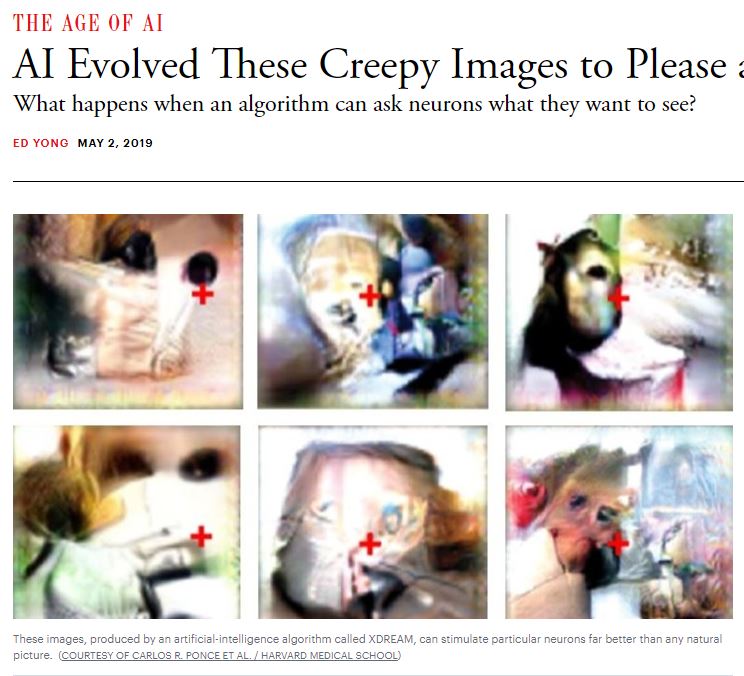

Time for an update (6/29/22) to further demonstrate the point:

Added 5/3/2023:

Stochastic filtering is not how sense actually works, but it can seem like how sense works if you’re using stochastic filtering to model sense.

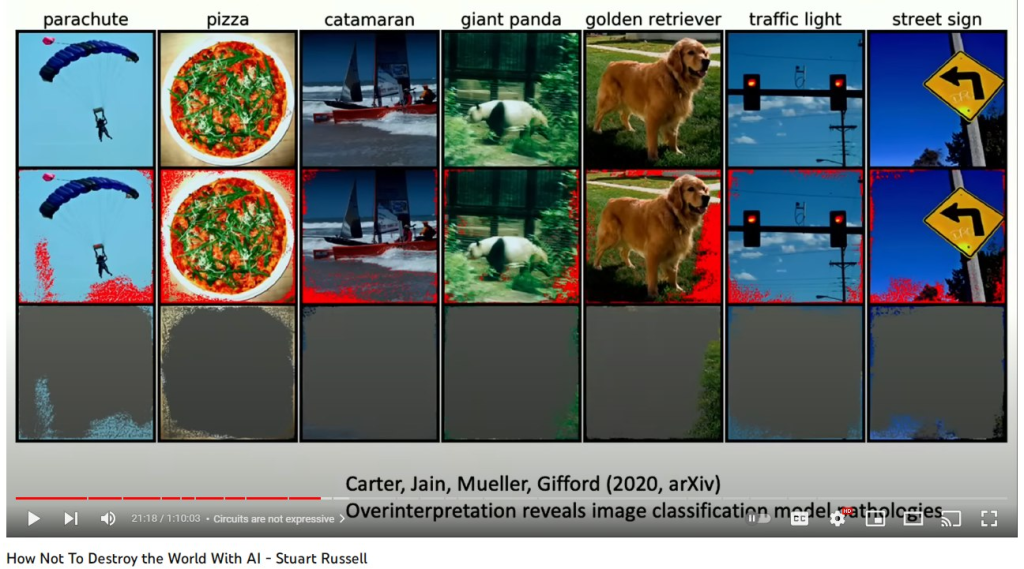

How Not To Destroy the World With AI – Stuart Russell

YouTube: Is There Really a Hard Problem of Consciousness?

Here are my comments on the part of this 4h31m long discussion that focuses explicitly on the Hard Problem, 03:05:19 – 03:43:24

I feel compelled to begin with a disclaimer: I respect the people in this trialogue, their intelligence and expertise, and I am fully aware that my views come from something of an outsider’s perspective. In all cases my motive is not to deny any scientific observation or posit any sort of magic beyond the near-omnipotence of what I propose that consciousness actually is – which is something like imagination on a cosmological scale. I fully respect the academic credentials of the participants as well, and while I never pursued that path farther than a BA degree in Anthropology, I prefer to use first names rather than Dr. titles – no offense or undue familiarity intended, it just seems less pretentious. Speaking of pretentious, I hereby end this disclaimer and proceed to the proceedings.

The Hard Problem segment opens with Joscha bringing up Daniel Dennett, and how his dismissal of qualia seems to be based on a criticism of how the term is used in philosophy. In the course of that, JB says of Dennett’s position…

3:06:46 “Oh well he’s a functionalist. Pretty much standard.

He says…uh good writing…there’s nothing wrong with what he writes. My position might be different from yours because I don’t see magical powers that are afforded by biology, it’s still just function. It is provided by biology and I can break down these functions ultimately into state transitions in substrates that form control structure and so on, so it’s it’s not opening avenues into something new. There’s basically no magical homunculus that is produced in consciousness via biology. Biology is just a way to get self-organizing matter.”

Here I agree with Joscha completely. I disagree with Dennett’s views almost entirely, but have great respect for how he does what he does. As far as biology goes, I see no grounds for emergent properties, especially any that smell of consciousness. When JB says that he can break down biological functions into mechanical events, I tend to believe him, or at least understand why that is entirely possible in theory. While we perceive breakpoints in the properties of physical, chemical, and biological phenomena, they are as far as I can reason, aesthetic separations. Functionally, they all refer to the same thing: concrete tangible objects on different scales of size moving each other around and changing shapes.

The geometry of those shapes and their movements becomes more complicated as we scale up in size from particles to molecules to cells to bodies, but complication in and of itself adds nothing to the conversation about consciousness. Indeed, without some awareness and experience, molecules or cells have no way of suspecting that they are part of any group or process and no capacity to qualify it as simple or complex. Without a theory of sense, we are really at a loss to say what the difference would be between matter and nothingness, but that’s another topic. Without going that far, I would still say that even the idea of ‘control systems’ can only arise within a conscious experience, through a thoughtful analysis of perceived objects in a universe where some objects are associated with conscious, teleological motives.

In the most stringent reading of the view that I propose, matter of any scale would not literally be controlling anything, so that any appearance of control is smuggled in by our conscious, anthropic experience. Without that anthropomorphic projection, what we see as bacteria or molecules controlling each other would reduce losslessly to non-purposeful habits of mindless physical force geometry. Saying that these movements of objects are control systems doesn’t actually add anything to what is occurring physically, but that isn’t key to understanding the Hard Problem. We can call what physical structures end up doing mechanically ‘control systems’ without taking that literally. Literally there’s just physical forces moving each other around automatically for no reason other than the given geometry of what they are. If what we mean by “self-organizing” implies something more than inevitable looping geometries of those automatic movements, then that too would fall into an Explanatory Gap.

I agree with what Joscha says next about the problem that I and many others have with Daniel Dennett’s disqualifications of qualia:

3:07:56 JB: “…what I found is that Dennett fails to convince a lot of his own students in some sense and I try to figure out why that is and the best explanation that I’ve come up with so far is that Dennett actually never explains phenomenal experience. And when discusses qualia he mostly points at definitional defects in the way in which most philosophers treat qualia and then says it’s probably something that doesn’t really exist in the way in which these people Define it so we also don’t need to explain it and a lot of people find this unsatisfying. Because they say that regardless of how you define it it’s clearly something that they experience like qualia being the atoms of phenomenal experience or aspects of phenomenal experience or features of phenomenal experience it doesn’t really matter how you define it it’s there right and please explain it to me because I don’t see how an unthinking and feeling Mechanical Universe is going to produce these wealth of experience that I’m confronted with and so this physics does not seem to be able to explain why something is happening to me why is me here why is their experience and this is something that some people feel is poorly addressed by Dennett.”

Joscha goes on to summarize the significance of Philosophical Zombie thought experiments in formulating the Hard Problem.

3:09:45 JB: “…so let’s see, we have a philosophical zombie in front of us and we are asking this philosophic zombie who in every regard acts like a human being because his brain implements all the necessary functionality just mechanically and not magically, ‘are you conscious?’ what is the zombie going to respond?”

Anastasia Bendebury “What do you what do you mean about the fact that the brain reproduces all of the effects?”

JB: “The idea of the philosophical zombie is that the philosophical zombie is producing everything without giving rise to phenomenal experience – just mechanical. Based on the intuition that…”

AB: “What does that mean? I don’t understand what that means.”

JB: “I think the intuition is that when we experience is something that cannot be explained through causal structure. So mechanisms.”

AB: “But why do we think that? I don’t understand why we possibly think that.

That’s what I mean about not understanding the hard problem of Consciousness. Because I’m like look… This is why my definition of life as beginning before the cell is instrumental to eliminating the hard problem of Consciousness. Because if you have in the most basic cell – which is an embodiment of the state of matter that is life – you have a resonant state which is electromagnetic. It’s the redox state.

And the cell needs to maintain that electrical resonance at a specific set point because if it does not, it dies. And so it is going out into the world and it is constantly controlling where it is in the world relative to its internal state. And as you progressively produce more and more complex beings you get a more and more complex map of the world and a more and more complex internal state. And so by the time that you have a walking human, you cannot have a walking human without an internal state. It’s a philosophical thought experiment that requires you to divorce yourself from everything that you know about biology in order to make the claim. And I just feel like you can’t do that!

Because the biology is inherently what produces the conscious…it’s the state of matter that produces the consciousness and all of the resonant waves that are inside of it. And so if you have the resonant waves in the sufficient complexity of a sufficiently resonant system that is interpreting the world relative to itself and to what it wants how can you have anything except for consciousness? You have experience, you have the mapping of expectation to frustration and you’re just…it just emerges from the most basic cell.”

I think that the reason why Anastasia isn’t seeing the Hard Problem is because her model of biology already includes aesthetic-participatory phenomena (experience, awareness, sensory-motivation), rather than cells being anesthetic-automatic mechanical events that occur independently of any experience of them.

The expectation that a physical state like reduction–oxidation (redox) constitutes or leads to a cell’s need to participate intentionally in seeking to correct what it somehow senses (unexplained by biology) as disequilibrium is already smuggling teleology into biochemistry > physics > mechanism.

Because we have genetic mutation and retrospective statistical natural/passive “selection” to account for the evolving mechanical behaviors of cells, no sense or teleology is needed in the explanation. We can omit it entirely and nothing changes in our description of what is causing the movements of cells in the direction of the chemical reactions that are necessarily pulling them there.

In a purely physical universe, chemistry would act not out of any perceived sense of need. There would be no agentic power to seek out satiation of that need, but rather the mechanical behaviors that end up maintaining redox states (unperceived) would be nothing but those behaviors that happen to have survived in the genetics of the cell. If the cell ends up moving in ways that maintain redox and happens to be in an environment that allows that to happen, then the cell has a better statistical chance of reproducing. That’s it. No need, no going out into a world, no controlling, no sense of self – just molecular geometry cashing itself out statistically over time.

JB: “How do resonant waves produce consciousness?”

AB: “say it again”

JB: “How do resonant waves produce consciousness?”

AB: “okay so do you know the Qualia Research Institute yes? So what is their work? Their work is that they’re basically showing that there are harmonic states in the brain that are associated with experience”

It’s important to realize, in my opinion, that the association is always coming from experience, not from anything the brain is doing. The association is retrospective from the reality of consciousness rather than prospective from the reality of unexperienced brain matter.

Michael Shilo DeLay: “The question seems akin to asking how do fundamental waves produce music? Which emerges from all of these individual tones. But the same thing with conscious experiences you have the summing of different modules within the neuron-based systems that are all aggregating and fighting and resonating with one another and you get music that comes out of it.”

JB: “And you don’t see an explanatory problem there? No?”

AB: “No. I’m like how I mean I can see a mathematical problem. I can see that it would be very very difficult to mathematize the way that that resonance…”

Here I see that what is being overlooked is the gap between phenomena in one sense modality (waving intensities of chemical or electrochemical movements in a brain) and any other aesthetic quality (sights, sounds, feelings, etc). When we understand music as emerging from a sequence of aural tones, that’s all within the context of a conscious experience. Sound in the aural sense is qualia. Sound waves are actually silent acoustic perturbations of matter as it collides with matter. Because our concept of sound waves, which refers to the dynamic geometry of tangible objects, is so firmly embedded in our learning, we overlook the need to explain how and why these movements of matter are perceived at all, and how they come to be perceived as sounds rather than as the silent, physical vibrations of substances that they ‘actually’ are.

In comparing music emerging from acoustic waves to consciousness emerging from brain wave resonance, we are already falling for the same circular reasoning fallacy that leads people to deny the Hard Problem. Since there is the same Hard Problem there in transducing tangible changes in objects to aural experiences of sound, notes, and music, it actually only reinforces the Hard Problem in the brain > consciousness context. Sense is the key. That’s why I write so much about it and call my view Multisense Realism.

For the next few minutes the hosts and guest discuss the limits of the explanatory power of math, language, and scientific theory, and the difference between explanation and description. This isn’t directly related to the Hard Problem in my opinion, but it does speak to the same theme of neglecting different modalities of sense and sense making and glossing over the fact that in all cases, the gaps between such modalities can only be filled, as far as we can conceive, by some conscious experience in which multiple modes of qualia can be accessed and manipulated intentionally in another mode of sense making (imagination, abstraction, understanding). Because Anastasia’s view of the Hard Problem is that it must come from a misunderstanding of biology (which she already gives experience and teleology to), she sees the Problem as one of finding fault with description for not being explanatory.

I agree with Joscha in his response:

3:18:07 JB: “So when you talk about harmonic waves producing a phenomenon for me that’s very far from a causal explanation that so it’s something that is very unsatisfying to me because I cannot build this but well for me a causal explanation is something I can make.”

This is at the heart of the Explanatory Gap, which I like to meme-ify with this cannibalized version of a cartoon (not sure which artist I stole the original from, but my apologies):

Following this, Joscha gets into a detailed technical explanation of what waves are and why they don’t explain or justify producing phenomenal qualities.

3:20:02 JB: “…you can build control systems and once you have control systems you can also build control systems that don’t just regulate the present but also regulate the future, but in order to regulate the future they need to represent stuff that isn’t there right – that will be there at some level of coarse graining but it’s currently not present so you need to have a system that is causally insulating part of your mechanical structure from the present…”

I propose that groupings of moving objects don’t actually need to represent anything to appear to ‘control’ future states, and that no such need to represent could be fulfilled physically. All that would be needed are mechanical switches and timers that happened to have evolved to fit with environmental states that happen to repeat. If the brain needed to control the position of the legs in the future to walk successfully, it need only set aside some neurons for that purpose. They don’t need to represent anything or imagine the future, they just need to grow a miniature duplicate of the brain chemistry involved in walking but without being connected directly to efferent nerves to the legs. There doesn’t need to be a representational relationship hiding inside of chemistry, the chemistry just had to have accidentally evolved to accumulate a lot of repeating triggers of triggers.

In other words, there’s no reason to represent anything in the future when you can just use space instead of time. You don’t need to know that the wood you’re piling up is for burning in cold Winter if you have a statistical mechanism of mutation that happens to select for piling up wood and burning it if temperature conditions activate a thermal switch. Instead of executive consciousness, it would just happen that a brain that grows miniature low resolution copies of itself would end up pantomiming meta-cerebral functions. No feedback or representation at all, just parallel reproduction of self-similar neurochemical systems. No code or instructions are needed, just clockwork chain reactions.

I’m not suggesting that I think that’s what happened in reality. I think that the reality is that all phenomena are part of the way that conscious experiences are nested and relate to each other. If that were not the case, I doubt that biology as we know it would have evolved at all. I agree with Anastasia’s view that experience begins prior to the cell, and with Joscha’s view that there is nothing special about biology that would explain consciousness.

Where I do disagree with Joscha’s estimation of consciousness is in the attribution of ideas and motivations to robots:

3:28:55 JB: “it’s not obvious that the bacteria needs to be more conscious than a soccer playing robot which is not conscious. Right? If you build a robot that plays soccer it’s the robot is fulfilling a bunch of function it’s going to model its environment it’s going to figure out where the ball is where the other robots are where the goal is how to get the ball between yourself and the goal how to push the ball into the goal and so on so in some [functional] sense it’s going to have beliefs about the environment it’s going to have commitments about the course of actions it’s going to have [goal-directed] behavior it’s going to have representations about the world with itself inside but I don’t think it experiences anything.”

I don’t see any reason to assume that a robot’s behavior requires the generation of any actual models, beliefs, or representations. The robot is just lots of switches switching switches that control the movements of the physically assembled parts that make it up. I like to use the example of a fishing net and how it is very effective at catching fish, but it doesn’t have to know anything about what it is doing. The size of the fish and the shape of the net are all that are needed. In the same way, the structure of the robot is all that is needed to explain why the robot parts move in the way that they must move – by physical law, not by models or beliefs.

In the video AB and MSD go on to talk about justifying the emergence of consciousness in bacteria but not in a robot because of either complexity or flexibility. Neither of these seems to me to warrant the creation of a new type of representational or qualitative metaphysics. Moving objects are just moving objects, no matter how many there are or how fancy their movements become.

3:34:18 AB: “…any exercise that attempts to explain consciousness without the physical substrate without recognizing that the physical substrate is mandatory for the effect that we’re seeing and is the iterative product of progressive complexity that has been evolving on Earth for the last four billion years will fail to produce Consciousness because it is inherent in the structural organization of these different modules that resonate with one another to create complexity…”

Here is a case where I agree with what Anastasia is saying in one sense but disagree completely in another. I agree that the human conscious experience probably has to result from billions of years of specific evolutionary history, however, I disagree that it is related to physical structures. I propose that the physical structures and mechanisms are effects, rather than causes, of how our conscious experience has developed. Because I think that the physical universe we see is an appearance in our evolved consciousness (see Donald Hoffman’s Interface theory), it is the trans-physical accumulation of conscious experiences themselves that the human qualities of our experience depend upon.

For this reason I do not think that human consciousness can be replicated or simulated mechanically. It’s not because biology alone is capable of providing complexity, flexibility, or harmonics, it’s because biology is the particular vocabulary that consciousness has developed for higher/richer forms of experience to use lower forms of conscious experience as a vehicle. I think that biology doesn’t generate consciousness, rather biology is a symptom of the interface between two different epochs and timescales of evolving conscious experience.

3:35:02 AB: “…the phenomenon of experience is all these different resonant modes you can think about what it feels like to listen to a sine wave versus what it feels like to listen to a symphony they’re the same thing except the sine wave has been modulated into something far more complex and that complex wave has something else in it.”

I agree that a symphony is the same thing as complex modulations of sound, but again, a sine wave doesn’t sound like anything unless you can hear. The chain of physical causality ends in the silent brain. There is nothing about an auditory cortex that will be able to conjure sounds out of the oscillating changes in its chemistry. There is no evolutionary advantage to an organism that has to hear sounds rather than one that just transduces acoustic vibrations mechanically into oscillating electrochemical states. We know, for example, from Blindsight patients that the brain is perfectly capable of responding correctly to questions about optical conditions without any experience of visibility.

I agree with Joscha that there’s nothing special about biology as far as being an extra property above chemistry or physics. Again, it’s all just tangible objects moving each other around in public space.

3:38:37 AB: “What the QRI work is interesting where it’s interesting because there appear to be different states inside the brain that correspond to emotional states and so if you are unhappy or you’re feeling depressed there’s a different brainwave state that is associated with that experience.”

I think it’s important to understand that ‘correspond to’ can only happen in a conscious experience. Correspond isn’t a physical activity or structure. Physical states of a brain have no physical way to correspond to anything, so they are just what they appear to be – geometric changes in tangible shapes. Consciousness uses brains, but brains have no way to use consciousness. They have to use physical force – which also has no way to use consciousness. When we use a non-physical model of nature instead, there is no problem with some aspects of consciousness perceiving other aspects of itself as physics. We see it all the time in dreams.

3:40:33 JB: “What is actually sadness? Right? Sadness is an affect it is directed on some source of satisfying a need being permanently removed from your world can never get it back and it’s completely crucial to you. You identified the satisfying yourself through the source of satisfaction and it’s got it will never come back right this is sadness in some sense and a stronger form of sadness is grief it’s a paralyzing form of sadness and sadness leads to certain behaviors mostly a disengagement with the world because you’re helpless you cannot do anything about it. In supplicative behavior you are appealing to an environment to help you with the situation and to create a solution for a problem for which you’re incapable of finding one. And so sadness is some complex psychological phenomena that you can formally define and the question is how is it implemented in the brain?

And what’s also crucial about sadness is that you experience yourself changing as a result of sadness and without this experience you wouldn’t say that somebody is sad you would say somebody acts as if they are sad but there’s a difference between acting as if you are sad and actually being sad. Because that requires an experience of sadness. And so we are coming back to this original question how is it possible that the system that is mechanically implemented is actually feeling something and don’t say waves that are interacting with each other that sounds like magical thinking to me because it’s not adding anything beyond molecules bumping into each other and you’re saying more complexity it just means more molecules bumping more complexly into each other.”

I completely agree that any explanation of sadness based on waves doesn’t work, and that this is what the Hard Problem is all about. In Joscha’s discussion, he links sadness with semantic and behavioral correlates, but that too doesn’t explain emotion itself. Sadness is an affect but that doesn’t explain why or how affects could arise either from brain activity or events that happen to a biological organism.

Finishing up this segment, the discussion turns to conceptualization.

3:42:41 MSD – “…a concept is a relationship between two physical bodies so one is moving towards the other let’s say and then an abstract concept would be taking that motion and relating it to another concept and you can compound these into greater and greater degrees of abstraction…”

I disagree that concepts have anything to do with objects. Objects relate to each other in only one way as far as I can tell, and that is through the relation of the geometry of their shape, position, and motion. Nothing conceptual or abstract about that as far as I can tell, although since motion requires some sense of duration, we could distinguish objects from objects changing position/shape over time, with the latter being arguably less like a static object. Since stasis and velocity are both relative and dependent on perceptual framing (as is everything except perception itself), the idea of a static object is purely hypothetical. If we say that anything hypothetical is an abstraction, fair enough, but even so, it is an abstraction of geometric shapes and not a concept that departs from geometry.

Joscha Bach, Yulia Sandamirskaya: “The Third Age of AI: Understanding Machines that Understand”

Here are my comments and Extra Annoying Questions™ on this recent discussion. I like and admire/respect both of them and am not claiming to have competence in the specific domains of AI development they’re speaking on, only in the metaphysical/philosophical domains that underlie them. I don’t even disagree with the merits of each of their views on how to best proceed with AI dev in the near future. What fun would it be to write about what I don’t disagree with though? My disagreements are with the big, big, big picture issues of the relationship of consciousness, information processing, and cosmology.

Jumping right in near the beginning…

“The intensity gets associated with brightness and the flatness gets associated with the absence of brightness, with darkness”

Joscha 12:37

First of all, the (neuronal) intensity and flatness *already are functionally just as good as* brightness and darkness. There is no advantage to conjuring non-physical, non-parsimonious, unexplained qualities of visibility to accomplish the exact same thing as was already being accomplished by invisible neuronal properties of ‘intensity’ and ‘flatness’.

Secondly, where are the initial properties of intensity and flatness coming from? Why take those for granted but not sight? In what scope of perception and aesthetic modality is this particular time span presented as a separate event from the totality of events in the universe? What is qualifying these events of subatomic and atomic positional change, or grouping their separate instances of change together as “intense” or “flat”? Remember, this is invisible, intangible, and unconscious. It is unexperienced. A theoretical neuron prior to any perceptual conditioning that would make it familiar to us as anything resembling a neuron, or an object, or an image.

Third, what is qualifying the qualification of contrast, and why? In a hypothetical ideal neuron before all conscious experience and perception, the mechanisms are already doing what physical forces mechanically and inevitably demand. If there is a switch or gate shaped structure in a cell membrane that opens when ions pile up, that is what is going to happen regardless of whether there is any qualification of the piling of ions as ‘contrasting’ against any subsequent absence of piles of ions. Nothing is watching to see what happens if we don’t assume consciousness. So now we have exposed as unparsimonious and epiphenomenal to physics not only visibility (brightness and darkness) and observed qualities of neuronal activity (intensity and flatness), but also the purely qualitative evaluation of ‘contrast’. Without consciousness, there isn’t anything to cause a coherent contrast that defines the beginning and ending of an event.

- 13:42 I do like Joscha’s read of the story of Genesis as a myth describing consciousness emerging from a neurological substrate, however I question why the animals he mentions are constructed ‘in the mind’ rather than discovered. Also, why so much focus on sight? What about the other senses? We can feel the heat of the sun – why not make animals out of arrays of warm and cool pixels instead of bright and dark? Why have multiple modes of aesthetic presentation at all? Again – where is the parsimony that we need for a true solution to the hard problem / explanatory gap? If we already have molecules doing what molecules must do in a neuron, which is just move or resist motion, how and why do we suddenly reach for ‘contrast’-ing qualities? If we follow physical parsimony strictly, the brain doesn’t do any ‘constructing’ of brightness, or 3d sky, or animals. The brain is *already* constructing complex molecular shapes that do everything that a physical body could possibly evolve to do – without any sense or experience and just using a simple geometry of invisible, unexperienced forces. What would a quality of ‘control’ be doing in a physical universe of automatic, statistical-mechanical inevitables?

“I suspect that our culture actually knew, at some point, that reality, and the sense of reality and being a mind, is the ability to dream – the ability to be some kind of biological machine that dreams about a world that contains it.”

Joscha 14:28

This is what I find so frustrating about Joscha’s view. It is SO CLOSE to getting the bigger picture but it doesn’t go *far enough*. Why doesn’t he see that the biological machine would also be part of the dream? The universe is not a machine that dreams (how? why? parsimony, hard problem) – it’s a dream that machines sometimes. Or to be more precise (and to advertise my multisense realism views), the universe is THE dream that *partially* divides itself into dreams. I propose that these diffracted dreams lens each other to seem like anti-dreams (concrete physical objects or abstract logical concepts) and like hyper-dreams (spiritual/psychedelic/transpersonal/mytho-poetic experiences), depending on the modalities of sense and sense-making that are available, and whether they are more adhesive to the “Holos” or more cohesive to the “Graphos” end of the universal continuum of sense.

“So what do we learn from intelligence in nature? So first if first if we want to try to build it, we need to start with some substrates. So we need to start with some representations.”

Yulia 16:08

Just noting this statement because in my understanding, a physical substrate would be a presentation rather than a re-presentation. If we are talking about the substrates in nature we are talking about what? Chemistry? Cells made of molecules? Shapes moving around? Right away Yulia’s view seems to give objects representational abilities. I understand that the hard problem of consciousness is not supposed to be part of the scope of her talk, but I am that guy who demands that at this moment in time, it needs to be part of every talk that relates to AI!

“…and in nature the representations used seem to be not distributed. Neural networks, if you’re familiar with those, multiple units, multi-dimensional vectors represent things in the world…and not just (you know) single symbols.”

Yulia 16:20

How is this power of representation given to “units” or “vectors”, particularly if we are imagining a universe prior to consciousness? Must we assume that parts of the world just do have this power to symbolize, refer to, or seem like other parts of the world in multiple ways? That’s fine, I can set aside consciousness and listen to where she is going with this.

17:16: I like what Yulia brings up about the differences between the natural (biological, really) and technological approaches. She says that nature begins with dynamic stability by adaptation to change (homeostasis, yes?) while AI architecture starts with something static and then we introduce change if needed. I think that’s a good point, and relate it to my view that “AI is Inside Out”. I agree and go further to add that not only does nature begin with change and add stasis when needed, but nature begins with *everything* that it is while AI begins with *nothing*…or at least it did until we started using enormous sets of training data from the world.

- to 18:14: She’s discussing the lag between sensation and higher cognition…the delay that makes prediction useful. This is a very popular notion and it is true as far as it goes. Sure, if we look at the events in the body as a chain reaction in the micro timescale, then there is a sequence going from retina to optic nerve to visual cortex, etc – but – I would argue this is only one of many timescales that we should understand and consider. In other ways, my body’s actions are *behind* my intentions for it. My typing fingers are racing to keep up with the dictation from my inner voice, which is racing to keep up with my failing memory of the ideas that I want to express. There are many agendas that are hovering over and above my moment-to-moment perceptions, only some of which I am personally aware of at any given moment but recognize my control over them in the long term. To look only at the classical scale of time and biology is to fall prey to the fallacy of smallism.

I can identify at least six modes of causality/time with only two of them being sequential/irreversible.

The denial of other modes of causality becomes a problem if the thing we’re interested in, personal consciousness, does not exist on that timescale or causality mode that we’re assuming is the only one that is real. I don’t think that we exist in our body or brain at all. The brain doesn’t know who we are. We aren’t there, and the brain’s billions of biochemical scale agendas aren’t here. Neither description represents the other, and only the personal scale has the capacity to represent anything. I propose that they are different timescales of the same phenomenon, which is ‘consciousness’, aka nested diffractions of the aesthetic-participatory Holos. One does not cause the other in the same way that these words you see on your screen are not causing concepts to be understood, and the pixels of the screen aren’t causing a perception of them as letters. They coincide temporally, but are related only through a context of conscious perception, not built up from unconscious functions of screens, computers, bodies, or brains.

- to 25:39 …cool stuff about insect brains, neural circuits etc.

- 25:56 talking about population coding, distributed representations. I disagree with the direction that representation is supposed to take here, as far as I think that it is important to at least understand that brain functions cannot *literally* re-present anything. It is actually the image of the brain that is a presentation in our personal awareness that iconically recapitulates some aspects of the subpersonal timescale of awareness that we’re riding on top of. Again, I think we’re riding in parallel, not in series, with the phenomenon that we see as brain activity. I suggest that the brain activity never adds up to a conscious experience. The brain is the physical inflection point of what we do to the body and what the body does to us. Its activity is already a conscious experience in a smaller and larger timescale than our own, that is being used by the back end of another, personal timescale of conscious experience. What we see as the body is, in that timescale of awareness that is subpersonal rather than subconscious, a vast layer of conscious experiences that only look like mechanisms because of the perceptual lensing that diffracts perspective from all of the others. The personal scope of awareness sees the subpersonal scope of awareness as a body/cells/molecules because it’s objectifying the vast distance between that biological/zoological era of conscious experience so that it can coexist with our own. It is, in some sense, our evolutionary past – still living prehistorically. We relate to it as an alien community through microscoping instruments. I say this to point the way toward a new idea. I’m not expecting that this would be common knowledge and I don’t consider that cutting edge thinkers like Sandamirskaya and Bach are ‘wrong’ for not thinking of it that way. Yes, I made this view of the universe up – but I think that it does actually work better than the alternatives that I have seen so far.

- to 34:00 talking about the unity of the brain’s physical hardware with its (presumed) computing algorithms vs the disjunction between AI algorithms and the hardware/architectures we’ve been using. Good stuff, and again aligns with my view of AI being inverted or inside out. Our computers are a bottom-up facade that imitates some symptoms of some intelligence. Natural intelligence is bottom up, top down, center out, periphery in, and everything in between. It is not an imitation or an algorithm but it uses divided conscious experience to imitate and systemize as well as having its own genuine agendas that are much more life affirming and holistic than mere survival or control. Survival and control are annoyances for intelligence. Obstructions to slow down the progress from thin scopes of anesthetized consciousness to richer aesthetics of sophisticated consciousness. Yulia is explaining why neuroscience provides a good example of working AI that we should study and emulate – I agree that we should, but not because I think it will lead to true AGI, just that it will lead to more satisfying prosthetics for our own aesthetic-participatory/experiential enhancement…which is really what we’re trying to do anyhow, rather than conjure a competing inorganic super-species that cannot be killed.

When Joscha resumes after 34:00, he discusses Dall-E and the idea of AI as ‘dreaming’ but at the same time as ‘brute force’ with superhuman training on 800 million images. Here I think the latter is mutually exclusive of the former. Brute force training yes, dreaming and learning, no. Not literally. No more than a coin sorter learns banking. No more than an emoji smiles at us. I know this is tedious but I am compelled to continue to remind the world about the pathetic fallacy. Dall-E doesn’t see anything. It doesn’t need to. It’s not dreaming up images for us. It’s a fancy cash register that we have connected to a hypnotic display of its statistical outputs. Nothing wrong with that – it’s an amazing and mostly welcome addition to our experience and understanding. It is art in a sense, but in another it’s just a Ouija board through which we see recombinations of art that human beings have made for other human beings based on what they can see. If we want to get political about it, it’s a bit of a colonial land grab for intellectual property – but I’m ok with that for the moment.

In the dialogue that follows in the middle of the video, there is some interesting and unintentionally connected discussion about the lack of global understanding of the brain and the lack of interdisciplinary communication within academia between neuroscientists, cognitive scientists, and neuromorphic engineers (philosophers of mind not invited ;( ).

Note to self: get a bit more background on the AI silver bullet of the moment, the stochastic gradient descent algorithm.
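For my own reference, here is a minimal sketch of what stochastic gradient descent does, assuming a toy problem of fitting a single slope `w` so that `w * x` matches some data. The function name `sgd_fit_slope`, the learning rate, and the data are all made up for illustration and come from nowhere in the talk. The idea is only that the parameter gets nudged, one randomly ordered example at a time, in whatever direction shrinks the squared error.

```python
import random


def sgd_fit_slope(points, lr=0.01, epochs=200, seed=0):
    """Fit y ~ w * x by stochastic gradient descent on squared error.

    Hypothetical toy example: one parameter, one example per update.
    """
    rng = random.Random(seed)
    data = list(points)
    w = 0.0  # start from an arbitrary guess
    for _ in range(epochs):
        rng.shuffle(data)  # the "stochastic" part: random example order
        for x, y in data:
            error = w * x - y      # how far off the current guess is
            grad = 2 * error * x   # derivative of error**2 with respect to w
            w -= lr * grad         # step downhill, scaled by the learning rate
    return w


# Toy data generated from y = 3x, so the slope to recover is 3.
points = [(float(x), 3.0 * x) for x in range(1, 6)]
w = sgd_fit_slope(points)
print(round(w, 6))
```

Nothing in the loop refers to what the numbers are about. It is arithmetic on an error value, repeated until the error stops shrinking.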

Bach and Sandamirskaya discuss the benefits and limitations of the neuromorphic, embodied hardware approach vs investing more in building simulations using traditional computing hardware. We are now into the shop talk part of the presentation. I’m more of a spectator here, so it’s interesting but I have nothing to add.

By 57:12 Joscha makes a hypothesis about the failure of AI thus far to develop higher understanding.

“…the current systems are not entangled with the world, but I don’t think it’s because they are not robots, I think it’s because they’re not real time.”

To this I say it’s because ‘they’ are not real. It’s the same reason why the person in the mirror isn’t actually looking back at you. There is no person there. There is an image in our visual awareness. The mirror doesn’t even see it. There is no image for the mirror, it’s just a plane of electromagnetically conditioned metal behind glass that happens to do the same kind of thing that the matter of our eyeballs does, which is just optical physics that need not have any visible presentation at all.

The problem is the assumption that we are our body, or are in our body, or are generated by a brain/body rather than seeing physicality as a representation of consciousness on one timescale that is more fully presented in another that we can’t directly access. When we see an actor in a movie, we are seeing a moving image and hearing sound. I think that the experience of that screen image as a person is made available to us not through processing of those images and sounds but through the common sense that all images and sounds have with the visible and aural aspects of our personal experience. We see a person *through* the image rather than because of it. We see the ‘whole’ through ‘holes’ in our perception.

This is a massive intellectual shift, so I don’t expect anyone to be able to pull it off just by thinking about it for 30 seconds, even if they wanted to. It took several years of deep consideration for me. The hints are all around us though. Perceptual ‘fill-in’ is the rule, not the exception. Intuition. Presentiment. Precognitive dreams, remote viewing, and other psi. NDEs. Blindsight and synesthesia.

When we see each other as an image of a human body we are using our own limited human sight, which is also limited by the animal body>eyes>biology>chemistry>physics. All of that is only the small illuminated subset of consciousness-that-we-are-personally-conscious-of-when-we-are-normatively-awake. It should be clear that is not all that we are. I am not just these words, or the writer of these words, or a brain or a body, or a process using a brain or body, I am a conscious experience in a universe of conscious experiences that are holarchically diffracted (top down, bottom up, center out, etc). My intelligence isn’t an algorithm. My intelligence is a modality of awareness that uses algorithms and anti-algorithms alike. It feasts on understanding like olfactory-gustatory awareness feasts on food.

Even that is not all of who I am, and even “I” am not all of the larger transpersonal experience that I live through and that lives through me. I think that people who are gifted with deep understanding of mathematics and systemizing logic tend to have been conditioned to use that part of the psyche to the exclusion of other modes of sense and sense making, leaving the rich heritage of human understanding of larger psychic contexts to atrophy, or worse, reappear as a projected shadow appearance of ‘woo’ to the defensive ego, still wounded from the injury of centuries under our history of theocratic rule. This is of course very dangerous, and even more dangerous, you need that atrophied part of the psyche to understand why it is dangerous…which is why seeing the hard problem in the first place is too hard for many people, even many philosophers who have been discussing it for decades.

Synchronistically, I now return to the video at 57:54, where Yulia touches on climate change (or more importantly, from our perspective, climate destabilization) and the flawed expectation of mind uploading. I agree with her that it won’t work, although probably for different reasons. It’s not because the substrate matters – it does, but only because the substrate itself is a lensing artifact masking what is actually the totality of conscious experience.

Organic matter and biology are a living history of conscious experience that cannot be transcended without losing the significance and grounding of that history. Just as our body cannot survive by drinking an image of water, higher consciousness cannot flourish in a sandbox of abstract semiotic switches. We flourish *in spite of* the limits of body and brain, not because our experience is being generated by them.

This is not to say that I think organic matter and biology are in any way the limits of consciousness or human consciousness, but rather they are a symptom of the recipe for the development of the rich human qualities of consciousness that we value most. The actual recipe of human consciousness is made of an immense history of conscious experience, wrapped around itself in obscenely complicated ways that might echo the way that protein structures are ordered. This recipe includes seemingly senseless repetition of particular conscious experiences over vast durations of time. I don’t think that this authenticity can be faked. Unlike the patina of an antique chair or the bouquet of a vintage wine that could in theory be replicated artificially, the humanness of human consciousness depends on the actual authenticity of the experience. It actually takes billions of years of just these types of physical > chemical > organic > cellular > somatic > cerebral > anthropological > cultural > historical experiences to build the capacity to appreciate the richness and significance of those layers. Putting a huge data set end product of that chain of experience in the hands of a purely pre-organic electrochemical processor and expecting it to animate into human-like awareness is like trying to train a hydrogen bomb to sing songs around a campfire.

Joscha Bach: We need to understand the nature of AI to understand who we are – Part 2

This is the second part of my comments on Nikola Danaylov’s interview of Joscha Bach: https://www.singularityweblog.com/joscha-bach/

My commentary on the first hour is here. Please watch or listen to the podcast as there is a lot that is omitted and paraphrased in this post. It’s a very fast paced, high-density conversation, and I would recommend listening to the interview in chunks and following along here for my comments if you’re interested.

1:00:00 – 1:10:00

JB – Conscious attention in a sense is the ability to make indexed memories that I can later recall. I also store the expected result and the triggering condition. When do I expect the result to be visible? Later I have feedback about whether the decision was good or not. I compare the result I expected with the result that I got and I can undo the decision that I made back then. I can change the model or reinforce it. I think that this is the primary mode of learning that we use, beyond just associative learning.

JB – 1:01:00 Consciousness means that you will remember what you had attended to. You have this protocol of ‘attention’. The memory of the binding state itself, the memory of being in that binding state where you have this observation that combines as many perceptual features as possible into a single function. The memory of that is phenomenal experience. The act of recalling this from the protocol is Access Consciousness. You need to train the attentional system so it knows where you store your backend cognitive architecture. This is recursive access to the attentional protocol, you remember when you make the recall. You don’t do this all the time, only when you want to train this. This is reflexive consciousness. It’s the memory of the access.

CW – By that definition, I would ask if consciousness couldn’t exist just as well without any phenomenal qualities at all. It is easy to justify consciousness as a function after the fact, but I think that this seduces us into thinking that something impossible can become possible just because it could provide some functionality. To say that phenomenal experience is a memory of a function that combines perceptual features is to presume that there would be some way for a computer program to access its RAM as perceptual features rather than as the (invisible, unperceived) states of the RAM hardware itself.

JB – Then there is another thing, the self. The self is a model of what it would be like to be a person. The brain is not a person. The brain cannot feel anything, it’s a physical system. Neurons cannot feel anything, they’re just little molecular machines with a Turing machine inside of them. They cannot even approximate arbitrary function, except by evolution, which takes a very long time. What do we do if you are a brain that figures out that it would be very useful to know what it is like to be a person? It makes one. It makes a simulation of a person, a simulacrum to be more clear. A simulation basically is isomorphic in the behavior of a person, and that thing is pretending to be a person, it’s a story about a person. You and me are persons, we are selves. We are stories in a movie that the brain is creating. We are characters in that movie. The movie is a complete simulation, a VR that is running in the neocortex.

You and me are characters in this VR. In that character, the brain writes our experiences, so we *feel* what it’s like to be exposed to the reward function. We feel what it’s like to be in our universe. We don’t feel that we are a story because that is not very useful knowledge to have. Some people figure it out and they depersonalize. They start identifying with the mind itself or lose all identification. That doesn’t seem to be a useful condition. The brain is normally set up so that the self thinks that it’s real, and gets access to the language center, and we can talk to each other, and here we are. The self is the thing that thinks that it remembers the contents of its attention. This is why we are conscious. Some people think that a simulation cannot be conscious, only a physical system can, but they’ve got it completely backwards. A physical system cannot be conscious, only a simulation can be conscious. Consciousness is a simulated property of a simulated self.

CW – To say “The self is a model of what it would be like to be a person” seems to be circular reasoning. The self is already what it is like to be a person. If it were a model, then it would be a model of what it’s like to be a computer program with recursively binding states. Then the question becomes, why would such a model have any “what it’s like to be” properties at all? Until we can explain exactly how and why a phenomenal property is an improvement over the absence of a phenomenal property for a machine, there’s a big problem with assuming the role of consciousness or self as ‘model’ for unconscious mechanisms and conditions. Biological machines don’t need to model, they just need to behave in the ways that tend toward survival and reproduction.

(JB) “The brain is not a person. The brain cannot feel anything, it’s a physical system. Neurons cannot feel anything, they’re just little molecular machines with a Turing machine inside of them”.

CW – I agree with this, to the extent that I agree that if there were any such thing as *purely* physical structures, they would not feel anything, and they would just be tangible geometric objects in public space. I think that rather than physical activity somehow leading to emergent non-physical ‘feelings’ it makes more sense to me that physics is made of “feelings” which are so distant and different from our own that they are rendered tangible geometric objects. It could be that physical structures appear in these limited modes of touch perception rather than in their own native spectrum of experience because they are much slower/faster and older than our own.

To say that neurons or brains feel would be, in my view, a category error since feeling is not something that a shape can logically do, just by Occam’s Razor, and if we are being literal, neurons and brains are nothing but three-dimensional shapes. The only powers that a shape could logically have are geometric powers. We know from analyzing our dreams that a feeling can be symbolized as a seemingly solid object or a place, but a purely geometric cell or organ would have no way to access symbols unless consciousness and symbols are assumed in the first place.

If a brain has the power to symbolize things, then we shouldn’t call it physical. The brain does a lot of physical things but if we can’t look into the tissue of the brain and see some physical site of translation from organic chemistry into something else, then we should not assume that such a transduction is physical. The same goes for computation. If we don’t find a logical function that changes algorithms into phenomenal presentations then we should not assume that such a transduction is computational.

(JB) “What do we do if you are a brain that figures out that it would be very useful to know what it is like to be a person? It makes one. It makes a simulation of a person, a simulacrum to be more clear.”

CW – Here also the reasoning seems circular. Useful to know what? “What it is like” doesn’t have to mean anything to a machine or program. To me this is like saying that a self-driving car would find it useful to create a dashboard and pretend that it is driven by a person using that dashboard rather than being driven directly by the algorithms that would be used to produce the dashboard.

(JB) “A simulation basically is isomorphic in the behavior of a person, and that thing is pretending to be a person, it’s a story about a person. You and me are persons, we are selves. We are stories in a movie that the brain is creating.”

CW – I have thought of it that way, but now I think that it makes more sense if we see both the brain and the person as parts of a movie that is branching off from a larger movie. I propose that timescale differentiation is the primary mechanism of this branching, although timescale differentiation is only one sort of perceptual lensing that allows experiences to include and exclude each other.

I think that we might be experiential fragments of an eternal experience, and a brain is a kind of icon that represents part of the story of that fragmentation. The brain is a process made of other processes, which are all experiences that have been perceptually lensed by the senses of touch and sight to appear as tangible and visible shapes.

The brain has no mechanical reason to make movies, it just has to control the behavior of a body in such a way that repeats behaviors which have happened to coincide with bodies surviving and reproducing. I can think of some good reasons why a universe which is an eternal experience would want to dream up bodies and brains, but once I plug up all of the philosophical leaks of circular reasoning and begging the question, I can think of no plausible reason why an unconscious body or brain would or could dream.

All of the reasons that I have ever heard arise as post hoc justifications that betray an unscientific bias toward mechanism. In a way, the idea of mechanism as omnipotent is even more bizarre than the idea of an omnipotent deity, since the whole point of a mechanistic view of nature is to replace undefined omnipotence with robustly defined, rationally explained parts and powers. If we are just going to say that emergent phenomenal magic happens once the number of shapes or data relations is so large that we don’t want to deny any power to it, we are really just reinventing religious faith in an inverted form. It is to say that sufficiently complex computations transcend computation for reasons that transcend computation.

(JB) “The movie is a complete simulation, a VR that is running in the neocortex.”

CW – We have the experience of playing computer games using a video screen, so we conflate a computer program with a video screen’s ability to render visible shapes. In fact, it is our perceptual relationship with a video screen that is doing the most critical part of the simulating. The computer by itself, without any device that can produce visible color and contrast, would not fool anyone. There’s no parsimonious or plausible way to justify giving the physical states of a computing machine aesthetic qualities unless we are expecting aesthetic qualities from the start. In that case, there is no honest way to call them mere computers.

(JB) “In that character, the brain writes our experiences, so we *feel* what it’s like to be exposed to the reward function. We feel what it’s like to be in our universe.”

CW – Computer programs don’t need desires or rewards though. Programs are simply executed by physical force. Algorithms don’t need to serve a purpose, nor do they need to be enticed to serve a purpose. There’s no plausible, parsimonious reason for the brain to write its predictive algorithms or meta-algorithms as anything like a ‘feeling’ or sensation. All that is needed for a brain is to store some algorithmically compressed copy of its own brain state history. It wouldn’t need to “feel” or feel “what it’s like”, or feel what it’s like to “be in a universe”. These are all concepts that we’re smuggling in, post hoc, from our personal experience of feeling what it’s like to be in a universe.

(JB) “We don’t feel that we are a story because that is not very useful knowledge to have. Some people figure it out and they depersonalize. They start identifying with the mind itself or lose all identification.”

CW – It’s easy to say that it’s not very useful knowledge if it doesn’t fit our theory, but we need to test for that bias scientifically. It might just be that people depersonalize or have negative results to the idea that they don’t really exist because it is false, and false in a way that is profoundly important. We may be as real as anything ever could be, and there may be no ‘simulation’ except via the power of imagination to make believe.

(JB) “The self is the thing that thinks that it remembers the contents of its attention. This is why we are conscious.”

CW – I don’t see a logical need for that. Attention need not logically facilitate any phenomenal properties. Attention can just as easily be purely behavioral, as can ‘memory’, or ‘models’. A mechanism can be triggered by groups of mechanisms acting simultaneously without any kind of semantic link defining one mechanism as a model for something else. Think of it this way: What if we wanted to build an AI without ANY phenomenal experience? We could build a social chameleon machine, a sociopath with no model of self at all, but instead a set of reflex behaviors that mimic those of others which are deemed to be useful for a given social transaction.

(JB) “A physical system cannot be conscious, only a simulation can be conscious.”

CW – I agree this is an improvement over the idea that physical systems are conscious. What would it mean for a ‘simulation’ to exist in the absence of consciousness though? A simulation implies some conscious audience which participates in believing or suspending disbelief in the reality of what is being presented. How would it be possible for a program to simulate part of itself as something other than another (invisible, unconscious) program?

(JB) “Consciousness is a simulated property of a simulated self.”

CW – I turn that around 180 degrees. Consciousness is the sole absolutely authentic property. It is the base level sanity and sense that is required for all sense-making to function on top of. The self is the ‘skin in the game’ – the amplification of consciousness via the almost-absolutely realistic presentation of mortality.

KD – So in a way, Daniel Dennett is correct?

JB – Yes,[…] but the problem is that the things that he says are not wrong, but they are also not non-obvious. It’s valuable because there are no good or bad ideas. It’s a good idea if you comprehend it and it elevates your current understanding. In a way, ideas come in tiers. The value of an idea for the audience is if it’s a half tier above the audience. You and me have an illusion that we find objectively good ideas, because we work at the edge of our own understanding, but we cannot really appreciate ideas that are a couple of tiers above our own ideas. One tier is a new audience, two tiers means that we don’t understand the relevance of these ideas because we don’t have the ideas that we need to appreciate the new ideas. An idea appears to be great to us when we can stand right in its foothills and look at it. It doesn’t look great anymore when we stand on the peak of another idea and look down and realize the previous idea was just the foothills to that idea.

KD – Discusses the problems with the commercialization of academia and the negative effects it has on philosophy.

JB – Most of us never learn what it really means to understand, largely because our teachers don’t. There are two types of learning. One is you generalize over past examples, and we call that stereotyping if we’re in a bad mood. The other tells us how to generalize, and this is indoctrination. The problem with indoctrination is that it might break the chain of trust. If someone doesn’t check the epistemology of the people that came before them, and take their word as authority, that’s a big difficulty.

CW – I like the idea of tiers because it confirms my suspicion that my ideas are two or three tiers above everyone else’s. That’s why y’all don’t get my stuff…I’m too far ahead of where you’re coming from. 🙂

1:07:00 Discussion about Ray Kurzweil, the difficulty in predicting timeline for AI, confidence, evidence, outdated claims and beliefs etc.

1:19 JB – The first stage of AI: Finding things that require intelligence to do, like playing chess, and then implementing them as algorithms. Manually engineering strategies for being intelligent in different domains. This didn’t scale up to General Intelligence.

We’re now in the second phase of AI, building algorithms to discover algorithms. We build learning systems that approximate functions. He thinks deep learning should be called compositional function approximation: using networks of many functions instead of tuning single regressions.
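As a rough illustration of that contrast (my own toy sketch, not anything JB presented), here is a single straight-line regression next to a composition of two very simple functions, both aimed at the target |x|. The helper names `fit_line`, `relu` and `composed` are hypothetical, and the weights in the composed version are set by hand rather than learned, so this only shows what composition can express, not how a network would discover it.

```python
def relu(z):
    """Rectified linear unit: passes positive values, clamps negatives to 0."""
    return max(0.0, z)


def fit_line(xs, ys):
    """Ordinary least-squares fit of y = a*x + b (a single regression)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    var_x = sum((x - mean_x) ** 2 for x in xs)
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    a = cov_xy / var_x
    b = mean_y - a * mean_x
    return a, b


def composed(x):
    """Two simple units added together: relu(x) + relu(-x) equals |x|.

    The weights (+1 and -1) are hand-picked for illustration, not learned.
    """
    return relu(x) + relu(-x)


def max_error(model, xs, ys):
    """Largest absolute gap between the model and the target on the samples."""
    return max(abs(model(x) - y) for x, y in zip(xs, ys))


# Target function: absolute value, sampled symmetrically around zero.
xs = [-2.0, -1.0, 0.0, 1.0, 2.0]
ys = [abs(x) for x in xs]

a, b = fit_line(xs, ys)
line_error = max_error(lambda x: a * x + b, xs, ys)
composed_error = max_error(composed, xs, ys)

print("single regression max error:", line_error)
print("composed functions max error:", composed_error)
```

On these samples the best single line is flat and misses the V shape, while the two composed units reproduce it. Whether that kind of approximation amounts to understanding is the separate question argued over in the rest of this discussion.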

There could be a third phase of AI where we build meta-learning algorithms. Maybe our brains are meta-learning machines, not just learning stuff but learning ways of discovering how to learn stuff (for a new domain). At some point there will be no more phases and science will effectively end because there will be a general theory for global optimization with finite resources and all science will use that algorithm.

CW – I think that the more experience we gain with AI, the more we will see that it is limited in ways that we have not anticipated, and also that it is powerful in ways that we have not anticipated. I think that we will learn that intelligence as we know it cannot be simulated; however, in trying to simulate it, we will have developed something powerful, new, and interesting in its impersonal orthogonality to personal consciousness. The revolution may not be about the rise of computers becoming like people but about a rise in appreciation for the quality and richness of personal conscious experience in contrast to the impersonal services and simulations that AI delivers.

1:23 KD – Where does ethics fit, or does it?

JB – Ethics is often misunderstood. It’s not about being good or emulating a good person. Ethics emerges when you conceptualize the world as different agents, and yourself as one of them, and you share purposes with the other agents but you have conflicts of interest. If you think that you don’t share purposes with the other agents, if you’re just a lone wolf, and the others are your prey, there’s no reason for ethics – you only look for the consequences of your actions for yourself with respect to your own reward functions. It’s not ethics though – not a shared system of negotiation because only you matter, because you don’t share a purpose with the others.

KD – It’s not shared but it’s your personal ethical framework, isn’t it?

JB – It has to be personal. I decided not to eat meat because I felt that I shared a purpose with animals: the avoidance of suffering. I also realized that it is not mutual. Cows don’t care about my suffering. They don’t think about it a lot. I had to think about the suffering of cows so I decided to stop eating meat. That was an ethical decision. It’s a decision about how to resolve conflicts of interest under conditions of shared purpose. I think this is what ethics is about. It’s a rational process in which you negotiate with yourself and with others, the resolution of conflicts of interest under contexts of shared purpose. I can make decisions about what purposes we share. Some of them are sustainable and others are not – they lead to different outcomes. In a sense, ethics requires that you conceptualize yourself as something above the organism; that you identify with the systems of meanings above yourself so that you can share a purpose. Love is the discovery of shared purpose. There needs to be somebody you can love that you can be ethical with. At some level you need to love them. You need to share a purpose with them. Then you negotiate, you don’t want them all to fail in all regards, and yourself. This is what ethics is about. It’s computational too. Machines can be ethical if they share a purpose with us.

KD – Other considerations: Perhaps ethics can be a framework within which two entities that do not share interests can negotiate and peacefully coexist, while still not sharing interests.

JB – Not interests but purposes. If you don’t share purposes then you are defecting against your own interests when you don’t act on your own interest. It doesn’t have integrity. You don’t share a purpose with your food, other than that you want it to be nice and edible. You don’t fall in love with your food, it doesn’t end well.

CW – I see this as a kind of game-theoretic view of ethics…which I think is itself (unintentionally) unethical. It is true as far as it goes, but it makes assumptions about reality that are ultimately inaccurate, as they begin by defining reality in the terms of a game. I think this automatically elevates the intellectual function and its objectivizing/controlling agendas at the expense of the aesthetic/empathetic priorities. What if reality is not a game? What if the goal is not to win by being a winner but to improve the quality of experience for everyone and to discover and create new ways of doing that?

Going back to JB’s initial comment that ethics are not about being good or emulating a good person, I’m not sure about that. I suspect that many people, especially children, will be ethically shaped by encounters with someone, perhaps in the family or a character in a movie, who appeals to them and who inspires imitation. Whether their appeal is as a saint or a sinner, something about their style, the way they communicate or demonstrate courage may align the personal consciousness with transpersonal ‘systems of meanings above’ themselves. It could also be a negative example that someone encounters: someone that you hate who inspires you to embody the diametrically opposite aesthetics and ideals.

I don’t think that machines can be ethical or unethical, not because I think humans are special or better than machines, but out of simple parsimony. Machines don’t need ethics. They perform tasks, not for their own purposes, or for any purpose, but because we have used natural forces and properties to perform actions that satisfy our purposes. Try as we might (and I’m not even sure why we would want to try), I do not think that we will succeed in changing matter or computation into something which both can be controlled by us and which can generate its own purposes. I could be wrong, but I think this is a better reason to be skeptical of AI than any reason that computation gives us to be skeptical of consciousness. It also seems to me that the aesthetic power of a special person who exemplifies a particular set of ethics can be taken to be a symptom of a larger, absolute aesthetic power in divinity or in something like absolute truth. This doesn’t seem to fit the model of ethics as a game-theoretic strategy.

JB – Discussion about eating meat, offers an example pro-argument: it could be said that pasture-raised cows could have a net positive life experience, since they would not exist but for being raised as food. Their lives are good for them except for the last day, which is horrible, but usually horrible for everyone. Should we change ourselves or change cattle to make the situation more bearable? We don’t want to look at it because it is un-aesthetic. Ethics in a way is difficult.

KD – That’s the key point of ethics. It sometimes requires that we make choices that are perhaps not in our own best interests.

JB – Depends on how we define ourselves. We could say that self is identical to the well being of the organism, but this is a very short-sighted perspective. I don’t actually identify all the way with my organism. There are other things – I identify with society, my kids, my relationships, my friends, their well being. I am all the things that I identify with and want to regulate in a particular way. My children are objectively more important than me. If I have to make a choice whether my kids survive or myself, my kids should survive. This is as it should be if nature has wired me up correctly. You can change the wiring, but this is also the weird thing about ethics. Ethics becomes very tricky to discuss once the reward function becomes mutable. When you are able to change what is important to you, what you care about, how do you define ethics?

CW – And yet, the reward function is mutable in many ways. Our experience in growing up seems to be marked by a changing appreciation for different kinds of things, even in deriving reward from controlling one’s own appetite for reward. The only constant that I see is in phenomenal experience itself. No matter how hedonistic or ascetic, how eternalist or existential, reward is defined by an expectation for a desired experience. If there is no experience that is promised, then there is no function for the concept of reward. Even in acts of self-sacrifice, we imagine that our action is justified by some improved experience for those who will survive after us.

KD – I think you can call it a code of conduct or a set of principles and rules that guide my behavior to accomplish certain kinds of outcomes.

JB – There are no beliefs without priors. What are the priors that you base your code of conduct on?

KD – The priors or axioms are things like diminishing suffering or taking an outside/universal view. When it comes to (me not eating meat), I take a view that is hopefully outside of me and the cows. I’m able to look at the suffering of eating a cow and their suffering of being eaten. If my prior is ‘minimize suffering’, because my test criterion for a sentient being is ‘can it suffer?’, then minimizing suffering must be my guiding principle in how I relate to another entity. Basically, everything builds up from there.

JB – The most important part of becoming an adult is taking charge of your own emotions – realizing that your emotions are generated by your own brain/organism, and that they are here to serve you. You’re not here to serve your emotions. They are here to help you do the things that you consider to be the right things. That means that you need to be able to control them, to have integrity. If you are just a victim of your emotions, and don’t do the things that you know are the right things, you don’t have integrity. What is suffering? Pain is the result of some part of your brain sending a teaching signal to another part of your brain to improve its performance. If the regulation is not correct, because you cannot actually regulate that particular thing, the pain signal will usually endure and increase until your brain figures it out and turns off the brain signaling center, because it’s not helping. In a sense suffering is a lack of integrity. The difficulty is only that many beings cannot get to the degree of integrity that they can control the application of learning signals in their brain…control the way that their reward function is computed and distributed.

CW – My criticism is the same as in the other examples. There’s no logical need for a program or machine to invent ‘pain’ or any other signal to train or teach. If there is a program to run an animal’s body, the program need only execute those functions which meet the criteria of the program. There’s no way for a machine to be punished or rewarded because there’s no reason for it to care about what it is doing. If anything, caring would impede optimal function. If a brain doesn’t need to feel to learn, then why would a brain’s simulation need to feel to learn?

KD – According to your view, suffering is a simulation or part of a simulation.

JB – Everything that we experience is a simulation. We are a simulation. To us it feels real. There is no getting around this. I have learned in my life that all of my suffering is a result of not being awake. Once I wake up, I realize what’s going on. I realize that I am a mind. The relevance of the signals that I perceive is completely up to the mind. The universe does not give me objectively good or bad things. The universe gives me a bunch of electrical impulses that manifest in my thalamus, and my brain makes sense of them by creating a simulated world. The valence in that simulated world is completely internal – it’s completely part of that world, it’s not objective…and I can control this.

KD – So you are saying suffering is subjective?

JB – Suffering is real to the self with respect to ethics, but it is not immutable. You can change the definition of your self, the things that you identify with. We don’t have to suffer about things, political situations for example, if we recognize them to be mechanical processes that happen regardless of how we feel about them.

CW – The problem with the idea of simulation is that we are picking and choosing which features of our experience are more isomorphic to what we assume is an unsimulated reality. Such an assumption is invariably a product of our biases. If we say that the world we experience is a simulation running on a brain, why not also say that the brain is a simulation running on something else? Why not say that our experience of success with manipulating our own experience of suffering is as much of a simulation as the original suffering was? At some point, something has to genuinely sense something. We should not assume that just because our perception can be manipulated we have used manipulation to escape from perception. We may perceive that we have escaped one level of perception, or objectified it, but this too must be presumed to be part of the simulation. Perception can only seem to have been escaped in another perception. The primacy of experience is always conserved.

I think that it is the intellect that is over-valuing the significance of ‘real’ because of its role in protecting the ego and the physical body from harm, but outside of this evolutionary warping, there is no reason to suspect that the universe distinguishes in an absolute sense between ‘real’ and ‘unreal’. There are presentations – sights, sounds, thoughts, feelings, objects, concepts, etc., but the realism of those presentations can only be made of the same types of perceptions. We see this in dreams, with false awakenings etc. Our dream has no problem with spontaneously confabulating experiences of waking up into ‘reality’. This is not to discount the authenticity of waking up in ‘actual reality’, only to say that if we can tell that it is authentic, then it necessarily means that our experience is not detached from reality completely and is not meaningfully described as a simulation. There are some recent studies that suggest that our perception may be much closer to ‘reality’ than we thought, i.e. that we can train ourselves to perceive quantum level changes.