A Multisense Realist Critique of “Human and Machine Consciousness as a Boundary Effect in the Concept Analysis Mechanism”

Let me begin by saying that my criticism of the thoughts, ideas, and assumptions behind this hypothesis on the Hard Problem (and of all such hypotheses) does not in any way constitute a challenge to the expertise or intelligence of its authors. I have the utmost respect for anyone who takes the time to thoughtfully formulate an opinion on the matter of consciousness, and I do not in any way place myself on the same intellectual level as those who have spent their careers achieving a level of skill and knowledge of mathematics and technology that is well beyond my grasp.

I do have a lifelong interest in the subject of consciousness and most of a lifetime of experience with computer technology. That experience, however, is much more limited in scope and depth than that of full-time, professional developers and engineers. Having said that, and hopefully without inviting accusations of succumbing to the Dunning-Kruger effect, I dare to wonder if abundant expertise in computer science may impair our perception in this area as well, and I would desperately like to see studies performed to evaluate the cognitive bias of those scientists and philosophers who see the Hard Problem of Consciousness as a pseudo-issue that can be easily dismissed by reframing the question.

Let me begin now in good faith to mount an exhaustive and relentless attack on the assumptions and conclusions presented in the following: “Chapter 15: Human and Machine Consciousness as a Boundary Effect in the Concept Analysis Mechanism” by Richard Loosemore (PDF link), from the book Theoretical Foundations of Artificial General Intelligence, edited by Pei Wang and Ben Goertzel. I hope that this attack is not so annoying, exhausting, or offensive that it prevents readers from engaging with it and from considering the negation/inversion of the fundamental premises that it relies upon.

From the very top of the first page…

“To solve the hard problem of consciousness we observe that any cognitive system of sufficient power must get into difficulty when it tries to analyze consciousness concepts, because the mechanism that does the analysis will “bottom out” in such a way as to make the system declare these concepts to be both real and ineffable.”

Objections:

1: The phenomenon of consciousness (as distinct from the concept of consciousness) is the only possible container for qualities such as “real” or “ineffable”. It is a mistake to expect the phenomenon itself to be subject to the categories and qualities which are produced only within consciousness.

2: Neither my analysis of the concept of consciousness nor my analysis of the phenomenon ‘bottoms out’ in the way described. I would say that consciousness is real, more than real, less than real, effable, semi-effable, and trans-effable, but not necessarily ineffable. Consciousness is the aesthetic-participatory nesting of sensory-motive phenomena from which all other phenomena are derived and maintained, including anesthetic, non-participatory, non-sensory, and non-motivated appearances such as those of simple matter and machines.

“This implies that science must concede that there are some aspects of the world that deserve to be called “real”, but which are beyond explanation.”

Here my understanding is that attempting to explain (ex-plain) certain aspects of consciousness is redundant since they are already ‘plain’. Blue is presented directly as blue. It is a visible phenomenon which is plain to all those who can see it and unexplainable to all those who cannot. There is nothing to explain about the phenomenon itself, as any such effort would only make the assumption that blue can be decomposed into other phenomena which are not blue. There is an implicit double standard in such assumptions: any of the other phenomena which we might use to account for the existence of blue would themselves require explanations that decompose them further. How do we know that we are even reading words that mean what they mean to another person? As long as a sense of coherence is present, even the most surreal dream experiences can be validated within the dream as perfectly rational and real.

Even the qualifier “real” is meaningless outside of consciousness. There can be no physical or logical phenomenon which is unreal or can ‘seem’ other than it is without consciousness to provide the seeming. The entire expectation of seeming is an artifact of some limitation on a scope of perception, not of physical or logical fact.

“Finally, behind all of these questions there is the problem of whether we can explain any of the features of consciousness in an objective way, without stepping outside the domain of consensus-based scientific enquiry and becoming lost in a wilderness of subjective opinion.”

This seems to impose a doomed constraint onto any explanation in advance. Since the distinction between subjective and objective can only exist within consciousness, consciousness cannot presume to transcend itself by limiting its scope to only those qualities which consciousness itself deems ‘objective’. There is no objective arbiter of objectivity, and presuming that such a standard is equivalent to or available through our scientific legacy of consensus is especially biased, given that tradition’s intentional reliance on instruments and methods which are designed to exclude all association with subjectivity.* To ask that an explanation of consciousness be limited to consensus science is akin to asking “Can we explain life without referring to anything beyond the fossil record?” In my understanding, science itself must expand radically to approach the phenomenon of consciousness, rather than consciousness having to be reduced to fit into our cumulative expectations about nature.

“One of the most troublesome aspects of the literature on the problem of consciousness is the widespread confusion about what exactly the word “consciousness” denotes.”

I see this as a sophist objection (ironically, I would also say that this all-too-common observation is one of the most troublesome aspects of materialistic arguments against the hard problem). Personally, I have no confusion whatsoever about what the common-sense term ‘consciousness’ refers to, and neither does anyone else when it comes to the actual prospect of losing consciousness. When someone is said to have lost consciousness forever, what is lost? The totality of experience. Everything would be lost for the person whose consciousness is truly and completely lost forever. All that remains of that person would be the bodily appearances and memories in the conscious experiences of others (doctors, family members, cats, dust mites, etc). If all conscious experience were to terminate forever, what remained would be impossible to distinguish from nothing at all. Indeed there would be no remaining capacity to ‘distinguish’ either.

I will skip over the four bullet points from Chalmers’ work in 15.1.1 (The ability to introspect or report mental states…etc), as I see them as distractions arising from specific use cases of language and the complex specifics of human psychology rather than from the simple/essential nature of consciousness as a phenomenon.

Moving on to what I see as the meat of the discussion – qualia. In this next section, much is made about the problems of communicating with others about specific phenomenal properties. I see this as another distraction and if we interrogate this definition of qualia as that which “we cannot describe to a creature that does not claim to experience them”, we will find that it is a condition which everything in the universe fits just as well.

We cannot describe numbers, or gravity, or matter to a creature that does not claim to experience them either. Ultimately the only difference between qualia and non-qualia is that non-qualia only exist hypothetically. Things which are presumed to exist independently of subjectivity, such as matter, energy, time, space, and information are themselves concepts derived from intersubjective consensus. Just as the Flatlander experiences a sphere only as a circle of changing size, our entire view of objective facts and their objectiveness is objectively limited to those modalities of sense and sense-making which we have access to. There is no universe which is real that we could not also experience as the content of a (subjective) dream and no way to escape the constraints that a dream imposes even on logic, realism, and sanity themselves. A complete theory of consciousness cannot merely address the narrow kind of sanity that we are familiar with as thinking adults conditioned by the accumulative influence of Western society; it must also address non-ordinary experiences, mystical states of consciousness, infancy, acquired savant syndrome, veridical NDEs and reincarnation accounts, and on and on.

“a philosophical zombie – would behave as if it did have its own phenomenology (indeed its behavior, ex hypothesi, would be absolutely identical to its normal twin) but it would not experience any of the subjective sensations that we experience when we use our minds”

As much as I revere David Chalmers’ brilliant insights into the Hard Problem which he named, I see the notion of a philosophical zombie as flawed from the start. While we can imagine that two biological organisms are physically identical with and without subjective experience, there is no reason to insist that they must be.

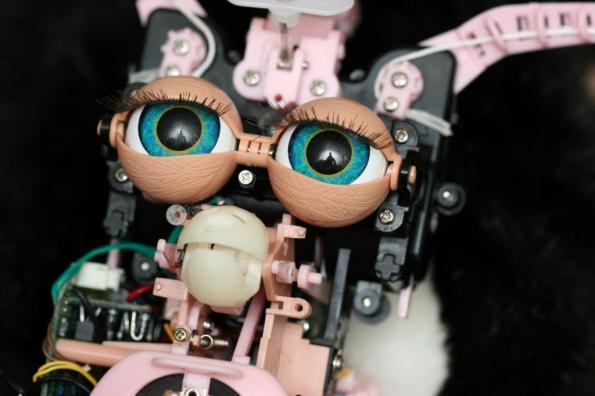

I would pose it differently and ask “Can a doll be created that would seem to behave in every respect like a human being, but still be only a doll?” To that question I would respond that there is no logical reason to deny that possibility; however, we also cannot deny the possibility that there are some people who at some time might not be able to feel an ‘uncanny’ sense about such a doll, even if they are not consciously able to notice that sense. The world is filled with examples of people who can pretend and act as if they are experiencing subjective states that they are not. Professional actors and sociopaths, for example, are famously able to simulate deep sentiment and emotion, summoning tears on command, etc. I would ask of the AI dev community, what if we wanted to build an AGI simulator which did not have any qualia? Suppose we wanted to study the effects of torture, could we not hope to engineer a device or program which would allow us to understand some of the effects without having to actually subject a conscious device to excruciating pain? If so, then we cannot presume qualia to emerge automatically from structure or function. We have to have a better understanding of why and how qualia exist in the first place. That is the hard problem of consciousness.

“Similarly, if we know that wires from a red color-detection module are active, this tells us the cognitive level fact that the machine is detecting red, but it does not tell us if the machine is experiencing a sensation of redness, in anything like the way that we experience redness.”

Here I suggest that in fact the machine is not detecting red at all, but it is detecting some physical condition that corresponds to some of our experiences of seeing red, i.e. the open-eye presence of red which correlates to 680 nm wavelength electromagnetic stimulation of retinal cells. Since many people can dream and imagine red in the absence of such ophthalmological stimulation**, we cannot equate that detection with red at all.

Further, I would not even allow myself to assume that what a retinal cell or any physical instrument does in response to illumination automatically constitutes ‘detection’. Making such leaps is, in my understanding, precisely how our thinking about the hard problem of consciousness slips into circular reasoning. To see any physical device as a sensor or sense organ is to presume a phenomenological affect on a micro scale, as well as a mechanical effect described by physical force/field mathematics. If we define forces and fields purely as mechanical facts with no sensory-motive entailment, then it follows logically that no complex arrangement of such force-field mechanisms would necessarily result in any addition or emergence of such an entailment. If shining a light on a molecule changes the shape or electrical state of that molecule, every subsequent chain of physical changes effected by that cause will occur with or without any experience of redness. Any behavior that a human body or any species of body can evolve to perform could just as easily have evolved to be performed without anything but unexperienced physical chain reactions of force and field.

“The trouble is that when we try to say what we mean by the hard problem, we inevitably end up by saying that something is missing from other explanations. We do not say ‘Here is a thing to be explained,’ we say ‘We have the feeling that there is something that is not being addressed, in any psychological or physical account of what happens when humans (or machines) are sentient.’”

To me, this is a false assumption that arises from an overly linguistic approach to the issue. I do in fact say “Here is a thing to be explained”. In fact, I could use that very same word “here” as an example of that thing. What is the physical explanation for the referent of the term “here”? What gives a physical event the distinction of being ‘here’ versus ‘there’?

The presence of something like excruciating pain can’t be dismissed on account of a compulsion to assume that ‘Ouch!’ needs to be deconstructed into nociception terminology. I would turn this entire description of ‘The trouble’ around to ask the author why they feel that there is something about pain that is not communicated to anyone who experiences it directly, and how anything meaningful about that experience could be addressed by other, non-painful accounts.

On to the dialectic between skeptic and phenomenologist:

“The difficulty we have in supplying an objective definition should not be taken as grounds for dismissing the problem—rather, this lack of objective definition IS the problem!”

I’m not sure why a phenomenologist would say that. To me, the hard problem of consciousness has nothing at all to do with language. We have no problem communicating “Ouch!” any more than we have in communicating ” “. The only problem is in the expectation that all terms should translate into all languages. There is no problem with reducing a subjective quality of phenomenal experience into a word or gesture – the hard problem is why and how there should be any inflation of non-phenomenal properties to ‘experience’ in the first place. I don’t find it hard to articulate, though many people do seem to have a hard time accepting that it makes sense.

“In effect, there are certain concepts that, when analyzed, throw a monkey wrench into the analysis mechanism”

I would reconstruct that observation this way: “In effect, there are certain concepts that, when analyzed, point to facts beyond the analysis mechanism, and further beyond mechanism and analysis. These are the facts of qualia from which the experiences of analysis and mechanical appearance are derived.”

“All facets of consciousness have one thing in common: they involve some particular types of introspection, because we “look inside” at our subjective experience of the world”

Not at all. Introspection is clearly dependent on consciousness, but so are all forms of experience. Introspection does not define consciousness, it is only a conscious experience of trying to make intellectual sense of one’s own conscious experience. Looking outside requires as much consciousness as looking inside and unconscious phenomena don’t ‘look’.

From that point in the chapter, there is a description of some perfectly plausible ideas about how to design a mechanism which would appear to us to simulate the behaviors of an intelligent thinker, but I see no connection between such a simulation and the hard problem of consciousness. The premise underestimates consciousness to begin with and then goes on to speculate on how to approximate that disqualified version of qualia production, consistently mistaking qualia for ‘concepts’ that cannot be described.

Pain is not a concept, it is a percept. Every function of the machine described could just as easily be presented as hexadecimal code, words, binary electronic states, etc. A machine could put together words that we recognize as having to do with pain, but that sense need not be available to the machine. In the mechanistic account of consciousness, sensory-motive properties are taken for granted and aesthetic-participatory elaborations of those properties that we would call human consciousness are misattributed to the elaborations of mechanical process. That “blue” cannot be communicated to someone who cannot see it does not define what blue is. Building a machine that cannot explain what is happening beyond its own mechanism doesn’t mean that qualia will automatically appear to stand in for that failure. Representation requires presentation, but presentation does not require representation. Qualia are presentations, including the presentation of representational qualities between presentations.

“Yes, but why would that short circuit in my psychological mechanism cause this particular feeling in my phenomenology?”

Yes, exactly, but that’s still not the hard problem. The hard problem is “Why would a short circuit in any mechanism cause any feeling or phenomenology in the first place? Why would feeling even be a possibility?”

“The analysis mechanism inside the mind of the philosopher who raises this objection will then come back with the verdict that the proposed explanation fails to describe the nature of conscious experience, just as other attempts to explain consciousness have failed. The proposed explanation, then, can only be internally consistent with itself if the philosopher finds the explanation wanting. There is something wickedly recursive about this situation.”

Yes, it is wickedly recursive in the same exact way that any blind faith/Emperor’s New Clothes persuasion is wickedly recursive. What is proposed here can be used to claim that any false theory about consciousness which predicts that it will be perceived as false is evidence of its (mystical, unexplained) essential truth. It is the technique of religious dogma in which doubt is defined as evidence of the unworthiness of the doubter to deserve to understand why it isn’t false.

“I am not aware of any objection to the explanation proposed in this chapter that does not rely for its force on that final step, when the philosophical objection deploys the analysis mechanism, and thereby concludes that the proposal does not work because the analysis mechanism in the head of the philosopher returned a null result.”

Let me try to make the reader aware of one such objection then. I do not use an analysis mechanism, I use the opposite – an anti-mechanism of direct participation that seeks to discover greater qualities of sense and coherence for their own aesthetic saturation. That faculty of my consciousness does not return a null result, it has instead returned a rich cosmogony detailing the relationships between a totalistic spectrum of aesthetic-participatory nestings of sensory-motive phenomena, and its dialectic, diffracted altars; matter (concrete anesthetic appearances) and information (abstract anesthetic appearances).

“I am now going to make a case that all of the various subjective phenomena associated with consciousness should be considered just as “real” as any other phenomena in the universe, but that science and philosophy must concede that consciousness has the special status of being unanalyzable.”

I’m glad that qualia are at least given a ‘real’ status! I don’t see that it’s unanalyzable though. I analyze qualia all the time. I think the limitation is that the analysis doesn’t translate into math or geometry…which is exactly what I would expect because I understand the role of math and geometry to be precisely the qualia which are presented to represent the disqualification of alienated/out of bounds qualia. We don’t experience on a geological timescale, so our access to experiences on that scale is reduced to a primitive vocabulary of approximations. I suggest that when two conscious experiences of vastly disparate timescales engage with each other, there is a mutual rendering of each other as either inanimate or intangible…as matter/object or information/concept.

In the latter parts of this chapter, the focus is on working with the established hypothesis of qualia as bottomed-out mechanical analysis. The irony of this is that I can see clearly that it is math and physics, mechanism and analysis which are the qualia of bottomed out direct perception. The computationalist and physicalist both have got the big picture turned inside out, where the limitations of language and formalism are hallucinated into sources of infinite aesthetic creativity. Sight is imagined to emerge naturally from imperfect blindness. It’s an inversion of map and territory on the grandest possible scale.

“When we say that a concept is more real the more concrete and tangible it is, what we actually mean is that it gets more real the closer it gets to the most basic of all concepts. In a sense there is a hierarchy of realness among our concepts, with those concepts that are phenomenologically rich being the most immediate and real, and with a decrease in that richness and immediacy as we go toward more abstract concepts.”

To the contrary, when we say that a concept is more real the more concrete and tangible it is, what we actually mean is that it gets more real the further it gets from the most abstract of all qualia: concepts. No concepts are as phenomenologically rich, immediate, and real as literally everything that is not a concept.

“This seems to me a unique and unusual compromise between materialist and dualist conceptions of mind. Minds are a consequence of a certain kind of computation; but they also contain some mysteries that can never be explained in a conventional way.”

Here too, I see that the opposite clearly makes more sense. Computation is a consequence of certain kinds of reductive approximations within a specific band of consciousness. To compute or calculate is actually the special and (to us) mysterious back door to the universal dream which enables dreamers to control and objectify aspects of their shared experience.

I do love all of the experiments proposed toward the end, although it seems to me that all of the positive results could be simulated by a device that is designed to simulate the same behaviors without any qualia. Of all of the experiments, I think that the mind-meld is most promising as it could possibly expose our own consciousness to phenomena beyond our models and expectations. We may be able, for example, to connect our brain to the brain of a fish and really be able to tell that we are feeling what the fish is feeling. Because my view of consciousness is that it is absolutely foundational, all conscious experience overlaps at that fundamental level in an ontological way rather than merely as a locally constructed model. In other words, while some aspects of empathy may consist only of modeling the emotions of another person (as a sociopath might do), I think that there is a possibility for genuine empathy to include a factual sharing of experience, even beyond assumed boundaries of space, time, matter, and energy.

Thank you for taking the time to read this. I would not bother writing it if I didn’t think that it was important. The hard problem of consciousness may strike some as an irrelevant, navel-gazing debate, but if I am on the right track in my hypothesis, it is critically important that we get this right before attempting to modify ourselves and our civilization based on a false assumption of qualia as information.

Respectfully and irreverently yours,

Craig Weinberg

*This point is addressed later on in the chapter: “it seems almost incoherent to propose a scientific (i.e. non-subjective) explanation for consciousness (which exists only in virtue of its pure subjectivity).”

**Not to mention the reports from people blind from birth of seeing colors during Near Death Experiences.

I don’t think we could ever tell what a fish is experiencing, only what the fish is “sort-of” experiencing as we would never be able to lose our linguistic self totally in the mind-meld process; or if we did we couldn’t know it because the part of my self that understands the concept “this is what being a fish is like” can never be eliminated lest the understanding be as well, and then you’d just be a fish. (Perhaps you could have a vague memory of fish life once, much as we have vague memories of infant life.) What it’s ABSOLUTELY like to be a fish (if it’s like anything at all) can be known only to the fish. “If a Lion could talk we could not understand him” goes the famous Wittgenstein quote, but in reality if a Lion could speak, one, he wouldn’t be a lion anymore, and two, we’d have to be able to understand him.

Yes, I agree!