
Wittgenstein, Physics, and Free Will

JE: My experience from talking to philosophers is that Wittgenstein’s view is certainly contentious. There seem to be two camps. There are those seduced by his writing who accept his account and there are others who, like me, feel that Wittgenstein expressed certain fairly trivial insights about perception and language that most people should have worked out for themselves and then proceeded to draw inappropriate conclusions and screw up the progress of contemporary philosophy for fifty years. This latter would be the standard view amongst philosophers working on biological problems in language as far as I can see.

Wittgenstein is right to say that words have different meanings in different situations – that should be obvious. He is right to say that contemporary philosophers waste their time using words inappropriately – anyone from outside sees that straight away. But his solution – to say that the meaning of words is just how they are normally used – is no solution. It turns out to be a smoke screen that allows him to indulge his own prejudices and not engage in productive explanation of how language actually works inside brains.

The problem is a weaseling going on that, as I indicated before, leads to Wittgenstein encouraging the very crime he thought he was clever to identify. The meaning of a word may ‘lie in how it is used’ in the sense that the occurrences of words in talk are functionally connected to the roles words play in internal brain processes and relate to other brain processes, but this is trivial. To say that meaning is use is, as I said, clearly a route to the W crime itself. If I ask how you know that meaning means use, you will reply that a famous philosopher said so. Maybe he did, but he also said that words do not have unique meanings defined by philosophers – they are used in all sorts of ways and there are all sorts of meanings of meaning that are not ‘use’, as anyone who has read Grice or Chomsky will have come to realise. Two meanings of a word may be incompatible yet it may be well nigh impossible to detect this from use – the situation I think we have here. The incompatibility only becomes clear if we rigorously explore what these meanings are. Wittgenstein is about as much help as a label on a packet of pills that says ‘to be taken as directed’.

But let’s be Wittgensteinian and play a language game of ordinary use, based on the family resemblance thesis. What does choose mean? One meaning might be to raise in the hearer the thought of having a sense of choosing. So a referent of ‘choose’ is an idea or experience that seems to be real and I think must be. But we were discussing what we think that sense of choosing relates to in terms of physics. We want to use ‘choose’ to indicate some sort of causal relation or an aspect of causation, or if we are a bit worried about physics still having causes we could frame it in terms of dynamics or maybe even just connections in a spacetime manifold. If Wheeler thinks choice is relevant to physics he must think that ‘choose’ can be used to describe something of this sort, as well as the sense of choosing.

So, as I indicated, we need to pin down what that dynamic role might be. And I identified the fact that the common presumption about this is wrong. It is commonly thought that choosing is being in a situation with several possible outcomes. However, we have no reason to think that. The brain may well not be purely deterministic in operation. Quantum indeterminacy may amplify up to the level of significant indeterminacy in such a complex system with such powerful amplification systems at work. However, this is far from established and anyway it would have nothing to do with our idea of choosing if it were just a level of random noise. So I think we should probably work on the basis that the brain is in fact as tightly deterministic as matters here. This implies that in the situation where we feel we are choosing THERE IS ONLY ONE POSSIBLE OUTCOME.

The problem, as I indicated, is that there seem to be multiple possible outcomes to us because we do not know how our brain is going to respond. Because this lack of knowledge is a standard feature of our experience, our idea of ‘a situation’ is better thought of as ‘an example of an ensemble of situations that are indistinguishable in terms of outcome’. If I say that when I get to the main road I can turn right or left, I am really saying that I predict an instance of an ensemble of situations which are indistinguishable in terms of whether I go right or left. This ensemble issue of course is central to QM and maybe we should not be so surprised about that – operationally we live in a world of ensembles, not of specific situations.

So this has nothing to do with ‘metaphysical connotations’ which is Wittgenstein’s way of blocking out any arguments that upset him – where did we bring metaphysics in here? We have two meanings of choose. 1. Being in a situation that may be reported as being one of feeling one has choice (to be purely behaviourist) and 2. A dynamic account of that situation that turns out not to agree with what 99.9% of the population assume it is when they feel they are choosing. People use choose in a discussion of dynamics as if it meant what it feels like in 1 but the reality is that this use is useless. It is a bit like making burnt offerings to the Gods. That may be a use for goats but not a very productive one. It turns out that the ‘family resemblance’ is a fake. Cousin Susan who has pitched up to claim her inheritance is an impostor. That is why I say that although to ‘feel I am choosing’ is unproblematic the word ‘choice’ has no useful meaning in physics. It is based on the same sort of error as thinking a wavefunction describes a ‘particle’ rather than an ensemble of particles. The problem with Wittgenstein is that he never thought through where his idea of use takes you if you take a careful scientific approach. Basically I think he was lazy. The common reason why philosophers get tied in knots with words is this one – that a word has several meanings that do not in fact have the ‘family relations’ we assume they have – this is true for knowledge, perceiving, self, mind, consciousness – all the big words in this field. Wittgenstein’s solution of going back to using words the way they are ‘usually’ used is nothing more than an ostrich sticking its head in the sand.

So would you not agree that in Wheeler’s experiments the experimenter does not have a choice in the sense that she probably feels she has? She is not able to perform two alternative manoeuvres on the measuring set up. She will perform a manoeuvre, and she may not yet know which, but there are no alternatives possible in this particular instance of the situation ensemble. She is no different from a computer programmed to set the experiment up a particular way before the particle went through the slits, contingent on a meteorite not shaking the apparatus after it went through the slits (causality is just as much an issue of what did not happen as what did). So if we think this sort of choosing tells us something important about physics we have misunderstood physics, I believe.

Nice response. I agree almost down the line.

As far as the meaning of words goes, I think that no word can have only one meaning because meaning, like all sense, is not assembled from fragments in isolation, but rather isolated temporarily from the totality of experience. Every word is a metaphor, and metaphor can be dialed in and out of context as dictated by the preference of the interpreter. Even when we are looking at something which has been written, we can argue over whether a chapter means this or that, whether or not the author intended to mean it. We accept that some meanings arise unintentionally within metaphor, and when creating art or writing a book, it is not uncommon to glimpse and develop meanings which were not planned.

To choose has a lower limit, between the personal and the sub-personal which deals with the difference between accidents and ‘on purpose’ where accidents are assumed to demand correction, and there is an upper limit on choice between the personal and the super-personal in which we can calibrate our tolerance toward accidents, possibly choosing to let them be defined as artistic or intuitive and even pursuing them to be developed.

I think that this lensing of choice into upper and lower limits is, like red and blue shift, a property of physics – of private physics. All experiences, feelings, words, etc can explode into associations if examined closely. All matter can appear as fluctuations of energy, and all energy can appear as changes in the behavior of matter. Reversing the figure-ground relation is a subjective preference. So too is reversing the figure-ground relation of choice and determinism a subjective preference. If we say that our choices are determined, then we must explain why there is such a thing as having a feeling that we choose. Why would there be a difference, for example, in the way that we breathe and the way that we intentionally control our breathing? Why would different areas of the brain be involved in voluntary control, and why would voluntary muscle tissue be different from smooth muscle tissue if there were no role for choice in physics? We have misunderstood physics in that we have misinterpreted the role of our involvement in that understanding.

We see physics as a collection of rules from which experiences follow, but I think that it can only be the other way around. Rules follow from experiences. Physics lags behind awareness. In the case of humans, our personal awareness lags behind our sub-personal awareness (as shown by Libet, etc) but that does not mean that our sub-personal awareness follows microphysical measurables. If you are going to look at the personal level of physics, you only have to recognize that you can intend to stand up before you stand up, or that you can create an opinion intentionally which is a compromise between select personal preferences and the expectations of a social group.

Previous Wittgenstein post here.

The Primacy of Spontaneous Unique Simplicity

This post is inspired by a long running (perpetual?) debate that I have going with a fellow consciousness aficionado who is a mathematics professor. He has some unique insights into artificial intelligence, particularly where advanced interpretations of the likes of Gödel, Turing, Kleene open up to speculations on the nature of machine consciousness. One of his results has been sort of a Multiple Worlds Interpretation in which numbers themselves would replace metaphysics, so that things like matter become inevitable illusions from within the experience of Platonic-arithmetic machines.

His theory is perhaps nowhere crystallized more understandably than in his Universal Dovetailer Argument (UDA) in which there is a single machine which runs through every possible combination of programs, thereby creating everything that can be possible from basic arithmetic elements such as numbers, addition, and multiplication. This is based on the assumption that computation can duplicate the machinery which generates human consciousness – which is the assumption that I question. Below, I try to run through a treatment of where the conceptual problems of computationalism lie, and how to get past them by inverting the order in which his UD (Universal Dovetailer) runs. Instead of a program that mechanically writes increasingly complex programs, some of which achieve a threshold of self-awareness, I use PIP (Primordial Identity Pansensitivity) to put sense first and numbers second. Here’s how it goes:

I. Trailing Dovetail Argument (TDA)

A. Computationalism makes two ontological assumptions which have not been properly challenged:

- The universality of recursive cardinality

- Complexity driven novelty.

Both of these, I intend to show, are intrinsically related to consciousness in a non-obvious way.

B. Universal Recursive Cardinality

Mathematics, I suggest, is defined by the assumption of universal cardinality: The universe is reducible to a multiplicity of discretely quantifiable units. The origin of cardinality, I suggest, is the partitioning or multiplication of a single, original unit, so that every subsequent unit is a recursive copy of the original.

Because recursiveness is assumed to be fundamental through math, the idea of a new ‘one’ is impossible. Every instance of one is a recurrence of the identical and self-same ‘one’, or an inevitable permutation derived from it. By overlooking the possibility of absolute uniqueness, computationalism must conceive of all events as local reproductions of stereotypes from a Platonic template rather than ‘true originals’.

A ‘true original’ is that which has no possible precedent. The number one would be a true original, but then all other integers represent multiple copies of one. All rational numbers represent partial copies of one. All prime numbers are still divisible by one, so not truly “prime”, but pseudo-prime in comparison to one. One, by contrast, is prime, relative to mathematics, but no number can be a true original since it is divisible and repeatable and therefore non-unique. A true original must be indivisible and unrepeatable, like an experience, or a person. Even an experience which is part of an experiential chain that is highly repetitive is, on some level unique in the history of the universe, unlike a mathematical expression such as 5 x 4 = 20, which is never any different than 5 x 4 = 20, regardless of the context.

I think that when we assert a universe of recursive recombinations that know no true originality, we should not disregard the fact that this strongly contradicts our intuitions about the proprietary nature of identity. A generic universe would seem to counterfactually predict a very low interest in qualities such as individuality and originality, and identification with trivial personal preferences. Of course, what we see is the precise opposite, as all celebrity is propelled by some suggestion of unrepeatability, and the fine tuning of lifestyle choices is arguably the most prolific and successful feature of consumerism.

If the experienced universe were strictly an outcropping of a machine that by definition can create only trivially ‘new’ combinations of copies, why would quantitatively recombined differences such as that between 456098209093457976534 and 45609420909345797353 seem insignificant to us, while the difference between a belt worn by Elvis and a copy of that belt is demonstrably significant to many people?

C. Complexity Driven Novelty

Because computationalism assumes finite simplicity, it provides only a pseudo-uniqueness by virtue of the relatively low statistical probability of large numbers overlapping each other precisely. There is no irreducible originality to the original Mona Lisa; only the vastness of the physical painting’s microstructure prevents it from being exactly reproduced very easily. Such a perfect reproduction, under computationalism, is indistinguishable from the original and therefore neither can be more original than the other (or if there are unavoidable differences due to uncertainty and incompleteness, they would be noise differences which would be of no consequence).

This is where information theory departs from realism, since reality provides memories and evidence of which Mona Lisa is new and which one was painted by Leonardo da Vinci at the beginning of the 16th century in Florence, Italy, Earth, Sol, Milky Way Galaxy*.

Mathematics can be said to allow for the possibility of novelty only in one direction; that of higher complexity. New qualities, by computationalism, must arise on the event horizons of something like the Universal Dovetailer. If that is the case, it seems odd that the language of qualia is one of rich simplicity rather than cumbersome computables. With comp, there can be no new ‘one’, but in reality, every human experience is exactly that – a new day, a new experience, even if it often seems much like the one before. Numbers don’t work that way. Each mechanical result is identical. A = A. A does not ‘seem much like the A before, yet in a new way’. This is a huge problem with mathematics and theoretical physics. They don’t get the connection between novelty and simplicity, so they hope to find it out in the vastness of super-human complexity.

II. Computation as Puppetry

I think that even David Chalmers, whom I respect immensely for his contributions to philosophy of mind and in communicating the Hard Problem, missed a subtle but important distinction. The difference between a puppet and a zombie, while superficially innocuous, has profound implications for the formulation of a realistic critique of Strong AI. When Chalmers introduced or popularized the term zombie in reference to hypothetical perfect human duplicates which lack qualia and subjective experience, he inadvertently let an unscientific assumption leak in.

A zombie is supernatural because it implies the presence of an absence. It is an animated, un-dead cadaver in which a living person is no longer present. The unconsciousness of a puppet, however, is merely tautological – it is the natural absence of presence of consciousness which is the case with any symbolic representation of a character, such as a doll, cartoon, or emoticon. A symbolic representation, such as Bugs Bunny, can be mass produced using any suitable material substance or communication media. Even though Bugs is treated as a unique intellectual property, in reality, the title to that property is not unique and can be transferred, sold, shared, etc.

The reason that Intellectual Property law is such a problem is because anyone can take some ordinary piece of junk, put a Bugs Bunny picture on it, and sell more of it than they would have otherwise. Bugs can’t object to having his good name sullied by hack counterfeiters, so the image of Bugs Bunny is used both to falsely endorse an inferior product and to falsely impugn the reputation of a brand. The problem is, any reasonable facsimile of Bugs Bunny is just as authentic, in an Absolute sense, as any other. The only true original Bugs Bunny is the one we experience through our imagination and the imagination of Mel Blanc and the Looney Tunes animators.

The impulse to reify the legitimacy of intellectual property into law is related to the impulse to project agency and awareness onto machines. As a branch of the “pathetic fallacy” which takes literally those human qualities which have been applied to non-humans as figurative conveniences of language, the computationalistic fallacy projects an assumed character-hood on the machine as a whole. It reasons (falsely, I think) that since all that our body can see of ourselves is a body, it is the body which is the original object from which the subject is produced through its functions. Such a conclusion, when we begin from mechanism, seems unavoidable at first.

III. Hypothesis

I propose that we reverse the two assumptions of mathematics above, so that

- Recursion is assumed to be derived from primordial spontaneity rather than the other way around.

- Novelty can only be meaningful if it re-asserts simplicity in addition to complexity.

This would mean:

- The expanding event horizon of the Universal Dovetailer would have to be composed of recordings of sensed experiences after the fact, rather than precursors to subjective simulation of the computation.

- Comp is untrue by virtue of diagonalization of immeasurable novelty against incompleteness.

- Sense out-incompletes arithmetic truth, and therefore leaves it frozen in stasis by comparison in every instant, and in eternity.

- Computation cannot animate anything except through the gullibility of the pathetic fallacy.

This may seem unfair or insulting to the many great minds who have been pioneering AI theory and development, but that is not my intent. By assertively pointing out the need to move from a model of consciousness which hinges on simulated spontaneity to a model in which spontaneity can never, by definition, be simulated, I am trying to express the importance and urgency of this shift. If I am right, the future of human understanding depends ultimately on our ability to graduate from the cul-de-sac of mechanistic supremacy to the more profound truth of rehabilitated animism. Feeling does compute because computation is how the masking of feeling into a localized unfeeling becomes possible.

IV. Reversing the Dovetailer

By uncovering the intrinsic antagonism between the above mathematical assumptions and the authentic nature of consciousness, it might be possible to ascertain a truer model of consciousness by reversing the order of the Universal Dovetailer (machine that builds the multiverse out of programs).

- The universality of recursive cardinality reverses as the Diagonalization of the Unique

- Complexity driven novelty can be reversed by Pushing the UD.
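For readers who have not met the term, dovetailing in the ordinary computer-science sense is only a scheduling trick: start one new program per stage, then advance every program already started by one step, so that no single non-halting program can starve the rest. The sketch below is my own minimal illustration of that schedule, not the UDA itself. The `toy_program` generator and the `dovetail` function are hypothetical names invented for this example, and the "programs" are trivial counters standing in for real computations.

```python
from itertools import count, islice

_HALTED = object()  # sentinel: marks a program that has finished


def toy_program(n):
    """Hypothetical stand-in for 'program n': emits (n, step) forever."""
    for step in count():
        yield (n, step)


def dovetail(make_program):
    """Interleave an unbounded family of programs.

    Stage k starts program k, then runs one step of every program
    started so far. Every step of every program is eventually reached.
    """
    running = []
    for k in count():
        running.append(make_program(k))
        for prog in running:
            result = next(prog, _HALTED)
            if result is not _HALTED:
                yield result


# First three stages: 1 + 2 + 3 = 6 steps in total.
first_steps = list(islice(dovetail(toy_program), 6))
print(first_steps)
```

Note what the schedule does and does not do: it guarantees coverage of every (program, step) pair, but each step is still a deterministic repetition of a fixed rule, which is exactly the feature that the two reversals in this section take issue with.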

A. Diagonalization of the Unique

Under the hypothesis that computation lags behind experience*, no simulation of a brain can ever catch up to what a natural person can feel through that brain, since the natural person is constantly consuming the uniqueness of their experience before it can be measured by anything else. Since the uniqueness of subjectivity is immeasurable and unprecedented within its own inertial frame, no instrument from outside of that frame can capture it before it decoheres into cascades of increasingly generic public reflections.

PIP flips the presumption of Universal Recursive Cardinality inherent in mathematics so that all novelty exists as truly original simplicity, as well as a relatively new complex recombination, such that the continuum of novelty extends in both directions. This, if properly understood, should be a lightning bolt that recontextualizes the whole of mathematics. It is like discovering a new kind of negative number. Things like color and human feeling may exploit the addressing scheme that complex computation offers, but the important part of color or feeling is not in that address, but in the hyper-simplicity and absolute novelty that ‘now’ corresponds to that address. The incardinality of sense means that all feelings are more primitive than even the number one or the concept of singularity. They are rooted in the eternal ‘becoming of one’; before and after cardinality. Under PIP, computation is a public repetition of what is irreducibly unrepeatable and private. Computation can never get ahead of experience, because computation is an a posteriori measurement of it.

For example, a computer model of what an athlete will do on the field that is based on their past performance will always fail to account for the possibility that the next performance will be the first time that athlete does something that they never have done before and that they could not have done before. Natural identities (not characters, puppets, etc) are not only self-diagonalizing, natural identity itself is self-diagonalization. We are that which has not yet experienced the totality of its lifetime, and that incompleteness infuses our entire experience. The emergence of the unique always cheats prediction, since all prediction belongs to the measurements of an expired world which did not yet contain the next novelty.

B. Pushing the UD – As conceived under comp, the UD is a program which pulls the experienced universe behind it as it extends the computed realm, faster than light, ahead of local appearances. It assumes all phenomena are built bottom up from generic, interchangeable bits. The hypothesis under PIP is that if there were a UD, it would be pushed by experience from the top down, as well as recollecting fragments of previous experiences from the bottom up. Each experience decays from immeasurable private qualia that is unique into public reflections that are generic recombinations of fixed elements. Reversing the Dovetailer puts universality on the defensive so that it becomes a storage device rather than a pseudo-primitive machina ex deus.

The primacy of sense is corroborated by the intuition that every measure requires a ruler: some example which is presented as an index for comparison. The uniqueness comes first, and the computability follows by imitation. The un-numbered Great War becomes World War I only in retrospect. The second war does not follow the rule of world wars, it creates the rule by virtue of its similarities. The second war is unprecedented in its own right, as an original second world war, but unlike the number two, it is not literally another World War I. In short, experiences do not follow from rules; rules follow from experience.

V. Conclusions

If we extrapolate the assumptions of Computationalism out, I think that they would predict that the painting of the Mona Lisa is what always happens under the mathematical conditions posed by a combination of celestial motions, cells, bodies, brains, etc. There can be no truly original artwork, as all art works are inevitable under some computable probability, even if the particular work is not predictable specifically by computation. Comp makes all originals derivatives of duplication. I suggest that it makes more sense that the primordial identity of sense experience is a fundamental originality from which duplication is derived. The number one is a generic copy – a one-ness which comments on an aspect of what is ultimately boundaryless inclusion rather than naming originality itself.

Under Multisense Realism (MSR), the sense-first view ultimately makes the most sense but it allows that the counter perspective, in which sense follows computation or physics, would appear to be true in another way, one which yields meaningful insights that could not be accessed otherwise.

When we shift our attention from the figure of comp in the background of sense to the figure of sense in the background of comp, the relation of originality shifts also. With sense first, true originality makes all computations into imposters. With computation first, arithmetic truth makes local appearances of originality artifacts of machine self-reference. Both are trivially true, but if the comp-first view were Absolutely true, there would be no plausible justification for such appearances of originality as qualitatively significant. A copy and an original should have no greater difference than a fifteenth copy and a sixteenth copy, and being the first person to discover America should have no more import than being the 1,588,237th person to discover America. The title of this post as 2013/10/13/2562 would be as good of a title as any other referenceable string.

*This is not to suggest that human experience lags behind neurological computation. MSR proposes a model called eigenmorphism to clarify the personal/sub-personal distinction in which neurological-level computation corresponds to sub-personal experience rather than personal level experience. This explains the disappearance of free will in neuroscientific experiments such as Libet et al. Human personhood is simple but deep. Simultaneity is relative, and nowhere is that more true than along the continuum between the microphysical and the macrophenomenal. What can be experimented on publicly is, under MSR, a combination of near isomorphic and near contra-isomorphic to private experience.

If You See Wittgenstein on the Road… (you know what to do)

Me butting into a language based argument about free will:

> I don’t see anything particularly contentious about Wittgenstein’s claim that the meaning of a word lies in how it is used.

Can something (a sound or a spelling) be used as a word if it has no meaning in the first place though?

> After all, language is just an activity in which humans engage in order to influence (and to be influenced by) the behaviour of other humans.

Not necessarily. I imagine that the origin of language has more to do with imitation of natural sounds and gestures. Onomatopoeia, for example. Clang, crunch, crash… these are not arbitrary signs which derive their meaning from usage alone. C. S. Peirce was on the right track with distinguishing between symbols (arbitrary signs whose meaning is attached by use alone), icons (signs which are isomorphic to their referent), and indices (signs which refer by inevitable association as smoke is an index of fire). Words would not develop out of what they feel like to say and to hear, and the relation of that feeling to what is meant.

> I’m inclined to regard his analysis of language in the same light as I regard Hume’s analysis of the philosophical notion of ‘substance’ (and you will be aware that I side with process over substance) – i.e. there is no essential essence to a word. Any particular word plays a role in a variety of different language games, and those various roles are not related by some kind of underlying essence but by what Wittgenstein referred to as a family resemblance. The only pertinent question becomes that of what role a word can be seen to play in a particular language game (i.e. what behavioural influences it has), and this is an empirical question – i.e. it does not necessarily have any metaphysical connotations.

While Wittgenstein’s view is justifiably influential, I think that it belongs to the perspective of modernism’s transition to postmodernity. As such, it is bound by the tenets of existentialism, in which isolation, rather than totality is assumed. I question the validity of isolation when it comes to subjectivity (what I call private physics) since I think that subjectivity makes more sense as a temporary partition, or diffraction within the totality of experience rather than a product of isolated mechanisms. Just as a prism does not produce the visible spectrum by reinventing it mechanically – colors are instead revealed through the diffraction of white light. Much of what goes on in communication is indeed language games, and I agree that words do not have an isolated essence, but that does not mean that the meaning of words is not rooted in a multiplicity of sensible contexts. The pieces that are used to play the language game are not tokens, they are more like colored lights that change colors when they are put together next to each other. Lights which can be used to infer meaning on many levels simultaneously, because all meaning is multivalent/holographic.

> So if I wish to know the meaning of a word, e.g. ‘choice’, I have to LOOK at how the word is USED rather than THINK about what kind of metaphysical scheme might lie behind the word (Philosophical Investigations section 66 and again in section 340).

That’s a good method for learning about some aspects of words, but not others. In some cases, as in onomatopoeia, that is the worst way of learning anything about it and you will wind up thinking that Pow! is some kind of commentary about humorous violence and has nothing to do with the *sound* of bodies colliding and its emotional impact. It’s like the anthropologist who gets the completely wrong idea about what people are doing because they are reverse engineering what they observe back to other ethnographers’ interpretations rather than to the people’s experienced history together.

> So, for instance, when Jane asks me “How should I choose my next car?” I understand her perfectly well to be asking about the criteria she should be employing in making her decision. Similarly with the word ‘free’ – I understand perfectly well what it means for a convict to be set free. And so to the term ‘free will’; As Hume pointed out, there is a perfectly sensible way to use the term – i.e. when I say “I did it of my own free will”, all I mean is that I was not coerced into doing it, and I’m conferring no metaphysical significance upon my actions (the compatibilist notion of free will in contrast to the metaphysical notion of free will).

Why would that phrase ‘free will’ be used at all though? Why not just say “I was not coerced”, or nothing at all? Without metaphysical (or private physical) free will, there would be no important difference between being coerced by causes within your body and being coerced by causes beyond your body. Under determinism, there is no such thing as not being coerced.

> The word ‘will’ is again used in a variety of language games, and the family resemblance would appear to imply something about the future (e.g. “I will get that paper finished today”). When used in the free will language game, it shares a significant overlap with the choice language game. But when we lift a word out of its common speech uses and confer metaphysical connotations upon it, Wittgenstein tells us that language has ceased doing useful work (as he puts it in the PI section 38, “philosophical problems arise when language goes on holiday”).

We should not presume that work is useful without first assuming free will. Useful, like will, is a quality of attention, an aesthetic experience of participation which may be far more important than all of the work in the universe put together. It is not will that must find a useful function, it is function that acquires use only through the feeling of will.

> And, of course, the word ‘meaning’ is itself employed in a variety of different language games – I can say that I had a “meaningful experience” without coming into conflict with Wittgenstein’s claim that the meaning of a word lies in its use.

Use is only one part of meaning. Wittgenstein was looking at a toy model of language that ties only to verbal intellect itself, not to the sensory-motor foundations of pre-communicated experience. It was a brilliant abstraction, important for understanding a lot about language, but ultimately I think that it takes the wrong things too seriously. All that is important about awareness and language would, under the Private Language argument, be passed over in silence.

> Regarding Wheeler’s delayed choice experiment, the experimenter clearly has a choice as to whether she will deploy a detector that ignores the paths by which the light reaches it, or a detector that takes the paths into account. In Wheeler’s scenario that choice is delayed until the light has already passed through (one or both of) the slits. I really can’t take issue with the word ‘choice’ as it is being used here.

I think that QM also will eventually be explained by dropping the assumption of isolation. Light is visual sense. It is how matter sees and looks. Different levels of description present themselves differently from different perspectives, so that if you put matter in the tiniest box you can get, you give it no choice but to reflect back the nature of the limitation of that specific measurement, and measurement in general.

Law of Conservation of Mystery

Law of Conservation of Mystery – Refers to the weird tendency for profound and fundamental issues to resist final resolution. Under eigenmorphism, both the microcosmic and cosmic frames (the infinitesimal and the great) relate to the fusion of chance and choice. It is as if the personal, macrocosmic range of awareness might act as a lens, bending the impersonality of the universe into a personal bubble, and in another sense, the personal bubble may project an illusion of impersonality outwardly. Both of these can be thought of not as illusions or distortions, but as a mutual relation between foreground and background which constitutes a tessellated synergy.

Both the sub-personal quirkiness of QM and the super-personal spookiness of divination (such as the I Ching or Tarot cards) exemplify that the perception of spiritual or mechanical absolutes is elusive and bound to the choice between belief and belief in disbelief. In both quantum mechanics and divination, the human participant is responsible for the interpretation – the individual is the prism which splits the beam of their interpretation between chance and choice…or the individual is responsible for remaining skeptical and resisting pseudoscientific claims. If we choose to allow choice on the cosmological level, even there, the continuum between luck which is intentionally or unintentionally fateful and karma which is divinely mechanistic reflects a difference in degree of universal approbation. The Law of Conservation of Mystery is particularly applicable to paranormal phenomena. Everything from UFOs to NDEs has passionately devoted supporters who are seen either as deluded fools stuck in a prescientific past or as prophets of enlightenment ahead of their time. What preserves that bifocal antagonism is technically eigenmorphism – it’s how different perceptual inertial frames maintain their character, but this special case of perceptual relativity is on us. It keeps us guessing and pushing further, but it also keeps us blind and stuck in our assumptions.

Superposition of the Absolute – The concept of superposition has enjoyed wide acceptance on the microcosmic level of quantum physics, but the idea of the Totality of the universe having a kind of multistable nature has not yet been widely considered as far as I know. The superposition of a wavefunction is tolerated because it helps us justify what we have measured, but any escalation of this kind of merged possibility to the macrocosm is strictly forbidden. Under PIP, the entire cosmos can be understood to be perpetually in superposition, or perhaps meta-superposition. Any event can be meaningful or meaningless according to one’s interpretation, but some events are more insistent upon meaningful interpretations than others.

Coincidence and pattern invariably count as evidence of the Absolute, whether the Absolute is regarded as mechanical law, divine will, or existential indifference. In this way, the insistence or existence of pattern can be understood as the wavefunction collapse of eternity. This is hard to grasp since eternity is the opposite of instantaneous, so that the ‘collapse’ is occurring in some sense across all time, weaving through it as a mysterious thread that pulls every participant forward into their own knowledge and delusion. On the Absolute scale, every lifetime is a single moment that echoes forever, and the echo of the forever-now into nested subroutines of smaller and smaller ‘nows’.

On the super-personal level (super-private/transpersonal/collective), when coincidence seems to become something more (synchronicity, precognition, or destiny), the collapse can be understood as a collapse on multiple nested frames at once: “Then it all made sense”, “Eureka!”, “I hit bottom”, etc. The multiplicity of conflicting possibilities can, for a moment, pop into a single focus that will resonate for a lifetime.

On the quantum level, probability is used in a particular way to explain behaviors of phenomena to which we attribute no intentional choice. Einstein’s famous objection ‘God does not play dice’ perhaps echoes a deep intuition that people have always had about the way that nature reflects a partially hidden order. This expectation is perhaps the common thread of all three epistemological branches – the theological, the philosophical, and the scientific. The value of prediction is particularly powerful for both scientific and theocratic authority as evidence of positive connection with either natural law or divine will. Science demands theories predict successfully, while religion demands prophetic promise. Under the Superposition of the Absolute, the ultimate natural law can be seen to become more flexible and porous, and the localization of divine will can be seen to have limitations and natural constraints. If PIP is to make a prediction itself, it would be to suggest that all wavefunctions share the identical, nested, non-well-founded superposition, one which can be understood as sense or perceptual relativity itself.

Wittgenstein in Wonderland, Einstein under Glass

If I understand the idea correctly – that is, if there is enough of the idea which is not private to Ludwig Wittgenstein that it can be understood by anyone in general or myself in particular, then I think that he may have mistaken the concrete nature of experienced privacy for an abstract concept of isolation. From Philosophical Investigations:

The words of this language are to refer to what can be known only to the speaker; to his immediate, private, sensations. So another cannot understand the language. – http://plato.stanford.edu/entries/private-language/

To begin with, craniopagus (brain conjoined) twins do actually share sensations that we would consider private.

The results of the test did not surprise the family, who had long suspected that even when one girl’s vision was angled away from the television, she was laughing at the images flashing in front of her sister’s eyes. The sensory exchange, they believe, extends to the girls’ taste buds: Krista likes ketchup, and Tatiana does not, something the family discovered when Tatiana tried to scrape the condiment off her own tongue, even when she was not eating it.

There should be no reason that it would not be technologically feasible to eventually export the connectivity which craniopagus twins experience through some kind of neural implant or neuroelectric multiplier. There are already computers that can be controlled directly through the brain.

Brain-computer interfaces that monitor brainwaves through EEG have already made their way to the market. NeuroSky’s headset uses EEG readings as well as electromyography to pick up signals about a person’s level of concentration to control toys and games (see “Next-Generation Toys Read Brain Waves, May Help Kids Focus”). Emotiv Systems sells a headset that reads EEG and facial expression to enhance the experience of gaming (see “Mind-Reading Game Controller”).

All that would be required in principle would be to reverse the technology to make them run in the receiving direction (computer>brain) and then imitate the kinds of neural connections which brain conjoined twins have that allow them to share sensations. The neural connections themselves would not be aware of anything on a human level, so it would not need to be public in the sense that sensations would be available without the benefit of a living human brain, only that the awareness could, to some extent, incite a version of itself in an experientially merged environment.

Because the success and precision of science have extended our knowledge so far beyond our native instruments, sometimes contradicting them successfully, we tend to believe that the view that diagnostic technology provides is superior to, or serves as a replacement for, our own awareness. While it is true that our own experience cannot reveal the same kinds of things that an fMRI or EEG can, I see that as a small detail compared to the wealth of value that our own awareness provides about the brain, the body, and the worlds we live in. Natural awareness is the ultimate diagnostic technology. Even though we can certainly benefit from a view outside of our own, there’s really no good reason to assume that what we feel, think, and experience isn’t a deeper level of insight into the nature of biochemical physics than we could possibly gain otherwise. We are evidence that physics does something besides collide particles in a void. Our experience is richer, smarter, and more empirically factual than what an instrument outside of our body can generate on its own. The problem is that our experience is so rich and so convoluted with private, proprietary knots, that we can’t share very much of it. We, and the universe, are made of private language. It is the public reduction of privacy which is temporary and localized…it’s just localized as a lowest common denominator.

While it is true that at this stage in our technical development, subjective experience can only be reported in a way which is limited by local social skills, there is no need to invoke a permanent ban on the future of communication and trans-private experience. Instead of trying to report on a subjective experience, it could be possible to share that experience through a neurological interface – or at least to exchange some empathic connection that would go farther than public communication.

If I had some psychedelic experience which allowed me to see a new primary color, I could not communicate that publicly. If I could just put on a device that allows our brains to connect, then someone else might be able to share the memory of what that looked like.

It seems to me that Wittgenstein’s private language argument (sacrosanct as it seems to be among the philosophically inclined) assumes privacy to be identical to isolation, rather than the primordial identity pansensitivity which I think it could be. If privacy is accomplished as I suggest, by the spatiotemporal ‘masking’ of eternity, then any experience that can be had is not a nonsense language to be ‘passed over in silence’, but rather a personally articulated fragment of the Totality. Language is only communication – intellectual measurement for sharing public-facing expressions. What we share privately is transmeasurable and inherently permeable to the Totality beneath the threshold of intellect.

Said another way, everything that we can experience is already shared by billions of neurons. Adding someone else’s neurons to that group should indeed be only a matter of building a synchronization technology. If, for instance, brain conjoined twins have some experience that nobody else has (like being the first brain conjoined twins to survive to age 40 or something), then they already share that experience, so it would no longer be a ‘private language’. The true future of AI may not be in simulating awareness as information, but in using information to share awareness. Certainly the success of social networking and MMPGs has shown us that what we really want out of computers is not for them to be us, but for us to be with each other in worlds we create.

I propose that rather than beginning from the position of awareness being a simulation to represent a reality that is senseless and unconscious, we should try assuming that awareness itself is the undoubtable absolute. I would guess that each kind of awareness already understands itself far better than we understand math or physics; it is only the vastness of human experience which prevents that understanding from being shared on all levels of itself, all of the time.

The way to understand consciousness would not be to reduce it to a public language of physics and math, since our understanding of our public experience is itself robotic and approximated by multiple filters of measurement. To get at the nature of qualia and quanta requires stripping down the whole of nature to Absolute fundamentals – beyond language and beyond measurement. We must question sense itself, and we must rehabilitate our worldview so that we ourselves can live inside of it. We should seek the transmeasurable nature of ourselves, not just the cells of our brain or the behavioral games that we have evolved as one particular species in the world. The toy model of consciousness provided by logical positivism and structural realism is, in my opinion, a good start, but in the wrong direction – a necessary detour which is uniquely (privately?) appropriate to a particular phase of modernism. To progress beyond that, I think, requires making the greatest cosmological 180 since Galileo. Einstein had it right, but he did not generalize relativity far enough. His view was so advanced in the spatialization of time and light that he reduced awareness to a one dimensional vector. What I think he missed is that if we begin with sensitivity, then light becomes a capacity with which to modulate insensitivity – which is exactly what we see when we share light across more than one slit – a modulation of masked sensitivity shared by matter independently of spacetime.

Jesse Prinz – On the (Dis)unity of Consciousness

Jesse Prinz gives a well developed perspective on neuronal synchronization as the correlate to attention and explores the question of binding. As always, neuroscience offers important details and clues to guide our understanding; however, knowledge alone may not be the pure and unbiased resource that we presume it to be. The assumptions that we make about a world in which we have already defined consciousness to be the behavior of neurons are not neutral. They direct, and in some cases self-validate, the approach as much as any cognitive bias could. For those who watch the video, here are my comments:

To begin with, aren’t unity and disunity qualitative discernments within consciousness? To me, the binding problem is most likely generated from the assumption that consciousness arises a posteriori of distinctions like part-whole, when in fact, awareness may be identical to the capacity for any distinction at all, and is therefore outside of any notion of ‘it-ness’, ‘unity’, or multiplicity. To me, it is clear that consciousness is unified, not-unified, both unified and not unified, and neither unified nor not unified. If we call consciousness ‘attention’, what should we call our awareness of the periphery of our awareness – of memories and intuitions?

The assumption that needs to be questioned is that sub-conscious awareness is different from consciousness in some material way. Our awareness of our awareness is of course limited, but that doesn’t mean that low level ‘processing’ is not also private experience in its own right.

Pointing to synchronization of neuronal activity as causing attention just pushes the hard problem down to a microphenomenal level. In order to synchronize with each other, neurons themselves would ostensibly have to be aware and pay attention to each other in some way.

Synchrony may not be the cause, but the symptom. Experience is stepped down from the top and up from the bottom in the same way that I am using codes of letters to make words which together communicate my top-down ideas. Neurons are the brain’s ‘alphabet’, they are not the author of consciousness, they are not sufficient for consciousness, but they are necessary for a human quality of consciousness. (In my opinion).

Later on, when he covers the idea of Primitive Unity, he dismisses holistic awareness on the basis that separate areas of the brain contribute separate information, but that is based on an expectation that the brain is the cause of awareness rather than the event horizon of privacy as it becomes public (and vice versa) on many levels and scales. The whole idea of ‘building whole experiences’ from atomistic parts assumes holism as a possibility, even as it seeks to deny that possibility. How can a whole experience be built without an expectation of wholes?

Attention is not what consciousness is, it is what consciousness does. In order for attention to exist, there must first be the capacity to receive sensation and to appreciate that sensation qualitatively. Only then, when we have something to pay attention to, can we find our capacity to participate actively in what we perceive.

As far as the refrigerator light idea goes, I think that is a good line of thought to explore with consciousness as I think it should lead to a questioning not only of the constancy of the light, but of the darkness as well. We cannot assume that either the naive state of light on or the sophisticated state of light on with door open/off when closed is more real than the other. Instead, each view only reflects the perspective which is getting the attention. When we look at consciousness from the point of view of a brain, we can only find explanations which break consciousness apart into subconscious and impersonal operations. It is a confirmation bias of a different sort which is never considered.

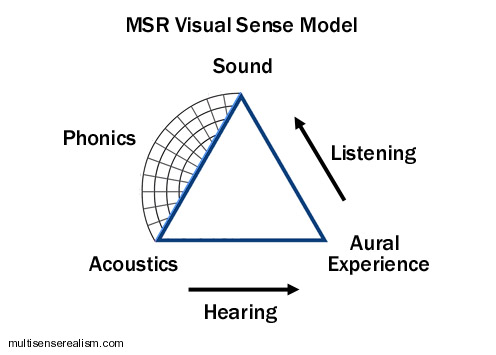

Light, Vision, and Optics

In the above diagram, the nature of light is examined from a semiotic perspective. As with Peircean sign trichotomies, and semiotics in general, the theme of interpretation is deconstructed as it pertains to meanings, interpreters, and objects. In this case the object or sign is “Optics”. This would be the classical, macroscopic appearance of light as beams or rays which can be focused and projected. Color wheels and primary colors are among the tools we use to orient our own human experience of vision with the universal nature of material illumination.

On the other side of the bottom of the triangle is “Vision”. This is the component which gives vision a visual quality. The arrows leading to and from vision denote the incoming receptivity from optics and the outgoing engagement toward “Light”. When we see, our awareness is informed from the bottom up and the top down. Seeing rides on top of the low level interactions of our cells, while looking is our way of projecting our will as attention to the visual field.

While optics dictate measurable relationships among physical properties of light on the macroscopic scale, ‘light’ is the hypothetical third partner in the sensory triad. Light is both the microphysical functions of quantum electrodynamics and the absolute frame of perceptual relativity from which various perceptual inertial frames emerge. The span between light and optics is marked by the polar graph and label “Image” to describe the role of resemblance and relativity. Image is a fusion of the cosmological truth of all that can be seen and illuminated (light), with the localization to a particular inertial frame (optics-in-space), and recapitulation by a particular interpreter – who is a time-feeler of private experience.

This triangle schema is not limited to light. Any sense can be used with varying degrees of success:

The overall picture can be generalized as well:

Note that the afferent and efferent sides of the triangle have a push-pull orientation, while the quanta side is an expanding graph. This is due to the difference between participation within spacetime, which is proprietary feeling, and the measured positions between participants on multiple scales or frames of participation. Sense is the totality of experience from which subjective extractions are derived. The physical mode describes the relation between each subjective experience and between other frames of subjective experience as representational tokens: bodies or forms. It’s all a kind of trail of breadcrumbs which leads back to the source, which is originality itself.
