Archive

John Weldon’s “To Be”

http://www.youtube.com/watch?v=pdxucpPq6Lc

If you say yes to the scientist, you are saying that originality is an illusion and simulation is absolute. Arithmetic can do so many things, but it can’t do something that can only be done once. Think of consciousness as not only that which can’t be done more than once, but as that which cannot even be fully completed one time. It doesn’t begin or end, and it is neither finite nor infinite, progressing or static, but instead it is the fundamental ability for beginnings and endings to seem to exist and to relate to each other sensibly. Consciousness is orthogonal to all process and form, but it reflects itself in different sensible ways through every appreciation of form.

The not-even-done-onceness of consciousness and the done-over-and-overness of its self reflection can be made to seem equivalent from any local perspective, since the very act of looking through a local perspective requires a comparison with prior perspectives, and therefore attention to the done-over-and-overness – the rigorously measured and recorded. In this way, the diagonalization of originality is preserved, but always behind our back. Paradoxically, it is only when we suspend our rigid attention and unexamine the forms presented within consciousness and the world that we can become the understanding that we expect.

Computation as Shadow of Consciousness

I’m borrowing a (fantastic) image from “Incredible Shadow Art Created From Junk” by Tim Noble & Sue Webster to make a point about the Map-Territory distinction and how it pertains to simulated intelligence.

Although a computer simulation produces an output over time, that does not mean that it is four dimensional. (Time can be an additional dimension on any number of dimensions – a cartoon can be 2D but still last fifteen minutes.) By using the terms N and N-1 dimensional, I’m trying to make the point that no matter how extensive the measurements we make and how compelling their coherence seems to be, the result can still be one dimension flatter than the original without our knowing it.

In the case of consciousness, I would say that it absolutely cannot be defined or described quantitatively, so that it truly is N dimensional, or trans-dimensional. Whatever number of dimensions we want to ascribe to some aspect of consciousness, that description will always be n-1 to the real thing. That is, in my understanding, the nature of representation – a destructive reduction of a presentation through a lower level (n-1 or n-x) medium which can be reconstructed at the higher level if, and only if, there is a higher level interpreter.

Some might object to the metaphor on the grounds that computation is not like a shadow, since changing a computation has predictable effects, while changing a shadow’s appearance does not affect the object. That’s true, but again, in this case, I am using N dimensional phenomena, so that interactiveness is part of the conservation of the temporal, before and after/cause and effect axis. In such a scenario, the flatland effect itself is modified somewhat and there are more dimensions shared. More shared dimensions = more conservation of sensory-motive agreement; however, there is also more that is not shared (feeling, sensations, colors, understanding, for example).

Some who are more familiar with the spectacular capabilities of cutting edge computation might object to the over-simplification, and say that I am criticizing an older generation of approaches to machine learning. There is some truth to that, but in the rarefied air of higher math, we get into what I call the super-impersonal level of intelligence. By comparison, the super-personal level of awareness could be described as mythic or poetic. Synchronicity can be concentrated through divination techniques like Ouija boards and Tarot cards. The Platonic ‘realm’ accessed through ultra-sophisticated computation is, in my view, a dual of that kind of divination, except rooted in the generic and repeatable rather than the instantaneous and volitional. The computer is an oracle to impersonal truths about truth itself, and can build locally applicable strategies from there; however, it cannot factor in any kind of personal feeling or intention.

The computational oracle is much more seductive than divination, since it does not leave the interpretation up to the audience. The computational oracle’s output is to be interpreted as objective fact…which is tremendously useful of course, when we are talking about objects. The danger is when we start believing what it has to say about subjects. As correct as the computer is about objective truth, it is equally incorrect about people. In the same/opposite way, the Ouija board is neither correct nor incorrect, but offers possibilities that tantalize the imagination.

Analogue, Brain Simulation Thread

Can you tell the difference between analogue equipment and a set of algorithms in code that can mimic all the known processes for the input and output of a guitar into that equipment? The answer is no, because pros can’t tell the difference. The entire analogue process has been sufficiently well modeled and encapsulated in the algorithms. The inputs and outputs are physically realistic where the input and output are important. That is what substrate modelling of brain processes in computational neuroscience is about, i.e. brain simulations.

Just because our analysis of what is going on in the brain reminds us of information processing does not mean that the brain is only an information processor, or that consciousness is conjured into existence as a kind of information-theoretic exhaust from the manipulation of bits.

What you are not considering is that beneath any mechanical or theoretical process (which is all that computation is as far as we know) is an intrinsic sensible-physical context which allows switches to load, store, and compare – allows recursive enumeration, digital identities,…a whole slew of rules about how generic functions work. This is already a low level kind of consciousness. That could still support Strong AI in theory, because bits being the tips of an iceberg of arithmetic awareness would make it natural to presume that low level awareness scales up neatly to high level awareness.

In practice, however, this does not have to be the case, and in fact what we see thus far is the opposite. The universally impersonal and uncanny nature of all artificial systems suggests the complete lack of personal presence. Regardless of how sophisticated the simulation, all imitations have some level at which some detector cannot be fooled. Consciousness itself however, like the wetness of water, cannot be fooled. No doll, puppet, or machine which is constructed from the outside in has any claim on sentience at the level which we have projected onto it. This is not about a substitution level, it is about the specific nature of sense being grounded in the unprecedented, genuine, simple, proprietary, and absolute rather than the opposite (probabilistic, reproducible, complex, generic, and local). From the low level to a high is not a difference in degree, but a difference in kind, even though the difference between the high level and low level is a difference in degree.

What I mean by that is that anything can be counted, but numbers cannot be reconstructed into what has been counted. I count my fingers…1, 2, 3, 4, 5. We have now destructively compressed the “information” of my hand, each unique finger and the thumb, into a figure. Five. Five can apply generically to anything, so we cannot imagine that five contains the recipe for fingers. This is obviously a reductio ad absurdum, but I introduce it not as a straw man but as a clear, simple illustration of the difference between sensory-motive realism and information-theoretic abstractions. You can map a territory, but you can’t make a territory out of a map regardless of how much the map reminds you of the territory.

So yes, digital representations can seem exactly like analog representations to us, but they are both representations within a sensory context rather than a sensory-motive presentation of their own. All forms of representation exist to communicate across space and time, bridging or eliding the entropic gaps in direct experience. It’s not a bad thing that modeling a brain will not result in a human consciousness, it’s a great thing. If it did, it would be criminal to subject living beings to the horrors of being developed and enslaved in a lab. Fortunately, by modeling these beautiful 4-D dynamic sculptures of the recordings of our consciousness, we can tap into something very new and different from ourselves, but without being a threat to us (unless we take it for granted that they have true understanding, then we’re screwed).

Chess, Media, and Art

I was listening to Brian Regan’s comedy bit about chess, and how a checkmate is such an unsatisfying ending compared to other games and sports. This is interesting from the standpoint of the insufficiency of information to account for all of reality. Because chess is a game that is entirely defined by logical rules, the ending is a mathematical certainty, given a certain number of moves. That number of moves depends on the computational resources which can be brought to bear on the game, so that a sufficiently powerful calculator will always beat a human player, since human computation is slower and buggier than semiconductors. The large-but-finite number of moves and games* will be parsed much more rapidly and thoroughly by a computer than a person could.

This deterministic structure is very different (as Brian Regan points out) from something like football, where the satisfaction of game play is derived explicitly from the consummation of the play. It is not enough to be able to claim that statistically an opponent’s win is impossible, because in reality statistics are only theoretical. A game played in reality rather than in theory depends on things like the weather and can require a referee. Computers are great at games which depend only on information, but have no sense of satisfaction in aesthetic realism.

In contrast to mechanical determinism, the appearance of clichés presents a softer kind of determinism. Even though there are countless ways that a fictional story could end, the tropes of storytelling provide a feedback loop between audiences and authors which can be as deterministic -in theory- as the literal determinism of chess. By switching the orientation from digital/binary rules to metaphorical/ideal themes, it is the determinism itself which becomes probabilistic. The penalty of making a movie which deviates too far from the expectations of the audience is that it will not be well received by enough people to make it worth producing. Indeed, most of what is produced in film, TV, and even gaming is little more than a skeleton of clichés dressed up in more clichés.

The pull of the cliché is a kind of moral gravity – a social conditioning in which normative thoughts and feelings are reinforced and rewarded. Art and life do not reflect each other so much as they reflect a common sense of shared reassurance in the face of uncertainty. Fine art plays with breaking boundaries, but playfully – it pretends to confront the status quo, but it does so within a culturally sanctioned space. I think that satire is tolerated in Western-objective society because of its departure from the subjective (“Eastern”) worldview, in which meaning and matter are not clearly divided. Satire is seen as not very threatening to the material-commercial machine, which does not depend on human sentiments to run, and the controversy that satire produces can also be used to drive consumer demand. Something like The Simpsons can be both a genuinely subversive comedy and a fully merchandized, commercial meme-generating partner of FOX.

What lies between the literally closed world of logical rules and the figuratively open world of surreal ideals is what I would call reality. The games that are played in fact rather than just in theory, which share timeless themes but also embody a specific theme of their own are the true source of physical sustenance. Reality emerges from the center out, and from the peripheries in.

*“A guesstimate is that the maximum logical possible positions are somewhere in the region of +-140,100,033, including trans-positional positions, giving the approximation of 4,670,033 maximum logical possible games”

Consciousness and The Interface Theory of Perception, Donald Hoffman

A very good presentation with a lot of overlap with my views. He proposes similar ideas about a sensory-motive primitive and the nature of the world as experience rather than “objective”. What is not factored in is the relation between local and remote experiences and how that relation actually defines the appearance of that relation. Instead of seeing agents as isolated mechanisms, I think they should be seen as more like breaches in the fabric of insensitivity.

It is a little misleading to say (near the end) that a spoon is no more public than a headache. In my view what makes a spoon different from a headache is precisely that the metal is more public than the private experience of a headache. If we make the mistake of assuming an Absolutely public perspective*, then yes, the spoon is not in it, because the spoon is different things depending on how small, large, fast, or slow you are. For the same reason, however, nothing can be said to be in such a perspective. There is no experience of the world which does not originate through the relativity of experience itself. Of course the spoon is more public than a headache, in our experience. To think otherwise as a literal truth would be psychotic or solipsistic. In the Absolute sense, sure, the spoon is a sensory phenomenon and nothing else, it is not purely public (nothing is), but locally, it certainly is ‘more’ public.

Something that he mentioned in the presentation had to do with linear algebra and using a matrix of columns which add up to be one. To really jump off into a new level of understanding consciousness, I would think of the totality of experience as something like a matrix of columns which add up, not to 1, but to “=1”. Adding up to 1 is a good enough starting point, as it allows us to think of agents as holes which feel separate on one side and united on the other. Thinking of it as “=1” instead makes it into a portable unity that does something. Each hole recapitulates the totality as well as its own relation to that recapitulation: ‘just like’ unity. From there, the door is open to universal metaphor and local contrasts of degree and kind.
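For readers who want the plain “adds up to 1” starting point made concrete, here is a minimal sketch. I am assuming that the matrix mentioned in the presentation is what is usually called a column-stochastic matrix (non-negative entries, each column summing to 1); the 3x3 numbers and the function names are hypothetical and purely illustrative. It only shows the arithmetic property, not the “=1” idea, which is not something I would expect code to capture.

```python
# Hypothetical illustration: a column-stochastic matrix (assumed reading of
# "a matrix of columns which add up to be one"). The values are made up.

def column_sums(matrix):
    """Return the sum of each column of a list-of-rows matrix."""
    return [sum(row[j] for row in matrix) for j in range(len(matrix[0]))]

def is_column_stochastic(matrix, tol=1e-9):
    """True if every entry is non-negative and every column sums to 1."""
    non_negative = all(x >= 0 for row in matrix for x in row)
    return non_negative and all(abs(s - 1.0) <= tol for s in column_sums(matrix))

def apply(matrix, vector):
    """Multiply the matrix by a column vector."""
    return [sum(row[j] * vector[j] for j in range(len(vector))) for row in matrix]

KERNEL = [
    [0.5, 0.2, 0.00],
    [0.5, 0.3, 0.25],
    [0.0, 0.5, 0.75],
]

if __name__ == "__main__":
    weights = [0.2, 0.3, 0.5]          # a distribution: entries sum to 1
    updated = apply(KERNEL, weights)   # still sums to 1 after the update
    print(is_column_stochastic(KERNEL), sum(updated))
```

The design point is only that such a matrix conserves the total: whatever goes in as a whole comes out as a whole, redistributed.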

*Mathematics invites us to do this, because it inverts the naming function of language. Instead of describing a phenomenon in our experience through a common sense of language, math enumerates relationships between theories about experience. The difference is that language can either project itself publicly or integrate public-facing experiences privately, but math is a language which can only face itself. Through math, reflections of experience are fragmented and re-assembled into an ideal rationality – the ideal rationality which reflects the very ideal of rationality that it embodies.

Questioning the Sufficiency of Information

Searle’s “Chinese Room” thought experiment tends to be despised by strong AI enthusiasts, who seem to take issue with Searle personally because of it, accusing both the allegory and the author of being stupid. The Systems Reply is the response offered most often: the man in the room may not understand Chinese, but surely the whole system, including the book of translation, must be considered to understand Chinese.

Here then is a simpler and more familiar example of how computation can differ from natural understanding, one which is not susceptible to any mereological Systems argument.

If any of you have used passwords which are based on a pattern of keystrokes rather than the letters on the keys, you know that you can enter your password every day without ever knowing what it is you are typing (something with a #r5f^ in it…?).

I think this is a good analogy for machine intelligence. By storing and copying procedures, a pseudo-semantic analysis can be performed, but it is an instrumental logic that has no way to access the letters of the ‘human keyboard’. The universal machine’s keyboard is blank and consists only of theoretical x,y coordinates where keys would be. No matter how good or sophisticated the machine is, it will still have no way to understand what the particular keystrokes “mean” to a person, only how they fit in with whatever set of fixed possibilities has been defined.
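The keystroke-pattern analogy can be sketched in a few lines. This is an illustration under assumptions of my own: the pattern is stored as hypothetical (row, column) key positions, and the two layouts are simplified three-row versions of QWERTY and Dvorak with no shift or number keys. The procedure that replays the pattern never consults the letters; only a layout table, supplied from outside, turns positions into characters.

```python
# Simplified letter rows only; real keyboards have more keys and modifiers.
QWERTY = ("qwertyuiop", "asdfghjkl;", "zxcvbnm,./")
DVORAK = ("',.pyfgcrl", "aoeuidhtns", ";qjkxbmwvz")

# Hypothetical pattern: a column of three keys, then one key to the right.
PATTERN = [(0, 3), (1, 3), (2, 3), (1, 4)]

def type_pattern(pattern, layout):
    """Replay key positions against a layout and return the characters produced."""
    return "".join(layout[row][col] for row, col in pattern)

if __name__ == "__main__":
    # The same stored procedure yields different "letters" on each keyboard.
    print(type_pattern(PATTERN, QWERTY))
    print(type_pattern(PATTERN, DVORAK))
```

The pattern itself is identical in both runs; what it “spells” depends entirely on which keyboard the coordinates are laid over.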

Taking the analogy further, the human keyboard only applies to public communication. Privately, we have no keys to strike, and entire paragraphs or books can be represented by a single thought. Unlike computers, we do not have to build our ideas up from syntactic digits. Instead the public-facing computation follows from the experienced sense of what is to be communicated in general, from the top down, and the inside out.

How large does a digital circle have to be before the circumference seems like a straight line?

Digital information has no scale or sense of relation. Code is code. Any rendering of that code into a visual experience of lines and curves is a question of graphic formatting and human optical interaction. With a universe that assumes information as fundamental, the proximity-dependent flatness or roundness of the Earth would have to be defined programmatically. Otherwise, it is simply “the case” that a person is standing on the round surface of the round Earth. Proximity is simply a value with no inherent geometric relevance.

When we resize a circle in Photoshop, for instance, the program is not transforming a real shape, it is erasing the old digital circle and creating a new, unrelated digital circle. Like a cartoon, the relation between the before and after, between one frame and the “next” is within our own interpretation, not within the information.
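One way to put a number on the question in the heading is the sagitta, the maximum gap between an arc and its chord. The sketch below assumes, purely for illustration, that an arc “seems straight” when that gap is under half a pixel across a viewport of a given width; the half-pixel threshold and the function names are my assumptions, not anything in the digital circle. The answer changes with the tolerance that the viewer brings.

```python
import math

def sagitta(radius, chord_width):
    """Maximum gap between a circular arc and the chord of the given width."""
    half = chord_width / 2.0
    return radius - math.sqrt(radius * radius - half * half)

def min_radius_for_flatness(chord_width, tolerance=0.5):
    """Radius at which the arc deviates from its chord by exactly `tolerance`.

    Solving s = r - sqrt(r^2 - (w/2)^2) for r gives r = w^2 / (8 s) + s / 2.
    The default tolerance of half a pixel is an assumed perceptual threshold.
    """
    return chord_width * chord_width / (8.0 * tolerance) + tolerance / 2.0

if __name__ == "__main__":
    for width in (10, 100, 1000):
        print(width, min_radius_for_flatness(width))
```

Note that the radius needed grows with the square of the viewport width, so “flat” is a statement about the viewing window and the threshold rather than about the circle.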

Playing Cards With Qualia

Here is an example to help illustrate what I think is the relationship between information and qualia that makes the most sense.

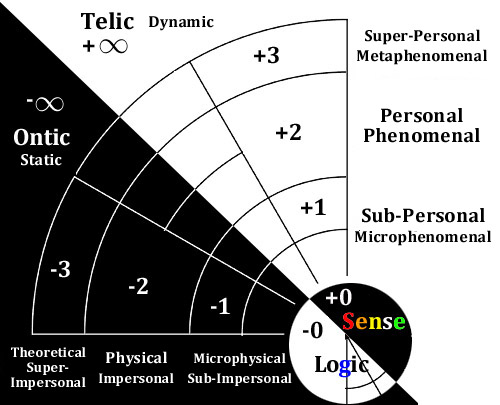

Here I am using the delta (Δ) to denote “difference”, n to mean “numbers” or information, kappa for aesthetic “kind” or qualia, and delta n degree (Δn°) for “difference in degree”.

The formula on top means “The difference between numbers and aesthetic qualities is not a difference in degree.” This means that there is no known method by which a functional output of a computation can acquire an aesthetic quality, such as a color, flavor, or feeling.

Reversing the order in the bottom formula, I am asserting that the difference between qualia and numbers actually is only a difference in degree, not a difference in kind. That means that we can make numbers out of qualia, by counting them, but numbers can’t make qualia no matter what we do with them. This is to say also that subjects can reduce each other to objects, but objects cannot become subjects.

Let’s use playing cards as an example.

Each card has a quantitative value, A-K. The four suits, their colors and shapes, the portraits on the royal cards…none of them add anything at all to the functionality of the game. Every card game ever conceived can be played just as well with only four sets of 13 number values.

The view which is generally offered by scientific or mathematical accounts would be that the nature of hearts, clubs, diamonds, kings, etc. can differ only in degree from the numbers, and not in kind. Our thinking about the nature of consciousness puts the brain ahead of subjective experience, so that all feelings and qualities of experience are presumed to be representations of more complicated microphysical functions. This is mind-brain identity theory. The mind is the functioning of the brain, so that the pictures and colors on the cards would, by extension, be representations of the purely logical values.

To me, that’s obviously bending over backward to accommodate a prejudice toward the quantitative. The functionalist view prefers to preserve the gap between numbers and suits and fill it with faith, rather than consider the alternative that now seems obvious to me: You can turn the suit qualities into numbers easily – just enumerate them. The four suits can be reduced to 00,01,10, and 11. A King can be #0D, an Ace can be 01, etc. There is no problem with this, and indeed it is the natural way that all counting has developed: The minimalist characterization of things which are actually experienced qualitatively.
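The enumeration step is easy to show. In this sketch the suits take the two-bit codes 00, 01, 10, and 11 and the ranks take the values 1 (Ace) through 13 (King, hex 0D), as in the text; which suit gets which code, and packing both into a single integer, are arbitrary choices of mine. Going from card to number is a lookup and a shift. Going back yields only numbers, unless someone supplies the table of names again.

```python
# Arbitrary assignment of suits to the four two-bit codes (an assumption).
SUIT_CODES = {"clubs": 0b00, "diamonds": 0b01, "hearts": 0b10, "spades": 0b11}

RANKS = ["A", "2", "3", "4", "5", "6", "7", "8", "9", "10", "J", "Q", "K"]
RANK_CODES = {name: value for value, name in enumerate(RANKS, start=1)}

def encode(rank, suit):
    """Pack a card into one integer: two suit bits above four rank bits."""
    return (SUIT_CODES[suit] << 4) | RANK_CODES[rank]

def decode(code):
    """Unpack to (rank_value, suit_bits); the result is still only numbers."""
    return code & 0b1111, code >> 4

if __name__ == "__main__":
    king_of_hearts = encode("K", "hearts")
    print(format(king_of_hearts, "06b"), decode(king_of_hearts))
```

Nothing in the packed integer says “heart” or “crown”; recovering those words means consulting `SUIT_CODES` and `RANKS`, which were written by someone who already knew the cards.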

The functionalist view requires the opposite transformation, that the existence of hearts and clubs, red and black, is only possible through a hypothetical brute emergence by which computations suddenly appear heart shaped or wearing a crown, because… well because of complexity, or because we can’t prove that it isn’t happening. The logical fallacy being invoked is Affirming the Consequent:

If Bill Gates owns Fort Knox, then he is rich.

Bill Gates is rich.

Therefore, Bill Gates owns Fort Knox.

If the brain is physical, then it can be reduced to a computation.

We are associated with the activity of a brain.

Therefore, we can be reduced to a computation.
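The invalidity of the Bill Gates form can be checked mechanically with a truth table. This is a small sketch with hypothetical helper names: an argument form counts as valid only if no assignment of truth values makes every premise true and the conclusion false. It tests the bare form “if P then Q; Q; therefore P” against modus ponens, and says nothing about whether any particular premise is true.

```python
from itertools import product

def implies(p, q):
    """Material implication: false only when p is true and q is false."""
    return (not p) or q

def is_valid(premises, conclusion):
    """Valid if no truth assignment satisfies all premises but not the conclusion."""
    for p, q in product([True, False], repeat=2):
        if all(premise(p, q) for premise in premises) and not conclusion(p, q):
            return False
    return True

# Modus ponens: if P then Q; P; therefore Q.
MODUS_PONENS = is_valid(
    [lambda p, q: implies(p, q), lambda p, q: p],
    lambda p, q: q,
)

# Affirming the consequent: if P then Q; Q; therefore P.
AFFIRMING_THE_CONSEQUENT = is_valid(
    [lambda p, q: implies(p, q), lambda p, q: q],
    lambda p, q: p,
)

if __name__ == "__main__":
    print(MODUS_PONENS, AFFIRMING_THE_CONSEQUENT)
```

The counterexample the check finds is P false and Q true: Bill Gates is rich, and does not own Fort Knox.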

To correct this, we should invert our assumption, and look to a model of the universe in which differences in kind can be quantified, but differences in degree cannot be qualified. Qualia reduce to quanta (by degree), but quanta do not enrich to qualia (at all).

To take this to the limit, I would add the players of the card game to the pictures, suits, and colors of the cards, as well as their intention and enthusiasm for winning the game. The qualia of the cards are more “like them” and help bridge the gap to the quanta of the cards, which are more like the cards themselves – digital units in a spatio-temporal mosaic.

Free Will Isn’t a Predictive Statistical Model

Free will is a program guessing what could happen if resources were spent executing code before having to execute it.

I suggest that Free Will is not merely the feeling of predicting effects, but is the power to dictate effects. It gets complicated because when we introspect on our own introspection, our personal awareness unravels into a hall of sub-personal mirrors. When we ask ourselves ‘why did I eat that pizza’, we can trace back a chain of ‘because…I wanted to. Because I was hungry…Because I saw a pizza on TV…’ and we are tempted to conclude that our own involvement was just to passively rubber stamp a course of multiple-choice actions that were already in motion.

If instead, we look at the entire ensemble of our responses to the influences, from TV image, to the body’s hunger, to the preference for pizza, etc as more of a kaleidoscope gestalt of ‘me’, then we can understand will on a personal level rather than a mechanical level. On the sub-personal level, where there is processing of information in the brain and competing drives in the mind, we, as individuals do not exist. This is the mistake of the neuroscientific experiments thus far. They assume a bottom-up production of consciousness from unconscious microphysical processes, rather than seeing a bi-directional relation between many levels of description and multiple kinds of relation between micro and macro, physical and phenomenal.

My big interest is in how intention causes action

I think that intention is already an action, and in a human being that action takes place on the neurochemical level if we look at it from the outside. Translating the motive effect of the brain into the motor effect of the rest of the body involves the sub-personal imitation of the personal motive, or you could say the diffraction of the personal motive as it is made into an increasingly impersonal, slower, larger, and more public-facing (mechanical) process.
